Real-time ray tracing in games is picking up speed, and with the new Xbox Series X and PlayStation 5 consoles its breakthrough is almost certain. But how do GPUs actually accelerate the calculation of the rays? A look at the technology provides answers.

Real-time ray tracing in hardware

It has been 13 years since guest author Daniel Pohl reported on his research in this area in the first part of his series "Raytracing in Games", which then continued over four years on BitcoinMinersHashrate. For his diploma thesis, he had implemented Quake 4 (and, before that, Quake III Arena) with ray tracing and discussed the advantages and disadvantages of rendering this way. At that time the calculation was done by the CPU, because ray tracing was implemented “in software”.

Further articles followed on the latest developments and on the ray tracing implementation of Enemy Territory: Quake Wars, along with an outlook on what would be possible with ray tracing in the cloud. During that period, Pohl pushed the topic forward as an Intel employee. After the beginning of 2012, things became quieter.

That calm ended at the end of 2018 with the launch of Nvidia's GeForce RTX graphics cards. Ray tracing has always been described as the holy grail of graphics. Ever since Nvidia's Turing architecture brought the technology “in hardware” and ray tracing was used in PC games for the first time, the topic has been omnipresent.

There are currently only five AAA games that use individual ray-traced effects for more realistic visuals: Battlefield V, Shadow of the Tomb Raider, Metro: Exodus, Call of Duty: Modern Warfare and Control. With Xbox Series X and PlayStation 5, both based on AMD's RDNA2 architecture, the topic will undoubtedly pick up speed again at the end of the year, and a breakthrough is virtually certain in the medium term. Reason enough to take an up-to-date look at how ray tracing is implemented in games.

This article is based primarily on a scientific paper from 2017: "Toward Real-Time Ray Tracing: A Survey on Hardware Acceleration and Microarchitecture Techniques" by researchers from Qualcomm and Tsinghua University in China, which was published in the journal ACM Computing Surveys and is freely available as a PDF on GitHub. At the time, the researchers were very confident that ray tracing in hardware would reach the mass market within a few years. On that point, at least, they were not wrong. Will they prove right in other respects as well?

We envision that a wave of research efforts on ray-tracing hardware will blossom in a few years.

Rasterization is currently the standard

Producing a two-dimensional image on a PC from a three-dimensional scene is called "rendering". There are several ways to render images. In computer games, rasterization predominates today. But what exactly is behind it?

In the first step, a three-dimensional scene is broken down into polygonal (many-sided) base surfaces, which are also called "primitives". During rasterization, the polygons are broken down into fragments that correspond to the later pixels. The individual pixels are then assigned color and brightness values, a step known as “shading”. The finished image is then output.
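
The fragment step can be illustrated with a minimal Python sketch. It is not how a GPU implements rasterization; it only shows the idea of testing which pixel centres a single triangle covers and assigning them a color. The function names `edge` and `rasterize` and the flat "shading" are assumptions made for this illustration.

```python
def edge(a, b, p):
    """Signed area test: >= 0 if p lies on the inner side of the edge a -> b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize(triangle, color, width, height):
    """Return {(x, y): color} for every pixel whose centre the triangle covers."""
    a, b, c = triangle
    if edge(a, b, c) < 0:
        # Swap two vertices so all three edge tests share the same sign
        b, c = c, b
    fragments = {}
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel centre
            if edge(a, b, p) >= 0 and edge(b, c, p) >= 0 and edge(c, a, p) >= 0:
                # "Shading" is reduced to a flat color in this sketch
                fragments[(x, y)] = color
    return fragments


if __name__ == "__main__":
    frags = rasterize(((0, 0), (4, 0), (0, 4)), (255, 0, 0), 4, 4)
    for y in range(4):
        print("".join("#" if (x, y) in frags else "." for x in range(4)))
```

Each pixel is tested independently of all others, which is exactly the property that makes the process easy to spread across many shader units.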

Rendering based on rasterization allows the calculation of the image information to be parallelized to a high degree, since shading can be carried out in parallel for all polygons. On modern graphics cards with several thousand shaders, very complex scenes with many effects can be displayed simultaneously. This ease of parallelization is the fundamental advantage of rasterization.

Rasterization is quick because it simplifies

However, the fundamental prerequisite for successful rendering is that the polygons are independent of one another and that global parameters, such as the lighting of the scene and thus the shadows cast, have been calculated and saved in advance. The realistic representation of effects that span multiple polygons, such as global and indirect lighting, shadows, refractions and reflections, is a bottleneck. In the end, they can only be created convincingly if several polygons are considered at the same time, which can lead to a considerable performance loss on conventional graphics cards. Light and shadow in particular are only ever an approximation that is very laborious to develop, albeit one that looks very good, at least at first glance.

To push realism in computer games further with rasterization, ever more elaborate tricks are used to make the task fit the rasterizer. Examples are screen space reflections, ambient occlusion and global illumination.

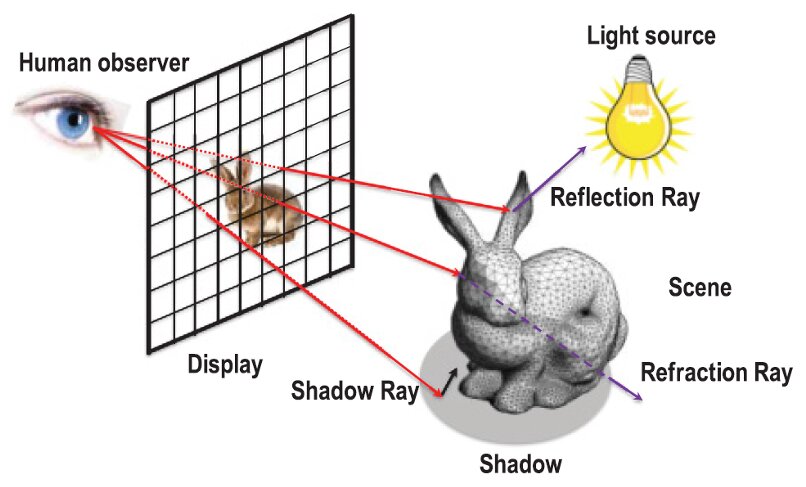

The alternative rendering method, ray tracing, is better suited to such effects. Here, rays are shot from the camera through the individual pixels into the scene, and the reflections, scattering or refraction of those rays are recorded. Ray tracing has long been used to produce photorealistic images. It is the most prominent method for CGI effects in feature films and for "Computer-Aided Design" (CAD). It offers immense advantages, but also disadvantages.

How does ray tracing work?

Ray tracing emulates human perception. In reality, light rays are scattered or reflected by objects and then reach the human eye. The ray tracing algorithm goes the opposite way and sends rays from the viewer into the scene. When these primary rays hit a surface, different secondary rays are spawned depending on the surface's properties. They are used to calculate shadows or reflections and, for transparent objects, refraction. Further rays can be spawned from these if necessary, for example in the case of multiple reflections.
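
How a primary ray is sent "from the viewer into the scene" can be sketched in a few lines of Python. The pinhole camera at the origin looking along the negative z axis, the 90 degree field of view and the name `primary_ray` are assumptions for this illustration; the article itself does not prescribe a camera model.

```python
import math


def primary_ray(px, py, width, height, fov_deg=90.0):
    """Return (origin, direction) of the ray through the centre of pixel (px, py).

    Assumed camera: pinhole at the origin, looking along -z, y pointing up.
    """
    aspect = width / height
    scale = math.tan(math.radians(fov_deg) / 2)
    # Map the pixel centre to screen coordinates in [-1, 1], then apply the FOV
    x = (2 * (px + 0.5) / width - 1) * aspect * scale
    y = (1 - 2 * (py + 0.5) / height) * scale
    direction = (x, y, -1.0)
    length = math.sqrt(sum(c * c for c in direction))
    return (0.0, 0.0, 0.0), tuple(c / length for c in direction)


if __name__ == "__main__":
    # One primary ray per pixel of a tiny 3x3 image
    for py in range(3):
        for px in range(3):
            print((px, py), primary_ray(px, py, 3, 3)[1])
```

Firing several primary rays per pixel for higher image quality would amount to replacing the fixed `0.5` offsets with slightly different sub-pixel positions.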

By the nature of the algorithm, ray tracing is well suited to many cores, since the rays can be calculated in parallel. However, memory accesses are irregular and difficult to predict, which is why ray tracing runs less efficiently on conventional graphics card shaders than rasterization. A complex scene with more than a million polygons illustrates this vividly.

Of the theoretical throughput of 1.1 billion rays per second (GigaRays/s) that a conventional GTX 1080 Ti can calculate, only 300 million rays per second remain in practice because of the load caused by memory accesses. Without hardware acceleration, ray tracing is therefore difficult to implement on graphics cards. The benchmark Neon Noir (https://www.computerbase.de/2019-11/crytek-neon-noir-community-benchmark/) developed by Crytek shows that it is nevertheless possible. However, it is unclear to what extent the effects in this benchmark could be optimized more heavily, thanks to its fixed camera path, than would be possible in real games.

The fundamental algorithm for ray tracing was presented by Turner Whitted at the SIGGRAPH conference in 1980. As with rasterization, the starting point is a scene broken down into polygons. The algorithm consists of a loop that, in the simplest case, iterates over all pixels of the camera view and emits one primary ray for each. At 4K resolution and 60 FPS, that is almost 500 million primary rays per second. For higher image quality, several primary rays per pixel can be fired from slightly different positions. The following steps then apply to each primary ray:

1. The polygon that the ray hits first is determined.

2. A secondary ray is fired toward each light source to check whether the hit point lies in shadow.

3. If the surface is reflective, a corresponding reflected secondary ray is fired.

4. If the surface is transparent, a refracted secondary ray is fired.

5. Once all the brightness information has been collected, it is passed on for shading together with the color of the polygon.
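
The steps listed above can be sketched as a small recursive function in Python. This is a simplified illustration under stated assumptions, not Whitted's original code: spheres stand in for polygons to keep the intersection test short, lights are plain points, shading is a basic diffuse term with a fixed ambient value of 0.1, and the refraction step is left out. All names (`Sphere`, `trace`, `closest_hit`) are hypothetical.

```python
import math
from dataclasses import dataclass


def add(a, b): return tuple(x + y for x, y in zip(a, b))
def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def mul(a, s): return tuple(x * s for x in a)
def dot(a, b): return sum(x * y for x, y in zip(a, b))


@dataclass
class Sphere:
    center: tuple
    radius: float
    color: tuple               # RGB components in 0..1
    reflectivity: float = 0.0  # 0 = matte, 1 = perfect mirror


def hit_sphere(origin, direction, s):
    """Distance to the nearest hit in front of the ray, or None (unit direction)."""
    oc = sub(origin, s.center)
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - s.radius ** 2)
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 1e-4 else None  # small offset avoids self-intersection


def closest_hit(origin, direction, scene):
    best = None
    for s in scene:  # brute force: every object is tested
        t = hit_sphere(origin, direction, s)
        if t is not None and (best is None or t < best[0]):
            best = (t, s)
    return best


def trace(origin, direction, scene, lights, depth=0, max_depth=3):
    hit = closest_hit(origin, direction, scene)           # find the first hit
    if hit is None:
        return (0.0, 0.0, 0.0)                            # background
    t, s = hit
    point = add(origin, mul(direction, t))
    normal = mul(sub(point, s.center), 1.0 / s.radius)
    brightness = 0.1                                      # assumed ambient term
    for light in lights:                                  # one shadow ray per light
        to_light = sub(light, point)
        dist = math.sqrt(dot(to_light, to_light))
        l_dir = mul(to_light, 1.0 / dist)
        blocker = closest_hit(point, l_dir, scene)
        if blocker is None or blocker[0] > dist:
            brightness += max(0.0, dot(normal, l_dir))
    color = mul(s.color, min(1.0, brightness))            # shading
    if s.reflectivity > 0 and depth < max_depth:          # reflected secondary ray
        r_dir = sub(direction, mul(normal, 2 * dot(direction, normal)))
        mirrored = trace(point, r_dir, scene, lights, depth + 1, max_depth)
        color = add(mul(color, 1 - s.reflectivity), mul(mirrored, s.reflectivity))
    return color


if __name__ == "__main__":
    scene = [Sphere((0.0, 0.0, -5.0), 1.0, (1.0, 0.0, 0.0), reflectivity=0.3)]
    print(trace((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), scene, [(0.0, 4.0, -1.0)]))
```

Even in this toy version, a single primary ray triggers one shadow ray per light and possibly a chain of reflected rays, each of which runs the full closest-hit search again.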

The first step is the one that Nvidia accelerates using its RT cores, with the task being handed over from the streaming multiprocessor to the RT core. In a conventional calculation it takes the most time, because its cost grows in proportion to the number of rays and the number of polygons. Steps 2 to 4 generate several secondary rays, which is why many GigaRays/s of ray tracing performance can quickly become necessary even with only one primary ray per pixel. Trees with a high proportion of transparent textures are a particularly demanding case, as an earlier article on ray tracing clearly illustrated. The question is how the process can be accelerated efficiently and without too much loss of quality.
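
Why the first step is so expensive without acceleration can be shown with a brute-force closest-hit search in Python. The article does not name a specific intersection test; the sketch below uses the widely known Möller-Trumbore ray-triangle test as a stand-in, and the function names and the explicit test counter are assumptions for illustration.

```python
def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def dot(a, b): return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin, direction, tri, eps=1e-9):
    """Möller-Trumbore test: distance t along the ray to the hit, or None."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None                    # ray is parallel to the triangle plane
    inv = 1.0 / det
    tvec = sub(origin, v0)
    u = dot(tvec, p) * inv             # first barycentric coordinate
    if u < 0 or u > 1:
        return None
    q = cross(tvec, e1)
    v = dot(direction, q) * inv        # second barycentric coordinate
    if v < 0 or u + v > 1:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None      # only hits in front of the origin count


def closest_hit_brute_force(origin, direction, triangles):
    """Test the ray against every triangle; return (t, index, tests_performed)."""
    best_t, best_i, tests = None, None, 0
    for i, tri in enumerate(triangles):
        tests += 1
        t = ray_triangle(origin, direction, tri)
        if t is not None and (best_t is None or t < best_t):
            best_t, best_i = t, i
    return best_t, best_i, tests


if __name__ == "__main__":
    far = ((-1.0, -1.0, -5.0), (1.0, -1.0, -5.0), (0.0, 1.0, -5.0))
    near = ((-1.0, -1.0, -2.0), (1.0, -1.0, -2.0), (0.0, 1.0, -2.0))
    print(closest_hit_brute_force((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), [far, near]))
```

The number of tests per ray equals the number of triangles, so the total work is rays times triangles. With the figures used in this article, almost 500 million primary rays per second against a scene of a million polygons would mean on the order of 5 × 10^14 intersection tests per second before a single secondary ray is fired.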
