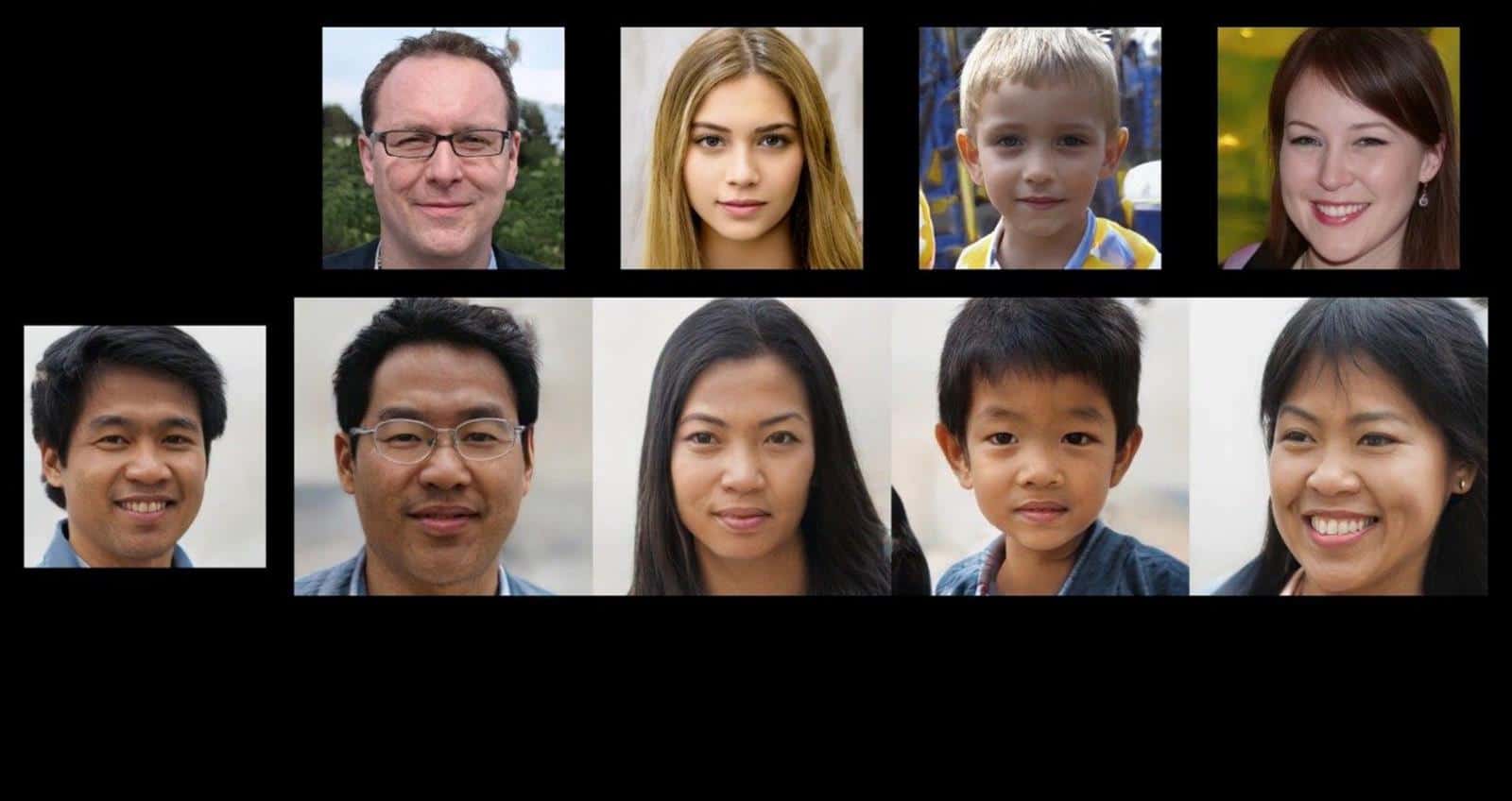

Last week, the journal Proceedings of the National Academy of Sciences (PNAS) published a study showing just how real the faces of people who never existed can look. The experiment found that faces generated by artificial intelligence fool most of us.

Faces generated by NVIDIA’s artificial intelligence are once again stoking fears of deepfakes

In this study, more than 300 participants were asked to decide whether a given photo showed a real person or a fake generated by artificial intelligence. The results are shown in the image above. The percentages give the accuracy with which participants classified AI-generated, synthetic (S) faces and real (R) faces; the top two rows show the photos that were classified most accurately, and the bottom two rows those classified least accurately.

Overall, participants answered correctly in fewer than half of the cases (48.2%). In other words, if the Internet were flooded with uncontrolled deepfakes, tossing a coin would work about as well as, or even slightly better than, your own judgment when deciding whether a person in a photo or video is real or merely an AI creation. Knowing what to look for does not help much either: even after being taught how to spot artificial photos, participants improved from 48.2% to an average of only 59% accuracy in telling real photos from fakes.


The authors write that “this feat of engineers should be considered a success in the field of computer graphics,” while at the same time calling for “consideration of whether the associated risks exceed the benefits.” Those risks cover the entire spectrum of threats, from disinformation campaigns to the generation of pornographic content.

“Perhaps the most damaging consequence is that in a digital world where any image or video can be faked, the authenticity of any inconvenient or unwanted recording can be called into question,” the researchers say.