GPUs that design GPUs with artificial intelligence: NVIDIA is doing it

Bill Dally, chief scientist and senior vice president of research at NVIDIA, outlined the artificial intelligence solutions that the company is developing and using internally to improve its products. As reported by HPC Wire, NVIDIA has begun using AI to improve and speed up the design of its GPUs.

“It is only natural, as AI experts, that we would want to take that AI and use it to design better chips. We do this in a couple of different ways. The first and most obvious is that we can take the CAD tools that we have and embed AI in them,” explains Dally. “For example, we have one that takes a map of where power is being used in our GPUs and predicts how far the supply voltage drops. Doing this with a traditional CAD tool takes three hours,” he noted.

“Because it’s a repetitive process, it becomes very problematic for us. What we would like to do instead is train an AI model to take the same data; we do this across a bunch of designs, and then we can basically feed in the power map. The resulting inference time is only 3 seconds. Of course, it rises to 18 minutes if you include the time for feature extraction.”
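To put the quoted timings side by side, here is a small arithmetic sketch. The three durations come from the quote above; the `speedup` helper and the constant names are only illustrative.

```python
# Durations quoted in the article, converted to seconds.
CAD_TOOL_S = 3 * 3600        # traditional CAD tool: three hours
INFERENCE_S = 3              # AI model, inference only
WITH_FEATURES_S = 18 * 60    # AI model including feature extraction


def speedup(baseline_s: float, new_s: float) -> float:
    """Return how many times faster the new run is than the baseline."""
    return baseline_s / new_s


print(speedup(CAD_TOOL_S, INFERENCE_S))      # 3600.0
print(speedup(CAD_TOOL_S, WITH_FEATURES_S))  # 10.0
```

In other words, inference alone is about 3,600 times faster than the three-hour run, while the end-to-end figure that includes feature extraction is about 10 times faster.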

“We can get results very quickly. Instead of using a convolutional neural network, we use a graph neural network to estimate how often different nodes in the circuit switch, and how this drives the power input of the previous example. We’re able to get very accurate power estimates in a tiny fraction of the time conventional tools need,” said Dally.
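The article does not describe NVIDIA's model, so the following is only a minimal sketch of the general idea behind a graph neural network: each circuit node updates its state from its own features and the average of the nodes that drive it, and a readout turns that state into a switching estimate between 0 and 1. The toy netlist, the two features per node, and all weights are hypothetical and untrained.

```python
import math

# Hypothetical toy netlist: each node lists the nodes that drive it.
FANIN = {
    "a": [],
    "b": [],
    "g1": ["a", "b"],
    "g2": ["g1", "b"],
}

# Hypothetical features per node: [is_primary_input, fan-in count].
FEATURES = {
    node: [1.0 if not srcs else 0.0, float(len(srcs))]
    for node, srcs in FANIN.items()
}

# Hand-picked, untrained weights; a real model would learn these from data.
W_SELF = [[0.5, 0.1], [0.0, 0.3]]
W_NBR = [[0.4, 0.0], [0.2, 0.2]]
W_OUT = [0.8, -0.5]


def matvec(matrix, vector):
    """Multiply a small matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def mean_vectors(vectors, dim):
    """Element-wise mean of the vectors, or zeros if there are none."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def message_pass(state):
    """One message-passing layer: combine own state with mean fan-in state."""
    updated = {}
    for node, srcs in FANIN.items():
        aggregated = mean_vectors([state[s] for s in srcs], 2)
        own = matvec(W_SELF, state[node])
        nbr = matvec(W_NBR, aggregated)
        updated[node] = [max(0.0, o + n) for o, n in zip(own, nbr)]  # ReLU
    return updated


def predict_switching(state):
    """Readout: squash each node's state into a value between 0 and 1."""
    return {
        node: 1.0 / (1.0 + math.exp(-sum(w * x for w, x in zip(W_OUT, vec))))
        for node, vec in state.items()
    }


hidden = message_pass(message_pass(FEATURES))  # two rounds of propagation
activity = predict_switching(hidden)
for node, value in activity.items():
    print(node, round(value, 3))
```

A graph formulation fits this problem because a netlist is naturally a graph of gates and wires rather than a regular pixel grid, which is what a convolutional network expects.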

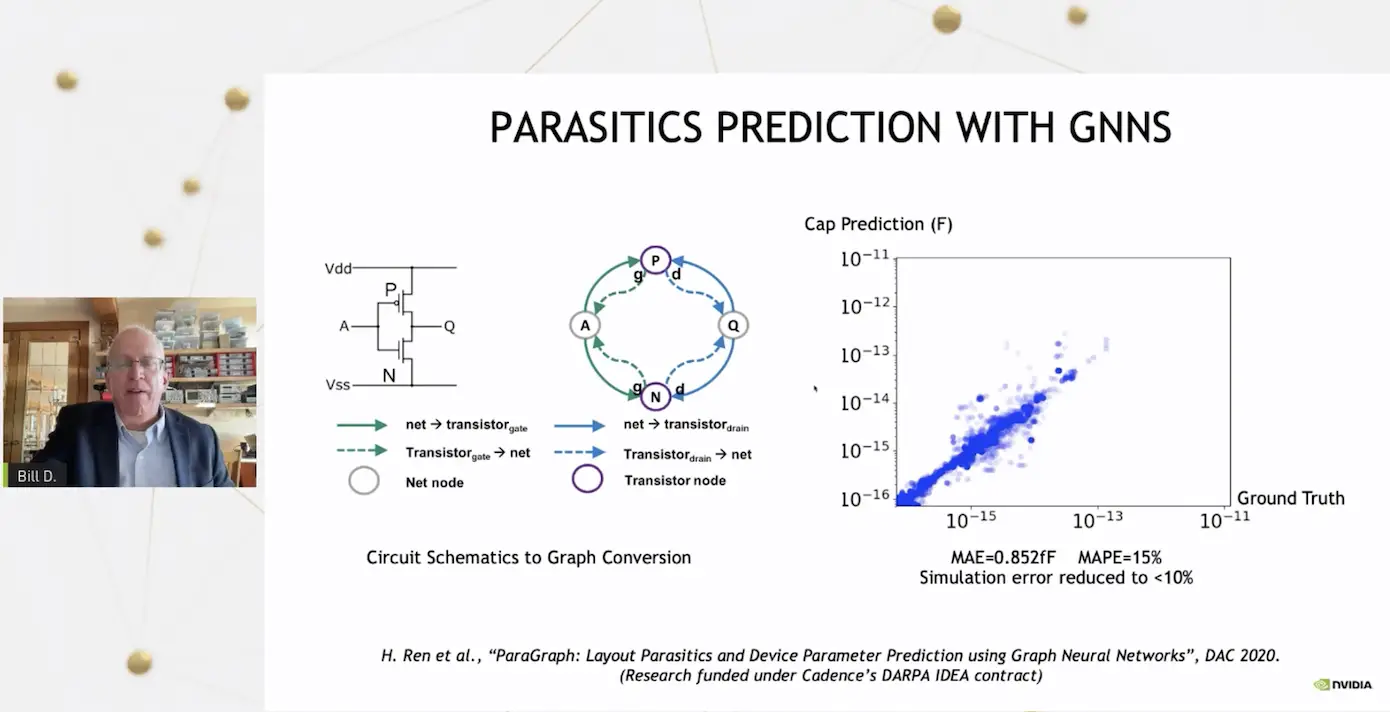

The chief scientist also described work on predicting the parasitic elements of a circuit using graph neural networks. “In the past, designing a circuit was a very repetitive process of drawing a schematic, just like the picture above with the two transistors. But you wouldn’t know how it would behave until a layout designer took that schematic and made the layout, extracted the parasitic elements, and only then could you run the circuit simulations and find that you were not meeting some specifications.”

In the worst case, you had to change the schematic many times and repeat the process, consuming a lot of time and energy. “Now what we can do is train neural networks to predict what the parasitic elements will be without having to make the layout.” NVIDIA has been able to get very accurate predictions of parasitic elements, which speeds up one step in GPU development.

Another area where NVIDIA uses AI is preventing routing congestion, that is, points where “there are too many wires trying to pass along a certain area, a sort of traffic jam for bits. What we can do is […] use a graph neural network to predict where congestion will occur quite accurately.” At the moment the technology is not perfect, but it gives a rough indication of where to act.
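To make the notion of congestion concrete, the sketch below flags grid cells where wiring demand exceeds capacity. It is not the graph neural network Dally mentions: it uses a simple bounding-box count as a stand-in, and the nets, the grid, and the `CAPACITY` value are all hypothetical.

```python
from collections import Counter

# Hypothetical nets, each given as the grid cells (x, y) of its two pins.
NETS = [((0, 0), (2, 0)), ((0, 0), (2, 1)), ((1, 0), (1, 2)), ((0, 1), (2, 1))]
CAPACITY = 2  # assumed number of wires a single grid cell can carry


def demand_map(nets):
    """Count, for each grid cell, how many net bounding boxes cover it."""
    demand = Counter()
    for (x0, y0), (x1, y1) in nets:
        for x in range(min(x0, x1), max(x0, x1) + 1):
            for y in range(min(y0, y1), max(y0, y1) + 1):
                demand[(x, y)] += 1
    return demand


def congested_cells(nets, capacity):
    """Return the cells whose estimated demand exceeds the capacity."""
    return sorted(cell for cell, d in demand_map(nets).items() if d > capacity)


print(congested_cells(NETS, CAPACITY))  # [(1, 0), (1, 1)]
```

A learned predictor aims to produce this kind of hotspot map before routing is actually run, so designers know early which regions need attention.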

NVIDIA, however, does not use AI only to optimize designs made by humans. Another area of research is using AI to do the design itself. The first system, called NVCell, uses a combination of simulated annealing and reinforcement learning to design the cell library that is needed, for example, when moving a design from the 7-nanometer to the 5-nanometer process. “That’s many thousands of cells that need to be redesigned for the new process with a very complex set of design rules,” Dally explained.

“Basically we do this by using reinforcement learning to place the transistors. But more importantly, after they are placed, there are usually a lot of design rule errors, and it becomes almost like a video game, something reinforcement learning is good at. […] By correcting these errors with reinforcement learning, we are able to fundamentally complete the design of our standard cells.”
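NVCell's internals are not given in the article, so the following only illustrates simulated annealing, one of the two techniques named, on a toy problem: ordering five transistors in a row so that connected ones sit close together. The connections, the wirelength cost, and the cooling schedule are assumptions made for the example, and the reinforcement learning part is not shown.

```python
import math
import random

# Hypothetical connections between transistors (by index) in one cell.
NETS = [(0, 3), (1, 2), (2, 4), (0, 4), (1, 3)]


def wirelength(order, nets=NETS):
    """Total distance between connected transistors placed in a 1-D row."""
    pos = {t: i for i, t in enumerate(order)}
    return sum(abs(pos[a] - pos[b]) for a, b in nets)


def anneal(order, steps=2000, t_start=2.0, t_end=0.01, seed=0):
    """Swap-based simulated annealing with a geometric cooling schedule."""
    rng = random.Random(seed)
    current = list(order)
    cost = wirelength(current)
    best, best_cost = list(current), cost
    for step in range(steps):
        temp = t_start * (t_end / t_start) ** (step / steps)
        i, j = rng.sample(range(len(current)), 2)
        current[i], current[j] = current[j], current[i]
        new_cost = wirelength(current)
        # Always accept improvements; accept worse moves with a probability
        # that shrinks as the temperature drops.
        if new_cost <= cost or rng.random() < math.exp((cost - new_cost) / temp):
            cost = new_cost
            if cost < best_cost:
                best, best_cost = list(current), cost
        else:
            current[i], current[j] = current[j], current[i]  # undo the swap
    return best, best_cost


start = [0, 1, 2, 3, 4]
placement, cost = anneal(start)
print("start cost:", wirelength(start))
print("annealed placement:", placement, "cost:", cost)
```

Occasionally accepting a worse swap is what lets the search escape local minima early on; as the temperature falls, the procedure behaves more and more like plain greedy improvement.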

In one test, the solution was able to correctly build 92% of the cell library with no design rule or electrical rule errors. “On the one hand this is a huge labor saving; on the other, in many cases, we get a better design.” This job, done by 10 people, would keep them busy for a good part of the year. “Now we can do it with a couple of GPUs running for a few days. Human beings can then work on the 8% of the cells that were not done automatically.”