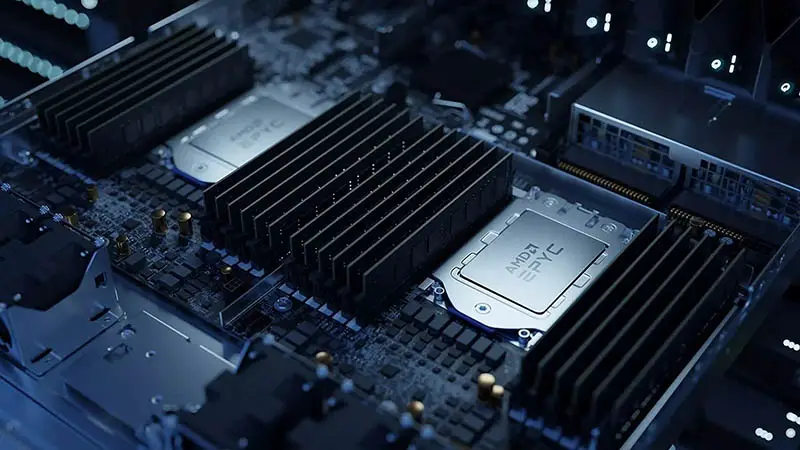

EPYC processors have been a resounding success for AMD thanks to their high core counts, support for eight channels of RAM, and impressive I/O with up to 128 PCI-Express 4.0 lanes. The latest company to adopt them is Netflix, which chose them because a single system can stream up to 400 Gb/s of video, something that no competing alternative could achieve.

The details of these new servers come from Netflix Senior Software Engineer Drew Gallatin, who, at EuroBSDCon 2021, walked through how the team chose the components and configured the software to reach this 400 Gb/s data rate, and listed the final components fitted to each system to hit this remarkable figure.

According to Gallatin, after a long selection process the company chose to build each system around an AMD EPYC 7502P processor with 32 cores and 64 threads based on the Zen 2 architecture, 256 GB of DDR4 memory at 3200 MHz, 18 Western Digital SN720 data-center NVMe SSDs (M.2, PCI-E 3.0), and two Nvidia Mellanox ConnectX-6 Dx network adapters with PCI-E 4.0 x16 connectivity, each supporting two 100 Gb/s connections, for a total of 400 Gb/s across the two adapters.
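As a quick sanity check of the figures above, the following Python sketch works out the aggregate network bandwidth, compares each adapter's 200 Gb/s against the raw rate of a PCI-E 4.0 x16 link, and tallies the PCI-E lanes in use. It assumes, beyond what the article states, that each SN720 sits on its own x4 link and that PCI-E 4.0 runs at 16 GT/s per lane with 128b/130b encoding; it ignores packet and protocol overhead, so real usable bandwidth is somewhat lower.

```python
# Back-of-the-envelope check of the hardware figures quoted in the article.
# Assumptions (not from the talk): each SN720 gets its own PCIe x4 link, and
# PCIe 4.0 runs at 16 GT/s per lane with 128b/130b line encoding.

NIC_COUNT = 2         # ConnectX-6 Dx adapters
PORTS_PER_NIC = 2     # 100 Gb/s ports per adapter
PORT_GBPS = 100

SSD_COUNT = 18        # WD SN720 drives
LANES_PER_SSD = 4     # assumed x4 link per drive
LANES_PER_NIC = 16    # PCIe 4.0 x16 per adapter
CPU_LANES = 128       # PCIe 4.0 lanes available on the EPYC


def pcie4_gbps(lanes: int) -> float:
    """Raw PCIe 4.0 link rate in Gb/s after 128b/130b encoding (no protocol overhead)."""
    return 16 * lanes * 128 / 130


network_gbps = NIC_COUNT * PORTS_PER_NIC * PORT_GBPS
per_nic_gbps = PORTS_PER_NIC * PORT_GBPS
lanes_used = NIC_COUNT * LANES_PER_NIC + SSD_COUNT * LANES_PER_SSD

print(f"aggregate network: {network_gbps} Gb/s")
print(f"per-NIC demand {per_nic_gbps} Gb/s vs x16 link {pcie4_gbps(16):.1f} Gb/s")
print(f"PCIe lanes used: {lanes_used} of {CPU_LANES}")
```

Under these assumptions the two adapters and 18 drives use 104 of the 128 lanes, and each x16 slot offers roughly 252 Gb/s of raw bandwidth for 200 Gb/s of network traffic.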

With the hardware ready, the next problem was the software and BIOS configuration, since by default this system only reached a maximum of 240 Gb/s. The first step was to adjust the NUMA (Non-Uniform Memory Access) configuration: in the default setup the Infinity Fabric became saturated and formed a bottleneck that capped the bandwidth. The system was therefore configured with four NUMA nodes, which splits the processor's dies into four groups, each working mainly with its own local memory so that data does not have to travel across the Infinity Fabric; this avoids the bottleneck that appears when every die is constantly competing for the fabric. This change raised the bandwidth to 280 Gb/s, an improvement, but still far from the 400 Gb/s the company was aiming for, so the work was not over.
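To illustrate why splitting the chip into four NUMA nodes helps, here is a toy Python model (hypothetical, not Netflix's code) of 16 connections spread over four nodes. Each connection is served by a NIC port attached to one node while its data sits in memory on some node; whenever the two differ, the bytes have to cross the Infinity Fabric. The "naive" placement is an arbitrary even spread chosen purely for illustration.

```python
# Toy model of NUMA-aware placement with four nodes.
# Hypothetical: each connection is served by a NIC port on one node, and its
# video data sits in memory on one node. A mismatch means the data must cross
# the Infinity Fabric between node groups.

NUMA_NODES = 4


def fabric_crossing_fraction(placements: list[tuple[int, int]]) -> float:
    """Fraction of connections whose NIC node differs from their memory node."""
    crossing = sum(1 for nic_node, mem_node in placements if nic_node != mem_node)
    return crossing / len(placements)


connections = range(16)

# Naive: data ends up on whichever node allocated it (here, an even spread).
naive = [(c % NUMA_NODES, (c // NUMA_NODES) % NUMA_NODES) for c in connections]

# NUMA-local: a connection's data is kept on the same node as its NIC port.
local = [(c % NUMA_NODES, c % NUMA_NODES) for c in connections]

print(f"naive placement: {fabric_crossing_fraction(naive):.0%} of connections cross the fabric")
print(f"NUMA-local placement: {fabric_crossing_fraction(local):.0%}")
```

In this toy setup three out of four connections cross the fabric under naive placement, while NUMA-local placement removes that cross-node traffic entirely, which is the effect the four-node configuration aims to approximate.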

After studying what kept the system from going past 280 Gb/s, Netflix engineers noticed that most of the CPU time was spent on TLS encryption, a task that the Mellanox network cards in this system can perform themselves, so they offloaded it to the cards, and that is where the magic happened. With this change, throughput rose to 380 Gb/s, close to the 400 Gb/s target, which they finally reached after a series of software and BIOS adjustments that were not detailed, hitting the maximum limit of the network cards and therefore of the system.
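The throughput figures reported so far can be put side by side to see how much each step contributed. This small Python sketch only reuses the article's numbers (240, 280, 380 and 400 Gb/s); the labels are shorthand chosen here, and the last entry stands for the undisclosed software and BIOS adjustments.

```python
# Throughput milestones from the article, in Gb/s (labels are shorthand).
MILESTONES = [
    ("default configuration", 240),
    ("four NUMA nodes", 280),
    ("TLS offloaded to NICs", 380),
    ("undisclosed tweaks", 400),
]


def gains(milestones: list[tuple[str, int]]) -> list[tuple[str, int, float, float]]:
    """Return (label, Gb/s, gain over previous step, gain over first entry)."""
    baseline = milestones[0][1]
    previous = baseline
    rows = []
    for label, gbps in milestones:
        rows.append((label, gbps, gbps / previous - 1, gbps / baseline - 1))
        previous = gbps
    return rows


for label, gbps, step, total in gains(MILESTONES):
    print(f"{label:22s} {gbps:4d} Gb/s  step {step:+6.1%}  vs default {total:+6.1%}")
```

Run as is, it shows the TLS offload as the largest single jump, about +35.7% over the NUMA-tuned result, versus +16.7% for the NUMA change and +5.3% for the final adjustments.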

With these 400 Gb/s systems ready, Netflix's next goal is to reach 800 Gb/s per system, although the necessary components did not arrive in time for EuroBSDCon 2021, so the team has not yet been able to run tests and cannot share any results. It will be interesting to see how they achieve this ambitious goal of 800 Gb/s per system, since reaching 400 Gb/s involved several complications, and doubling that data rate will not be an easy task.

What do you think of these impressive AMD EPYC-based Netflix servers? Do you think they will soon reach 800 Gb/s on their new servers?

Source: TechSpot