Today, Microsoft announced a new version of its gaming and multimedia API platform, DirectX. The new version, DirectX 12 Ultimate, largely unifies Windows PCs with the upcoming Xbox Series X platform, bringing that platform’s new precision rendering features to Windows gamers with compatible video cards.

Many of the new features have more to do with the software development side than the hardware. New DirectX 12 Ultimate API calls not only allow access to new hardware features but also offer deeper, lower-level, and potentially more efficient access to hardware features and resources that are already present.

For now, the new features are largely Nvidia-only, with “full support on GeForce RTX.” The presentation these slides come from was actually produced by Nvidia, not Microsoft. Meanwhile, AMD has announced that its upcoming RDNA 2 GPU lineup will have “full support” for the DirectX 12 Ultimate API, though previous-generation AMD cards will not. (AMD took the opportunity to remind gamers that the same RDNA 2 architecture powers Microsoft’s Xbox Series X and Sony’s PlayStation 5 consoles.)

Some of the new calls recall work that AMD has done independently in its Radeon drivers. For example, variable rate shading seems similar to AMD’s Radeon Boost system, which dynamically reduces frame resolution during fast panning. While these features are certainly not the same, they are similar enough in concept to show that AMD has at least been thinking along similar lines.

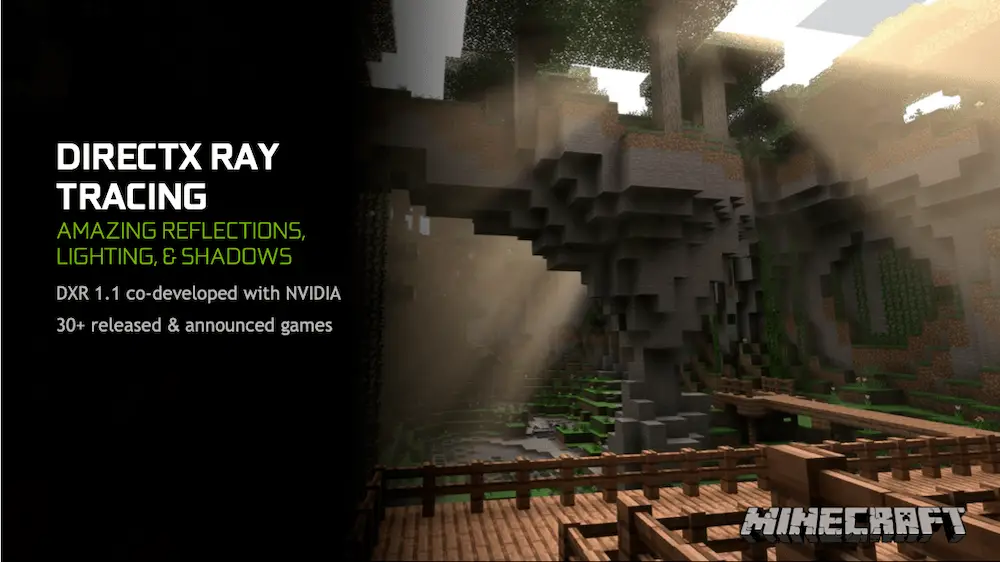

DirectX Ray Tracing

DirectX Ray Tracing, also known as DXR, is not new: DXR 1.0 was introduced two years ago. However, DirectX 12 Ultimate introduces several new features under the DXR 1.1 version number. None of the DXR 1.1 features require new hardware; existing GPUs with ray tracing capabilities simply need driver support to enable them.

At this time, only Nvidia offers consumer PC graphics cards with hardware ray tracing. However, the Xbox Series X will offer ray tracing on its custom Radeon GPU hardware, and AMD CEO Lisa Su said at CES 2020 in January that she expects discrete Radeon graphics cards with ray tracing support “as we go through 2020.”

Inline Ray Tracing

Inline ray tracing is an alternative API that gives developers lower-level access to the ray tracing pipeline than DXR 1.0’s dynamic-shader-based ray tracing. Rather than replacing dynamic-shader ray tracing, inline ray tracing is offered as an alternative model that lets developers make inexpensive ray tracing calls without the full overhead of a dynamic shader call. Examples include constrained shadow calculations, queries from shaders that don’t support dynamic-shader rays, and simple recursive rays.

There is no simple answer to when inline ray tracing is more appropriate than dynamic ray tracing; developers will need to experiment to find the best balance between the two sets of tools.
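To make the distinction concrete, here is a CPU-side Python analogy, not DirectX code. In HLSL, inline ray tracing is exposed through a RayQuery object whose traversal is driven by the calling shader itself. The sketch below mimics that shape for a shadow ray: it walks candidate occluders in the caller and stops at the first hit, the way RAY_FLAG_ACCEPT_FIRST_HIT_AND_END_SEARCH would. The Sphere type and the function names are hypothetical, and the linear loop stands in for real acceleration-structure traversal.

```python
import math
from dataclasses import dataclass


@dataclass
class Sphere:
    center: tuple[float, float, float]
    radius: float


def ray_sphere_t(origin, direction, sphere):
    """Nearest positive hit distance along a unit-length ray, or None on a miss."""
    oc = [o - c for o, c in zip(origin, sphere.center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - sphere.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:
            return t
    return None


def inline_shadow_query(origin, direction, max_t, occluders):
    """Visibility test driven entirely by the caller, as with an inline query.

    Stops at the first occluder closer than max_t (like ACCEPT_FIRST_HIT_AND_END_SEARCH);
    no hit or miss shader is invoked. Returns (occluded, candidates_tested).
    """
    tested = 0
    for sphere in occluders:
        tested += 1
        t = ray_sphere_t(origin, direction, sphere)
        if t is not None and t < max_t:
            return True, tested  # occluded: end the search immediately
    return False, tested


# Surface point at the origin, light 10 units straight up.
blockers = [Sphere((0.0, 5.0, 0.0), 1.0), Sphere((5.0, 5.0, 0.0), 1.0)]
print(inline_shadow_query((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), 10.0, blockers))  # (True, 1)
```

A shadow test only needs a yes-or-no answer. That makes it a natural fit for the inline model, because no hit or miss shader has to run.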

DispatchRays() via ExecuteIndirect()

Shaders running on the GPU can now generate a list of DispatchRays() calls, including their individual parameters. This can significantly reduce latency in scenarios that prepare and immediately spawn ray tracing work on the GPU, because it eliminates a round trip to the CPU and back.
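Here is a rough Python model of the data flow, not D3D12 code. One "GPU" pass decides which screen tiles need ray work and writes argument records plus a count into a buffer. The indirect executor then consumes that buffer directly. DispatchRaysArgs is a hypothetical, simplified stand-in for D3D12_DISPATCH_RAYS_DESC that models only the grid size. All function names are assumptions.

```python
from dataclasses import dataclass


@dataclass
class DispatchRaysArgs:
    # Hypothetical, simplified stand-in for D3D12_DISPATCH_RAYS_DESC:
    # only the dispatch grid size is modeled, not the shader tables.
    width: int
    height: int
    depth: int = 1


def gpu_build_arguments(tile_needs_rays, tile_size):
    """'GPU' pass: write one argument record per tile that needs rays, plus a count."""
    arg_buffer = [DispatchRaysArgs(tile_size, tile_size) for needs in tile_needs_rays if needs]
    return arg_buffer, len(arg_buffer)


def execute_indirect(arg_buffer, count, dispatch_rays):
    """Consume the GPU-written records directly; the CPU never reads them back."""
    return sum(dispatch_rays(args) for args in arg_buffer[:count])


def count_rays(args):
    # Stand-in for launching work: one ray-generation invocation per grid cell.
    return args.width * args.height * args.depth


args, count = gpu_build_arguments([True, False, True, False], tile_size=8)
print(count, execute_indirect(args, count, count_rays))  # 2 128
```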

Growing state objects through AddToStateObject()

Under DXR 1.0, if developers wanted to add a new shader to an existing ray tracing pipeline, they had to instantiate an entirely new pipeline, copying the existing shaders into it along with the new shader. This required the system to parse and validate all of the existing shaders, as well as the new one, when instantiating the new pipeline.

AddToStateObject() eliminates this waste by doing exactly what it sounds like: it lets developers grow an existing ray tracing pipeline instead, so only the new shader has to be parsed and validated. The efficiency gain should be obvious. A 1,000-shader pipeline that needs one new shader now validates only that shader, instead of all 1,001.
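The arithmetic above can be checked with a toy model that counts how many shaders each approach has to validate. RayTracingPipeline, rebuild_with and add_to_state_object are hypothetical names. The real AddToStateObject() is a method on the D3D12 device and operates on state objects, not Python lists.

```python
class RayTracingPipeline:
    """Toy stand-in for a ray tracing state object that counts shader validations."""

    def __init__(self, shaders=()):
        self.shaders = []
        self.validations = 0
        for shader in shaders:
            self._validate(shader)
            self.shaders.append(shader)

    def _validate(self, shader):
        # Stands in for parsing and validating one shader.
        self.validations += 1


def rebuild_with(old, new_shader):
    """DXR 1.0 approach: build a brand-new pipeline from every old shader plus the new one."""
    return RayTracingPipeline(old.shaders + [new_shader])


def add_to_state_object(old, new_shader):
    """DXR 1.1 approach: grow from the existing pipeline, validating only the addition."""
    grown = RayTracingPipeline()
    grown.shaders = old.shaders + [new_shader]
    grown._validate(new_shader)
    return grown


base = RayTracingPipeline(f"hit_shader_{i}" for i in range(1000))
print(rebuild_with(base, "new_shader").validations)         # 1001
print(add_to_state_object(base, "new_shader").validations)  # 1
```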

GeometryIndex() in Ray Tracing Shaders

GeometryIndex() lets shaders distinguish between geometries within bottom-level acceleration structures without having to change the data in the shader records for each geometry. In other words, all geometries in a bottom-level acceleration structure can now share the same shader record. When necessary, shaders can use GeometryIndex() to index into the application’s own data structures.
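Here is a minimal sketch of the idea. It assumes a bottom-level structure with four geometries and an application-owned material table. A single shared closest-hit routine uses the geometry index to pick per-geometry data, so the shader table needs one record instead of four. Hit, MATERIALS and shared_closest_hit are hypothetical; in HLSL, the index would come from the GeometryIndex() intrinsic.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    instance_id: int     # which instance of the bottom-level structure was hit
    geometry_index: int  # which geometry inside it, as GeometryIndex() would report


# Hypothetical application-owned table: one material per geometry in the
# bottom-level acceleration structure (say, the parts of a car model).
MATERIALS = ["car_paint", "glass", "chrome", "rubber"]


def shared_closest_hit(hit):
    """One shader record serves every geometry; the geometry index picks the data."""
    return MATERIALS[hit.geometry_index]


print([shared_closest_hit(Hit(instance_id=7, geometry_index=g)) for g in range(4)])
# ['car_paint', 'glass', 'chrome', 'rubber']
```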

Developers can also optimize ray tracing pipelines by using ray flags to skip unnecessary primitives and shader invocations. For example, DXR 1.0 offers RAY_FLAG_SKIP_CLOSEST_HIT_SHADER, RAY_FLAG_CULL_NON_OPAQUE, and RAY_FLAG_ACCEPT_FIRST_HIT_AND_END_SEARCH.

DXR 1.1 adds RAY_FLAG_SKIP_TRIANGLES and RAY_FLAG_SKIP_PROCEDURAL_PRIMITIVES.
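The flags are bit masks combined with bitwise OR. The sketch below assumes the numeric values match HLSL's RAY_FLAG_* constants as published in the DXR specification; verify them before relying on them. A hypothetical candidates_to_test() filter shows what each flag removes from consideration, whereas real traversal happens in hardware.

```python
from enum import IntFlag


class RayFlag(IntFlag):
    # Values assumed to match HLSL's RAY_FLAG_* constants; verify against the DXR spec.
    ACCEPT_FIRST_HIT_AND_END_SEARCH = 0x04
    SKIP_CLOSEST_HIT_SHADER = 0x08
    CULL_NON_OPAQUE = 0x80
    SKIP_TRIANGLES = 0x100              # added in DXR 1.1
    SKIP_PROCEDURAL_PRIMITIVES = 0x200  # added in DXR 1.1


def candidates_to_test(primitives, flags):
    """Toy filter: which (kind, is_opaque) primitives traversal would still consider."""
    kept = []
    for kind, opaque in primitives:
        if kind == "triangle" and flags & RayFlag.SKIP_TRIANGLES:
            continue
        if kind == "procedural" and flags & RayFlag.SKIP_PROCEDURAL_PRIMITIVES:
            continue
        if not opaque and flags & RayFlag.CULL_NON_OPAQUE:
            continue
        kept.append((kind, opaque))
    return kept


scene = [("triangle", True), ("procedural", True), ("triangle", False)]
print(candidates_to_test(scene, RayFlag.SKIP_TRIANGLES))
# [('procedural', True)]
print(candidates_to_test(scene, RayFlag.SKIP_PROCEDURAL_PRIMITIVES | RayFlag.CULL_NON_OPAQUE))
# [('triangle', True)]
```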

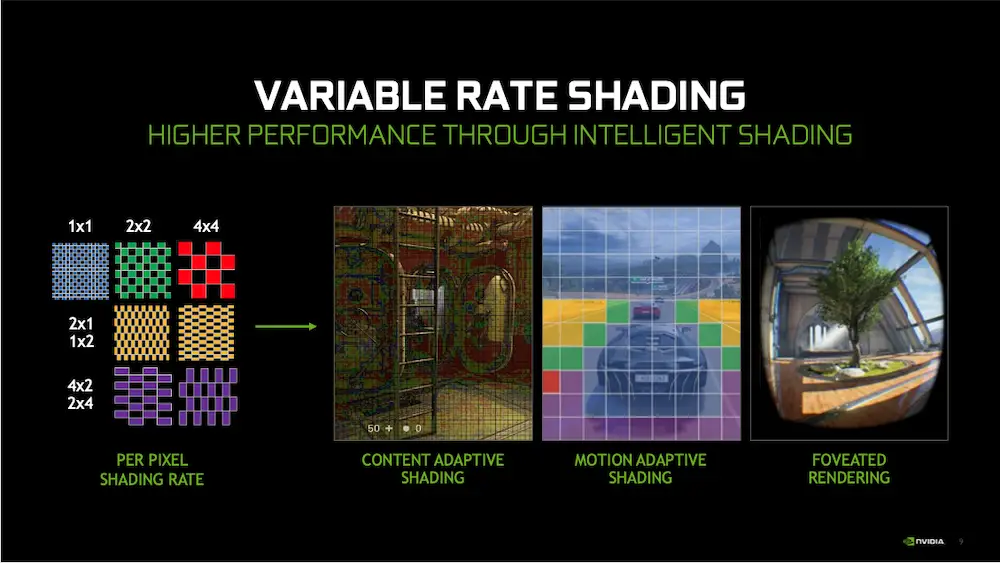

Variable Rate Shading (VRS)

Variable rate shading (VRS) bills itself as “a scalpel in a world of sledgehammers.” VRS lets developers choose the shading rate for portions of the frame independently. This concentrates detail and rendering workload on the parts that actually need it, while the background and visually unimportant elements render more cheaply.

There are two hardware tiers of VRS support. Tier 1 hardware can set shading rates per draw call. This lets developers draw large, distant, or dimly lit assets with lower shading detail, then draw detailed assets with higher shading detail. Tier 2 hardware can also vary the shading rate within a draw call, using a screen-space shading rate image or per-primitive rates.

If you know that a first-person shooter player will pay more attention to their crosshair than to anywhere else, you can have maximum shading detail in that area, falling off gradually to the lowest shading detail in peripheral vision.

A real-time strategy or RPG developer, on the other hand, might choose to focus extra shading detail on edge boundaries, where aliasing artifacts are more likely to be noticeable.
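The crosshair example maps naturally onto a screen-space shading rate image, which stores one rate per screen tile. The Python sketch below builds such an image with full rate near the crosshair and coarser rates farther out. The tile size, the two radii and the string encoding of rates are assumptions for illustration. On real hardware, the device reports the tile size, and the image is a texture of D3D12_SHADING_RATE values.

```python
import math


def foveated_rate_image(width, height, tile, center, inner, outer):
    """Per-tile shading-rate image: full rate near `center`, coarser farther out.

    `tile` is the screen-space tile size in pixels; the two radii (in pixels) are
    illustrative thresholds, not values from any API.
    """
    cols, rows = math.ceil(width / tile), math.ceil(height / tile)
    image = []
    for r in range(rows):
        row = []
        for c in range(cols):
            # Distance from this tile's center to the crosshair.
            d = math.hypot((c + 0.5) * tile - center[0], (r + 0.5) * tile - center[1])
            if d <= inner:
                row.append("1x1")  # full shading detail
            elif d <= outer:
                row.append("2x2")  # one shade per 2x2 pixel block
            else:
                row.append("4x4")  # one shade per 4x4 pixel block
        image.append(row)
    return image


for row in foveated_rate_image(64, 64, tile=16, center=(32, 32), inner=16, outer=32):
    print(" ".join(row))
# 4x4 2x2 2x2 4x4
# 2x2 1x1 1x1 2x2
# 2x2 1x1 1x1 2x2
# 4x4 2x2 2x2 4x4
```

The RTS-style variant would follow the same pattern, with the distance test replaced by some measure of how much edge detail each tile contains.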

Per-primitive VRS takes things a step further by letting developers specify the shading rate per triangle. An obvious use case is games with motion blur effects: why bother rendering detailed shadows on distant objects if you know you’re going to blur them anyway?

Screen-space and per-primitive variable rate shading can be mixed and matched within the same scene using VRS combiners.
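The following sketches how the sources are merged. D3D12 takes a per-draw base rate plus two combiners. The first combiner merges the base rate with the per-primitive rate, and the second merges that result with the screen-space image. The model below represents rates as (log2 width, log2 height) pairs and applies min, max and sum per axis. That representation and the function names are simplifications. The model also does not reject rate combinations that D3D12 disallows, such as 1x4.

```python
def combine(a, b, op):
    """Apply one VRS combiner to two rates given as (log2 width, log2 height) pairs.

    Mirrors the PASSTHROUGH/OVERRIDE/MIN/MAX/SUM combiner names; per-axis math is a
    simplification and invalid results such as 1x4 are not rejected here.
    """
    if op == "passthrough":
        return a
    if op == "override":
        return b
    if op == "min":
        return (min(a[0], b[0]), min(a[1], b[1]))
    if op == "max":
        return (max(a[0], b[0]), max(a[1], b[1]))
    if op == "sum":
        # Add the exponents per axis, clamping at 4 pixels (log2 = 2).
        return (min(a[0] + b[0], 2), min(a[1] + b[1], 2))
    raise ValueError(f"unknown combiner: {op}")


def final_rate(per_draw, per_primitive, screen_space, combiners):
    # Combiner 0 merges the per-draw rate with the per-primitive rate;
    # combiner 1 merges that result with the screen-space image entry.
    return combine(combine(per_draw, per_primitive, combiners[0]), screen_space, combiners[1])


FULL, HALF, QUARTER = (0, 0), (1, 1), (2, 2)  # 1x1, 2x2, 4x4

# A motion-blurred triangle tagged 4x4, drawn under the full-rate crosshair region.
print(final_rate(FULL, QUARTER, FULL, ("override", "max")))  # (2, 2): stays coarse
```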

Mesh and Amplification Shaders

Mesh shaders allow greater parallelization of the shading pipeline, and an amplification shader is essentially a collection of mesh shaders with shared access to the parent object’s data. Mesh shaders parallelize mesh processing using a compute programming model. Pieces of the overall mesh are separated into “meshlets,” each of which typically consists of 200 vertices or fewer. Individual meshlets can be processed simultaneously instead of sequentially.

Mesh shaders dispatch a set of thread groups, each of which processes a different meshlet. Each thread group can access groupshared memory and can output vertices and primitives that need not map to a specific thread in the group.

This greatly reduces rendering latency, particularly for geometries with linear bottlenecks. It also allows developers much more granular control over separate pieces of the overall mesh rather than having to treat all geometry as a whole.
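The meshlet split itself is usually done ahead of time. Below is a minimal greedy partitioner in Python. It walks an indexed triangle list and starts a new meshlet whenever adding a triangle would exceed the vertex or triangle budget. The default budgets of 64 vertices and 126 triangles are an assumption, based on a commonly recommended size; D3D12 caps a mesh shader group at 256 output vertices and 256 primitives. The function name and output layout are hypothetical.

```python
def build_meshlets(triangles, max_vertices=64, max_triangles=126):
    """Greedily split an indexed triangle list into meshlets.

    Each meshlet stores the global vertex ids it uses plus triangles whose indices
    point into that local vertex list. Budgets are illustrative defaults.
    """
    meshlets = []
    verts, local, tris = [], {}, []

    for tri in triangles:
        unique = list(dict.fromkeys(tri))  # distinct vertices, in order
        new = [v for v in unique if v not in local]
        if tris and (len(verts) + len(new) > max_vertices or len(tris) + 1 > max_triangles):
            meshlets.append({"vertices": verts, "triangles": tris})  # close current meshlet
            verts, local, tris = [], {}, []
            new = unique
        for v in new:
            local[v] = len(verts)
            verts.append(v)
        tris.append(tuple(local[v] for v in tri))

    if tris:
        meshlets.append({"vertices": verts, "triangles": tris})
    return meshlets


strip = [(0, 1, 2), (1, 3, 2), (2, 3, 4), (3, 5, 4)]
for m in build_meshlets(strip, max_vertices=4):
    print(m)
# {'vertices': [0, 1, 2, 3], 'triangles': [(0, 1, 2), (1, 3, 2)]}
# {'vertices': [2, 3, 4, 5], 'triangles': [(0, 1, 2), (1, 3, 2)]}
```

Shared vertices (2 and 3 here) are duplicated across meshlets. That duplication is the price of letting each meshlet be processed independently.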

Amplification shaders are essentially collections of mesh shaders managed and instantiated as one. An amplification shader dispatches groups of mesh shader threads, each of which has access to the amplification shader’s data.
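Here is a toy model of that relationship. The amplification stage tests each meshlet's bounding sphere against a single clipping plane and passes the surviving meshlet ids along as a shared payload. Each mesh "group" it launches then reads its id from that payload. In HLSL, this corresponds roughly to an amplification shader calling DispatchMesh() with a payload. The one-plane culling test and the function names are assumptions.

```python
def amplification_stage(meshlet_bounds, plane):
    """Toy amplification stage: keep meshlets whose bounding sphere is not entirely
    behind one clipping plane, and return their ids as the payload shared by the
    mesh groups it launches.

    meshlet_bounds holds (cx, cy, cz, radius); plane is (nx, ny, nz, d) with a
    unit normal pointing toward the visible side.
    """
    nx, ny, nz, d = plane
    payload = []
    for meshlet_id, (cx, cy, cz, radius) in enumerate(meshlet_bounds):
        signed_distance = nx * cx + ny * cy + nz * cz + d
        if signed_distance >= -radius:  # at least partly on the visible side
            payload.append(meshlet_id)
    return payload


def mesh_stage(payload, meshlets):
    """One mesh 'group' per payload entry; each reads its meshlet id from the payload."""
    return [meshlets[meshlet_id] for meshlet_id in payload]


bounds = [(0, 0, 5, 1), (0, 0, -5, 1), (0, 0, 0.5, 1)]
payload = amplification_stage(bounds, plane=(0, 0, 1, 0))  # keep the z >= 0 side
print(payload, mesh_stage(payload, ["meshlet_a", "meshlet_b", "meshlet_c"]))
# [0, 2] ['meshlet_a', 'meshlet_c']
```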

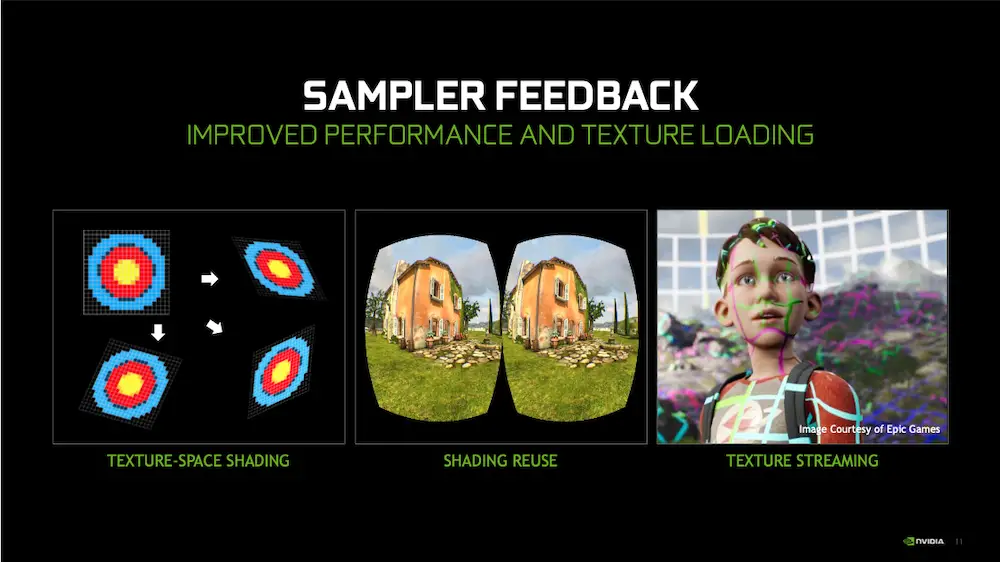

Sampler Feedback

Sampler feedback essentially makes it easier for developers to figure out at what level of detail to render textures on the fly. With this feature, a shader can query which part of a texture would be needed to satisfy a sample request without actually performing the sample operation. This allows games to render larger, more detailed textures while using less video memory.
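One way to picture what sampler feedback records is a "minimum mip" map. The Python sketch below assumes a texture divided into a coarse grid of regions and a list of sample requests given as (u, v, level of detail). For each region, it records the most detailed mip level any request needed, without fetching a single texel. It then estimates how many texels a streaming system would have to load. The region grid, request format and function names are hypothetical; D3D12 stores this information in feedback-map resources written from HLSL.

```python
import math


def record_feedback(requests, regions_per_side):
    """Toy 'min mip' feedback map.

    For each coarse region of the texture, remember the most detailed (lowest-numbered)
    mip level any request needed. Requests are (u, v, lod); no texels are fetched.
    """
    feedback = {}
    for u, v, lod in requests:
        region = (min(int(u * regions_per_side), regions_per_side - 1),
                  min(int(v * regions_per_side), regions_per_side - 1))
        mip = max(0, math.floor(lod))
        feedback[region] = min(mip, feedback.get(region, mip))
    return feedback


def texels_to_load(feedback, texture_size, regions_per_side):
    """Texels needed to make each touched region resident at its requested mip
    (coarser mips in the tail are ignored for simplicity)."""
    region_size = texture_size // regions_per_side
    return sum((region_size >> mip) ** 2 for mip in feedback.values())


fb = record_feedback([(0.1, 0.1, 0.0), (0.2, 0.3, 2.3), (0.9, 0.9, 3.7)], regions_per_side=2)
print(fb)                                                        # {(0, 0): 0, (1, 1): 3}
print(texels_to_load(fb, texture_size=1024, regions_per_side=2))  # 266240
```

For a 1024x1024 texture, touching one region at mip 0 and another at mip 3 needs 266,240 texels. The full top mip would need 1,048,576, and the two untouched regions are never loaded at all.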

Texture-space shading (TSS) expands on the sampler feedback technique by allowing the game to apply shading effects to a texture independently of the object the texture is wrapped around. For example, a cube with only three faces visible does not need lighting effects applied to the three rear faces.

Using TSS, lighting effects can be applied only to the visible parts of the texture. In our cube example, this could mean lighting only the part of the texture that wraps around the three visible faces, computed in texture space. This can be done before and independently of rasterization, reducing aliasing and minimizing the computational expense of lighting effects.
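The cube example can be sketched in Python in three steps. First, determine which faces point toward the camera. Next, evaluate lighting once per texel for those faces' regions of the texture only. Finally, leave rasterization to sample the result later. The face table, the flat Lambert lighting term, the per-face texel count and the function names are assumptions made for illustration.

```python
# Outward normals of a unit cube's six faces; each face owns one region of the texture.
FACES = {
    "+x": (1, 0, 0), "-x": (-1, 0, 0),
    "+y": (0, 1, 0), "-y": (0, -1, 0),
    "+z": (0, 0, 1), "-z": (0, 0, -1),
}


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def visible_faces(view_dir):
    """Faces whose normal points back toward a camera looking along view_dir."""
    return [name for name, normal in FACES.items() if dot(normal, view_dir) < 0]


def shade_in_texture_space(view_dir, texels_per_face, light_dir):
    """Evaluate lighting once per texel, but only in visible faces' texture regions.

    Uses a flat Lambert term (light_dir points toward the light), so every texel of a
    face gets the same value; rasterization would later just sample these results.
    """
    shaded = {}
    for name in visible_faces(view_dir):
        intensity = max(0.0, float(dot(FACES[name], light_dir)))
        shaded[name] = [intensity] * texels_per_face
    return shaded


lit = shade_in_texture_space(view_dir=(-1, -1, -1), texels_per_face=16, light_dir=(0, 1, 0))
print(sorted(lit), sum(len(texels) for texels in lit.values()))  # ['+x', '+y', '+z'] 48
```

Viewed from a corner, three faces are visible, so with 16 texels per face only 48 of the cube's 96 texels are shaded.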