Review and testing of the NVIDIA GeForce RTX 2080 video card: on the verge of the future of 3D graphics

In mid-September of this year, NVIDIA presented its new graphics processors based on the Turing architecture, along with the GeForce RTX 2080 Ti and RTX 2080 video cards. A little later the GeForce RTX 2070 joined them on the market, completing the new series of graphics cards.

Even before that, NVIDIA's existing lineup (GeForce GTX 1080 Ti/1080/1070) had no competitors in its classes, so the company's even faster new solutions could compete only with each other and had to impress 3D graphics fans with new technologies. And, it must be admitted, they did manage to surprise, as did their prices.

In today's article, we take a look at the new Turing architecture using the NVIDIA GeForce RTX 2080 Founders Edition as an example, and compare it with its predecessor, represented by a non-reference version of the GeForce GTX 1080.

NVIDIA Turing architecture – some theory

It is no secret that in recent years CPU performance in typical home tasks and games has been stagnating. Noticeable performance gains from each new generation are a thing of the past. Even the debut of AMD Ryzen, which later became very popular, could not fundamentally change this. Video cards, unlike CPUs, follow different laws of development: their performance has not stood still and has grown steadily with each new generation.

As a result, the performance of each new generation of GPUs has increasingly been limited by the CPU. It is therefore no surprise that video card testing has tended to move to ever higher screen resolutions and heavier anti-aliasing modes.

At the same time, Steam hardware survey statistics show that more than half of users (~60%) have monitors with a resolution of 1920 × 1080 pixels, while only about 5% use 2560 × 1440 or higher. An ordinary gamer is thus squeezed from both sides: performance is limited by the processor on one side and by the monitor resolution on the other. All this makes buying faster and more expensive video cards pointless for the bulk of players.
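
To put these resolutions in perspective, the short Python sketch below simply counts the pixels a GPU has to produce per frame at each one. It is only a rough proxy: we assume rendering cost scales with pixel count, which ignores geometry, CPU-side work and everything else that makes real frame times scale less than linearly. The list of resolutions is our own choice.

```python
# Rough per-frame workload proxy: number of pixels at each resolution.
RESOLUTIONS = {
    "1920 x 1080": (1920, 1080),
    "2560 x 1440": (2560, 1440),
    "3840 x 2160": (3840, 2160),
}


def pixel_count(width: int, height: int) -> int:
    """Pixels the GPU has to produce for every frame at this resolution."""
    return width * height


def relative_to_full_hd() -> dict:
    """Per-frame pixel count of each resolution as a multiple of 1920 x 1080."""
    base = pixel_count(1920, 1080)
    return {name: pixel_count(w, h) / base for name, (w, h) in RESOLUTIONS.items()}


for name, ratio in relative_to_full_hd().items():
    width, height = RESOLUTIONS[name]
    print(f"{name}: {pixel_count(width, height):,} pixels per frame, {ratio:.2f}x Full HD")
```

By this measure 2560 × 1440 is about 1.8 times the work of Full HD and 4K is exactly four times, which is why reviewers reach for high resolutions to keep the GPU, not the CPU, as the bottleneck.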

The way out of this situation is the classic transition from quantity to quality. But what can be done when the quality of the picture on the monitor depends less and less on the performance of the video card and more and more on the ingenuity of programmers, who have to describe the various phenomena of the world with algorithms and then combine and apply those algorithms correctly? These are the challenges that the next generation of NVIDIA graphics cards, built on the new architecture codenamed Turing, is meant to address.

Turing = Volta + RT?

Almost 2.5 years have passed since the introduction of the NVIDIA GeForce GTX 1080, quite a long time for the industry. During this period a new graphics architecture, Volta, was introduced, and it differs significantly from its predecessors, Maxwell and Pascal. For most users this announcement went unnoticed, because the architecture was represented by only one chip, the GV100, aimed at use as a compute accelerator.

A little later, Quadro and Titan video cards based on this chip were presented, but even there the cost, starting at $2,999 for the Titan V, scared off even very wealthy enthusiasts. Fans of computer games therefore got to know this video card and architecture mainly through reviews on the Internet.

Turing, in turn, looks like a development, or rather an offshoot, of Volta. It lacks Volta's large number of FP64 execution units, but it keeps the tensor cores and adds new specialized units. We will describe all of this in more detail.

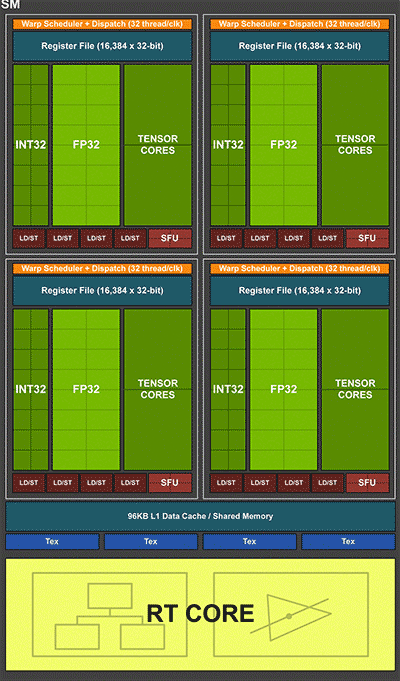

Globally, the Turing architecture is similar to its predecessors. The chip consists of Graphics Processing Clusters (GPCs), which are split into Texture Processing Clusters (TPCs), and the basic building block is still the SM (streaming multiprocessor). It is the SM that has undergone most of the changes:

the trend toward fewer FP32 units per SM continued: Kepler had 192, Maxwell and Pascal reduced this to 128, and Volta and Turing have 64 each. With a comparable total number of FP32 units, this increases both the efficiency of the execution units and the number of TPCs in the chip;

important changes have also taken place at the most basic level. Starting with the G80 (Tesla architecture), the basic unit was the so-called CUDA core, capable of both floating-point and integer operations, but only one at a time: either an integer (INT) or a floating-point (FP) operation. Volta and Turing abandon the unified CUDA core in favor of separate arrays of execution units for integer and floating-point computations. This allows the two kinds of operations to run simultaneously, which, according to NVIDIA's estimates, can increase performance by about 35%;

cache sizes and performance have increased;

Volta and Turing also received specialized execution units called tensor cores, 8 per SM, designed to accelerate the increasingly popular neural networks;

finally, the main distinguishing feature and pride of the Turing architecture are the RT blocks, which speed up ray tracing algorithms many times over. This is the key change in Turing, so important and far-reaching from NVIDIA's point of view that the company even renamed its line of graphics cards from GTX to RTX.
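
Why separate INT and FP pipelines can yield a gain of this order is easy to see in an idealized model. The Python sketch below is our own simplification, not NVIDIA's methodology: it assumes each pipeline issues one instruction per clock and ignores dependencies, memory stalls and scheduling. The mix of 35 integer instructions per 100 FP instructions is a hypothetical figure chosen to show how an estimate of about 35% can arise.

```python
def cycles_shared_pipe(fp_ops: int, int_ops: int) -> int:
    """Unified core: every instruction, INT or FP, occupies the same pipeline."""
    return fp_ops + int_ops


def cycles_split_pipes(fp_ops: int, int_ops: int) -> int:
    """Separate INT and FP pipelines run concurrently; the busier one sets the pace."""
    return max(fp_ops, int_ops)


def concurrency_speedup(fp_ops: int, int_ops: int) -> float:
    """Idealized speedup from issuing INT and FP work in parallel."""
    if fp_ops == 0 and int_ops == 0:
        raise ValueError("empty instruction mix")
    return cycles_shared_pipe(fp_ops, int_ops) / cycles_split_pipes(fp_ops, int_ops)


# Hypothetical shader mix: 35 integer instructions for every 100 FP instructions.
print(f"{concurrency_speedup(100, 35):.2f}x")
```

In this model a pure FP workload gains nothing, and the speedup approaches 2x only when INT and FP work is perfectly balanced; real shaders sit somewhere in between.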

Ray tracing

So, NVIDIA has clearly put a lot of effort not only into the traditional increase in performance, but also into laying the groundwork for qualitatively new capabilities that previous generations of video cards lacked. In the history of video cards there have not been many milestones that qualitatively changed 3D graphics. The first one, which NVIDIA itself mentions, was the Transform and Lighting (T&L) unit in the GeForce 256, which moved transform and lighting calculations from the central processor to the graphics processor. It was this unit that allowed the GeForce 256 to be called a graphics processor and not just a 3D accelerator. The next milestone was support for shaders in GeForce 3 video cards. Further development of this technology qualitatively changed what game developers could do with effects, and modern games simply cannot do without shaders. Also worth noting is support for general-purpose computing on the GPU, which appeared in 2006 with the release of the G80 and the first version of CUDA. Modern games have adopted this feature too and shift some of the computational load to the GPU.

And now we have finally reached the present day. In the Turing architecture, NVIDIA has implemented special units that radically speed up ray-traced lighting algorithms, to the point where they can run in real time. A milestone? Of course, and here is why.

Traditionally, game graphics have evolved by adding algorithms that simulate real-world phenomena. T&L, shaders, GPU computing: all of this served one goal, to bring picture quality closer to what we see in real life and achieve photorealism. That is not to say it was all in vain; the difference in picture quality between modern games and games of 20 years ago is obvious. Yet many users feel that 3D graphics have reached a quality threshold that cannot be overcome.

This is partly true. NVIDIA believes that lighting is the key to improving image quality and realism. Today, all the effects seen in a scene (lighting, reflections, diffusion) are actually created by hand. This is a time-consuming process that requires combining many different algorithms, none of which is perfect on its own. Developers have to think through every effect the player observes and then write the algorithms responsible for it. Obviously, the ideal cannot be achieved this way.

After all, the world consists of many different effects, both obvious and subtle. A programmer cannot, a priori, describe all of them reliably and in detail, and the human brain distinguishes a real picture from the one a game produces precisely by such small things and details.

In contrast to traditional rendering in games, the film industry and professional applications use another method of calculating lighting: ray tracing. This method has long been known and used in many fields where the most realistic image possible is required. Its essence is calculating the paths of all the rays of light in the scene. This naturally produces all the necessary effects: shadows, highlights, reflections, diffusion. The programmer does not need to write an algorithm for each effect or place fake light sources in the scene to make it look realistic; ray tracing alone is enough.
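
To make the idea concrete, here is a deliberately tiny Python sketch of the core step of a ray tracer: find where a ray hits the nearest object, then cast a shadow ray toward the light. It is a toy under our own assumptions (spheres only, one point light, diffuse shading, one ray at a time) and says nothing about how DXR or Turing's RT blocks work internally. Note that the shadow comes out of the same intersection test, with no shadow-specific algorithm.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(a: Vec) -> Vec:
    length = math.sqrt(dot(a, a))
    return (a[0] / length, a[1] / length, a[2] / length)


def point_along(origin: Vec, direction: Vec, t: float) -> Vec:
    return (origin[0] + t * direction[0], origin[1] + t * direction[1], origin[2] + t * direction[2])


@dataclass
class Sphere:
    center: Vec
    radius: float

    def intersect(self, origin: Vec, direction: Vec) -> Optional[float]:
        """Distance to the nearest hit in front of the origin (direction must be unit length)."""
        oc = sub(origin, self.center)
        b = dot(oc, direction)
        c = dot(oc, oc) - self.radius ** 2
        discriminant = b * b - c
        if discriminant < 0:
            return None  # the ray misses the sphere
        root = math.sqrt(discriminant)
        for t in (-b - root, -b + root):
            if t > 1e-9:
                return t
        return None


def trace(origin: Vec, direction: Vec, scene: Sequence[Sphere], light: Vec) -> float:
    """Brightness in [0, 1] seen along one ray: 0 for a miss or a point in shadow."""
    hits = [(s.intersect(origin, direction), s) for s in scene]
    hits = [(t, s) for t, s in hits if t is not None]
    if not hits:
        return 0.0
    t, sphere = min(hits, key=lambda hit: hit[0])
    point = point_along(origin, direction, t)
    normal = normalize(sub(point, sphere.center))
    to_light = sub(light, point)
    light_distance = math.sqrt(dot(to_light, to_light))
    to_light = normalize(to_light)
    # Shadow ray: start slightly off the surface and look for anything before the light.
    shadow_origin = point_along(point, normal, 1e-6)
    for s in scene:
        t_block = s.intersect(shadow_origin, to_light)
        if t_block is not None and t_block < light_distance:
            return 0.0
    return max(0.0, dot(normal, to_light))  # Lambertian (diffuse) term
```

Even in this toy, every pixel costs at least one intersection test per object for the primary ray plus another round for the shadow ray; real renderers trace many more rays per pixel and use acceleration structures to avoid testing every ray against every object, and that per-ray work is what makes ray tracing so expensive.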

A reasonable question arises: if everything is so simple, why is this method not used in games? The answer: insufficient performance for real-time computation. Ray tracing is a very resource-intensive algorithm; rendering one frame can take tens of seconds, whereas games need tens (if not hundreds) of frames per second. NVIDIA has gone to great lengths to bring ray tracing to games as well, and we are not only talking about developing and promoting Turing video cards. Large-scale preparatory work was carried out alongside: Microsoft unveiled the DirectX Raytracing API to enable ray tracing in games, and NVIDIA helped developers add support for the technology to games due out this year. So players will not have to wait several years.

Incidentally, Microsoft's ray tracing API also allows ray tracing on existing video cards using traditional execution units. The RT blocks in Turing accelerate these calculations in hardware many times over, reaching performance sufficient to carry them out in real time. NVIDIA is introducing a new unit to measure this performance: billions of rays per second (GRay/s).

How effective are the RT blocks? For comparison, the GeForce RTX 2080 Ti delivers 10 GRay/s, while the GeForce GTX 1080 Ti, which lacks these blocks, manages only 1.1 GRay/s, a difference of more than 9 times. In practice, however, the performance difference is promised to be about 5-6 times. That is still a qualitative difference. Owners of existing video cards will thus also be able to see and appreciate the beauty of the new lighting algorithms, but only GeForce RTX owners will be able to play comfortably.
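
A rough way to read a GRay/s figure is to divide it by the number of pixels a game has to deliver per second. The Python sketch below does that arithmetic; it treats the quoted figures as sustained throughput and assumes every ray goes to the screen, which is optimistic, since in a real hybrid renderer ray tracing shares each frame with rasterization and shading.

```python
def rays_per_pixel(grays_per_second: float, width: int, height: int, fps: float) -> float:
    """Rays available per pixel per frame if the whole ray budget went to one screen."""
    rays_per_second = grays_per_second * 1e9
    pixels_per_second = width * height * fps
    return rays_per_second / pixels_per_second


def frame_time_ms(fps: float) -> float:
    """Time budget for one frame, in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    for card, grays in (("GeForce RTX 2080 Ti", 10.0), ("GeForce GTX 1080 Ti", 1.1)):
        for width, height in ((1920, 1080), (3840, 2160)):
            budget = rays_per_pixel(grays, width, height, fps=60)
            print(f"{card} at {width}x{height}, 60 fps: ~{budget:.1f} rays/pixel/frame")
    print(f"frame budget at 60 fps: {frame_time_ms(60):.1f} ms")
```

Even under these generous assumptions, the budget is a few dozen rays per pixel at Full HD and far fewer at 4K, which is why full scene ray tracing is still out of reach and hybrid rendering is used instead.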

It should be noted, though, that even with these blocks, modern video cards are not powerful enough for full-fledged real-time ray tracing. For now, therefore, hybrid rendering will be used, in which traditional technologies and algorithms are complemented by ray tracing. Over time, if the technology is accepted by the market and players, rendering will rely more and more on ray tracing and less on traditional methods. For players, this will mean higher image quality and realism; for developers, it will cut the cost of developing the many algorithms that add realism to the picture, since ray tracing will replace them all.

Other changes

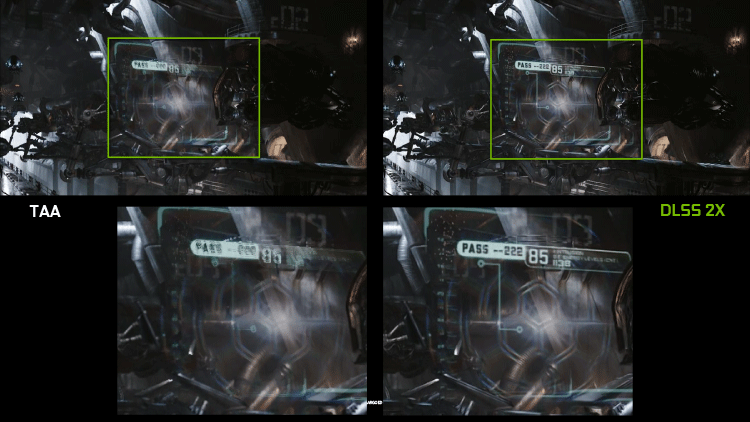

Now that it is clear why NVIDIA added ray tracing acceleration units to Turing, let us figure out why a gaming video card needs tensor cores, which are designed to accelerate neural networks. They are meant to improve image quality while reducing the load on the main execution units. NVIDIA calls this technology Deep Learning Super Sampling (DLSS), or neural network anti-aliasing. Games that support it can improve image quality the way traditional anti-aliasing methods do, but the work is performed not by the usual ROPs but by the new tensor cores. This delivers an even better image almost for free.

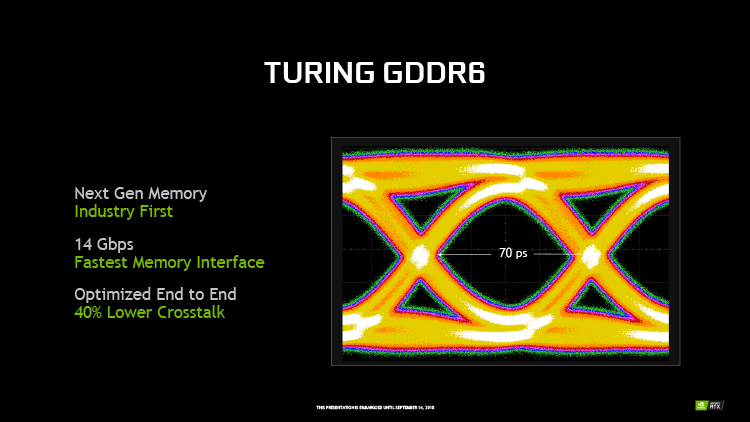

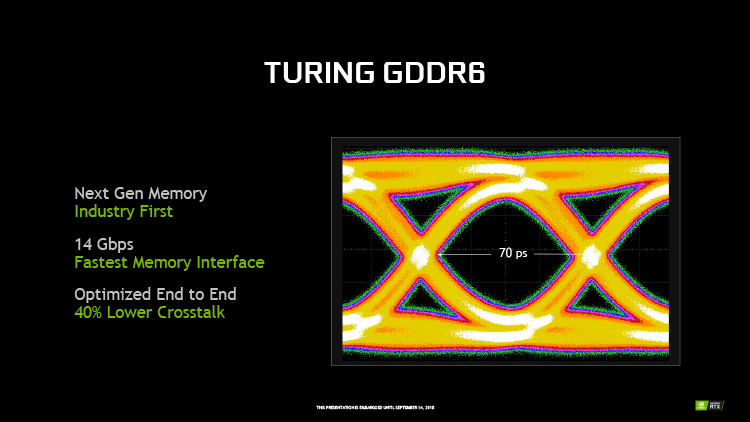

Another interesting innovation in Turing is support for mesh shaders. This is a new way of working with scene geometry that replaces vertex shaders and tessellation. Its important advantages are a reduced load on the central processor and more flexible control over geometry.

Turing graphics cards also support GDDR6 memory, which runs at higher frequencies than GDDR5X. The GeForce RTX cards presented so far are equipped with memory at an effective frequency of 14 GHz.

Thanks to the higher memory frequency, the new products offer higher memory bandwidth: 448 GB/s for the RTX 2080/2070 with a 256-bit bus and an impressive 616 GB/s for the flagship RTX 2080 Ti with a 352-bit bus. New memory compression algorithms have not been forgotten either; their efficiency is estimated to be up to 50% higher than in the previous generation.
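
These figures follow from a simple formula: peak bandwidth equals the effective per-pin data rate times the bus width, divided by eight bits per byte. A minimal Python sketch, treating the 14 GHz effective frequency as 14 Gbit/s per pin; the GeForce GTX 1080 line (10 Gbit/s GDDR5X) is added by us for comparison, and the gains from compression are not modeled.

```python
def peak_bandwidth_gb_s(data_rate_gbit_s: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s: per-pin rate x bus width / 8 bits per byte."""
    return data_rate_gbit_s * bus_width_bits / 8


CARDS = {
    "GeForce RTX 2080/2070 (GDDR6)": (14.0, 256),
    "GeForce RTX 2080 Ti (GDDR6)": (14.0, 352),
    "GeForce GTX 1080 (GDDR5X)": (10.0, 256),
}

for name, (rate, width) in CARDS.items():
    print(f"{name}: {peak_bandwidth_gb_s(rate, width):.0f} GB/s")
```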

As for running several video cards together, there are some interesting changes here as well. First, classic SLI is virtually dead. The high-bandwidth dual bridge that debuted with the GeForce GTX 1080 was never developed further or widely adopted. Video cards are now linked by NVIDIA's proprietary second-generation NVLink bus, whose bandwidth is not just several but dozens of times higher than that of classic SLI.

Whereas a standard SLI bridge provided a link between video cards of about 1 GB/s, and the bridge introduced with Pascal about 2.5 GB/s, one NVLink link provides 50 GB/s, 20 times more! The senior card, the GeForce RTX 2080 Ti, has two NVLink links, for 100 GB/s. That is far more than the PCI Express bus provides and comparable to video memory bandwidth.

This capability is used in the professional Quadro series, allowing several accelerators to pool their video memory. Unfortunately, memory pooling is not available on GeForce graphics cards, but in any case the high bandwidth of NVLink enables the new multi-GPU modes that appeared in DX12 and ensures efficient performance scaling at future high resolutions such as 5K and 8K.
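
One way to see why link bandwidth matters for multi-GPU rendering at high resolutions is to estimate how long it takes to move a finished frame from one card to another. The Python sketch below uses the approximate link figures quoted above and assumes an uncompressed 4 bytes per pixel and 1 GB = 10^9 bytes; real multi-GPU modes transfer different amounts of data, so treat the results as orders of magnitude only.

```python
# Approximate link bandwidths quoted in the text, in GB/s.
LINK_BANDWIDTH_GB_S = {
    "classic SLI bridge": 1.0,
    "Pascal high-bandwidth bridge": 2.5,
    "NVLink, one link": 50.0,
    "NVLink, two links (RTX 2080 Ti)": 100.0,
}


def frame_transfer_ms(width: int, height: int, bytes_per_pixel: int, gb_per_s: float) -> float:
    """Time to copy one uncompressed frame over a link, in milliseconds."""
    frame_bytes = width * height * bytes_per_pixel
    return frame_bytes / (gb_per_s * 1e9) * 1000.0


for link, bandwidth in LINK_BANDWIDTH_GB_S.items():
    ms = frame_transfer_ms(3840, 2160, 4, bandwidth)
    print(f"{link}: {ms:.2f} ms per 4K frame")
```

At 60 fps a frame lasts about 16.7 ms, so at 4K the older bridges would spend most or all of that time just copying a frame, while a single NVLink link needs well under a millisecond.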

Second, multi-GPU support is now the privilege of only the senior chips and cards in the family. The GeForce RTX 2070 and below have no NVLink connector and therefore cannot work in SLI. There is a certain logic in this: combining several video cards makes sense only when one is not enough and no faster model exists. Finally, configurations of three and four video cards, which were already restricted in the previous generation, have sunk into oblivion: Turing graphics cards support SLI with only two cards.

Yet another interesting innovation in the new products is a USB Type-C connector (USB 3.1 Gen2), which provides four DisplayPort lanes, a USB link and up to 35 W of power. This combination is aimed at next-generation virtual reality headsets using the VirtualLink standard. However, nothing prevents you from using the port in the ordinary way, to connect a monitor or USB devices.

Let us summarize the theoretical part. The Turing architecture shows that NVIDIA is not slowing down. Moreover, this is a simultaneous offensive on several fronts and an attempt to appeal to different categories of users. Performance gains come from architectural changes and a slight increase in the number of execution units. Enthusiasts will be impressed by the higher performance and by what NVLink offers for building ultimate high-resolution gaming PCs. Hardware-accelerated ray tracing promises a qualitative improvement in image quality, which may persuade even users who are satisfied with the performance of their current card to upgrade.

NVIDIA has also built a strategic reserve, again in several directions. Ray tracing sets the direction for the development of game graphics and will let the growing performance of future video cards pay off even at the most common monitor resolutions. Neural networks and machine learning have been brought into games to improve image quality. Mesh shaders have been introduced to offload the central processor.

However, this coin has a flip side: complexity. The 12 nm process used for Turing is a variant of the 16 nm process that, as you may recall, was used to make Pascal chips. Consequently, there has been no noticeable increase in density, and every improvement must be paid for out of the transistor budget, which, alas, is not infinite. Turing chips have turned out unprecedentedly large for their market positions. The smallest, TU106, has an area of 445 mm², about 2.2 times that of the GP106 (200 mm², GeForce GTX 1060), about 1.4 times that of the GP104 (314 mm², GeForce GTX 1080), and almost as large as the GP102 (471 mm², GeForce GTX 1080 Ti/Titan Xp). The middle chip, TU104, measures 545 mm², comparable to the largest chips in NVIDIA history and bigger than some of them (G80: 484 mm², GT200: 576 mm², GF100: 529 mm², GK110: 561 mm², GM200: 601 mm²). The senior chip of the current line, TU102, at 754 mm², is the largest NVIDIA chip in the GeForce line, second only to the GV100 (Titan V).
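
The area comparisons in this paragraph are easy to recompute from the die sizes it lists. A minimal Python sketch, with areas in mm² exactly as quoted in the text:

```python
# Die areas in mm², as quoted in the text.
DIE_AREA_MM2 = {
    "GP106": 200, "GP104": 314, "GP102": 471,
    "TU106": 445, "TU104": 545, "TU102": 754,
}


def area_ratio(chip: str, reference: str) -> float:
    """How many times larger `chip` is than `reference` by die area."""
    return DIE_AREA_MM2[chip] / DIE_AREA_MM2[reference]


for reference in ("GP106", "GP104", "GP102"):
    print(f"TU106 vs {reference}: {area_ratio('TU106', reference):.2f}x")
```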

It should also be remembered that each square millimeter of silicon is more expensive to produce on a newer process than on an older one. All this, combined with NVIDIA's quite natural desire to make money, has resulted in prices for the new products that can surprise even seasoned enthusiasts accustomed to buying the top cards in the line. Are the new graphics cards and technologies worth the price? That is what we will find out in the practical part.

Review of the NVIDIA GeForce RTX 2080 Founders Edition 8 GB video card

Technical specifications and recommended price

The technical characteristics and price of the NVIDIA GeForce RTX 2080 are shown in the table, in comparison with the reference versions of the NVIDIA GeForce RTX 2080 Ti, GeForce GTX 1080 Ti and GeForce GTX 1080.

Packaging and bundle

The official version of the NVIDIA GeForce RTX 2080 Founders Edition comes in a compact but very sturdy box made of thick cardboard. The packaging is designed in NVIDIA's proprietary color scheme and looks quite attractive.

There is practically no information on the box itself apart from the model name and the manufacturer. However, on the bottom of the box you can still find the minimum system requirements, a link to the warranty terms, and the serial number and barcodes.

Inside the cardboard box there is an additional shell made of a dense material that nonetheless yields when pressed, reliably protecting the video card from damage.

A separate flat box contains a DisplayPort-to-DVI adapter and instructions for installing the video card and drivers.

The video card is made in China and comes with NVIDIA's own three-year warranty. Note that not all custom models from NVIDIA's partners offer the same warranty period. Three years should let you keep gaming on the NVIDIA GeForce RTX 2080 Founders Edition until the next generation of video cards without any worries. We add that the recommended price of this model on the Russian market is 63,990 rubles.

PCB design and features

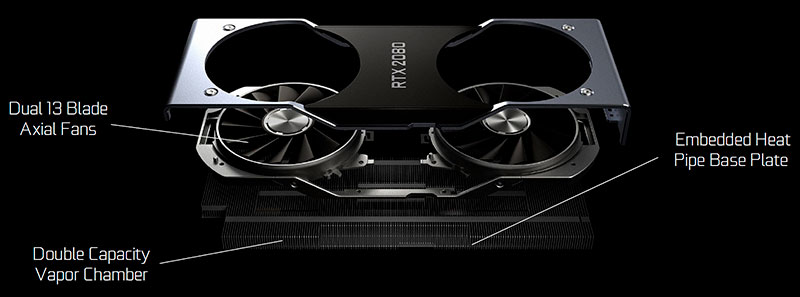

With its new reference models, NVIDIA has radically changed the design of the cooling system and of the video card as a whole. Instead of a radial (blower) fan that pushes air through the heatsink, the cooler now uses two axial fans that blow air into a huge heatsink. The model name is shown in the center of the shroud and on the back plate.

Right away we would like to note the perfect build quality and fit of all parts. Do not get us wrong: the reference GeForce GTX 1080 (Ti) never suffered in terms of quality, but the new GeForce RTX 2080 (Ti) takes build quality to the next level.

Every component of this expensive “block” is made of either pleasant-to-the-touch plastic or aluminum, and all parts fit together flawlessly. Unfortunately, this cannot be conveyed in photos. The video card measures 267 × 102 × 39 mm and weighs over a kilogram. Stickers with a barcode and a serial number are on the back of the card near the video outputs.

The serial number, of course, is unique to each card. On the top edge of the video card there are two power connectors, a six-pin and an eight-pin.

The Founders Edition's rated power consumption is 225 watts, while regular GeForce RTX 2080 cards should consume no more than 215 watts. The recommended power supply for a system with one such video card is at least 650 watts. Also noteworthy is the connector for the new high-speed NVLink 2.0 interface, used to combine a pair of video cards in SLI mode.
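
For context on the two auxiliary connectors: the PCI Express specification rates the x16 slot at 75 W, a six-pin connector at 75 W and an eight-pin connector at 150 W. The Python sketch below adds these up and compares the total with the Founders Edition's 225 W rating and its maximum power limit. The limit is our assumption: we take the 124% maximum used later in this review to be relative to 225 W, which works out to about 279 W, in line with the 280 W BIOS limit also reported later.

```python
SLOT_W = 75        # PCIe x16 slot rating
SIX_PIN_W = 75     # six-pin PCIe auxiliary connector rating
EIGHT_PIN_W = 150  # eight-pin PCIe auxiliary connector rating


def rated_input_w(six_pin: int, eight_pin: int) -> int:
    """Total in-spec power a card can draw from the slot plus its auxiliary connectors."""
    return SLOT_W + six_pin * SIX_PIN_W + eight_pin * EIGHT_PIN_W


budget = rated_input_w(six_pin=1, eight_pin=1)
rated_tdp = 225                    # Founders Edition rating
max_limit = rated_tdp * 124 / 100  # assumed: the 124% limit is relative to 225 W
print(f"in-spec input: {budget} W, max power limit: ~{max_limit:.0f} W, "
      f"headroom: ~{budget - max_limit:.0f} W")
```

In other words, even at its maximum power limit the card stays within what the slot and its two connectors are rated to deliver.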

Once again, we recall that no more than two GeForce RTX 2080 (Ti) cards can now be combined, and the RTX 2070 does not support SLI at all. DVI has disappeared from the video outputs; instead, users get one HDMI 2.0b and three DisplayPort 1.4a outputs.

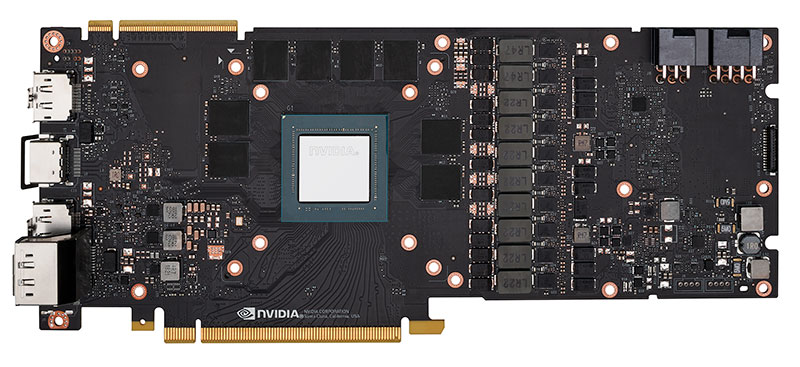

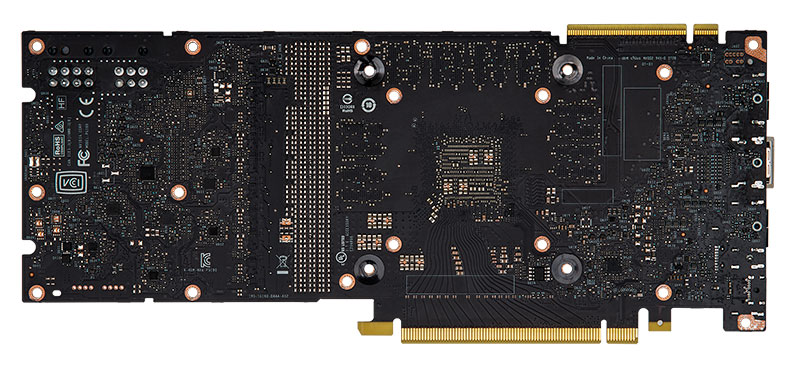

In addition, a USB 3.1 Gen2 port has appeared, which can be used both as a regular port and for connecting virtual reality devices. Note the perforation of the video output bracket, although the new cooling system design no longer depends on it as much as the previous one did. Let us move on to the printed circuit board. We will use photos from the official NVIDIA website, since we were advised not to disassemble the video card. We may return to this in future articles, but for now let us look at the rather high-quality photos of the NVIDIA GeForce RTX 2080 Founders Edition PCB.

Although the NVIDIA website states that the GeForce RTX 2080 Founders Edition uses a twelve-phase power system, we counted only ten phases: eight dedicated to the graphics processor and two more to the video memory and power circuits. The phases are built on iMON DrMOS assemblies and are controlled by a uPI uP9512 controller.

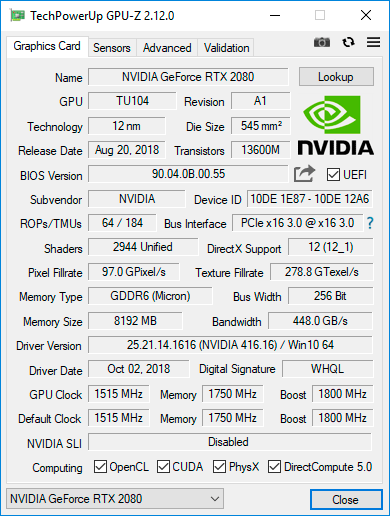

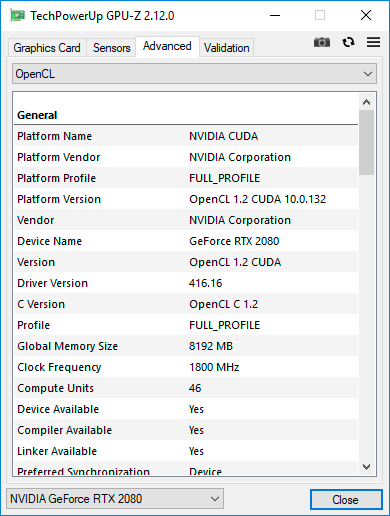

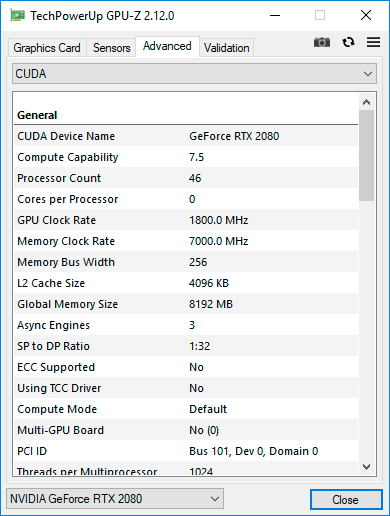

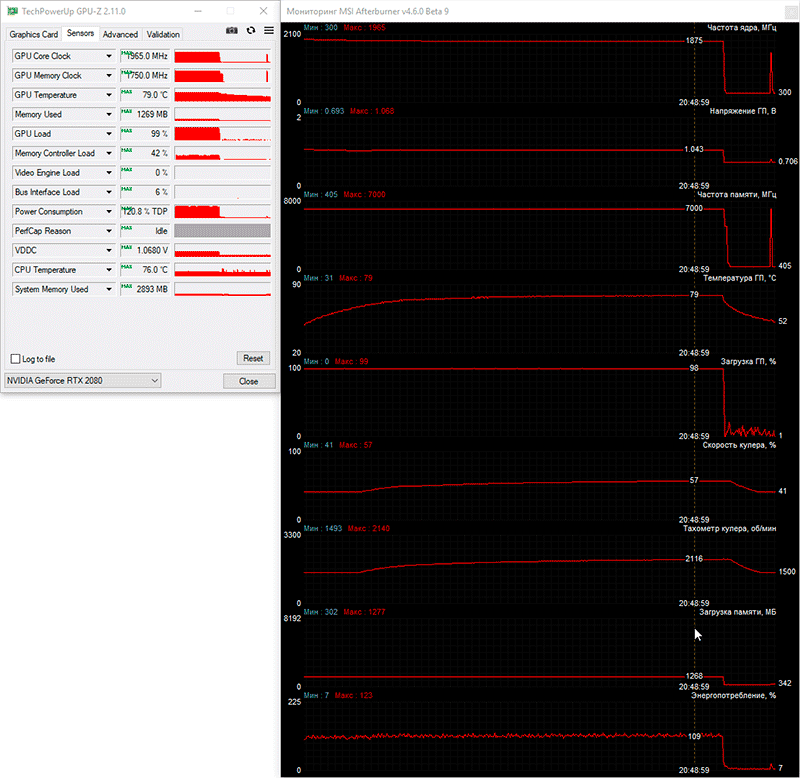

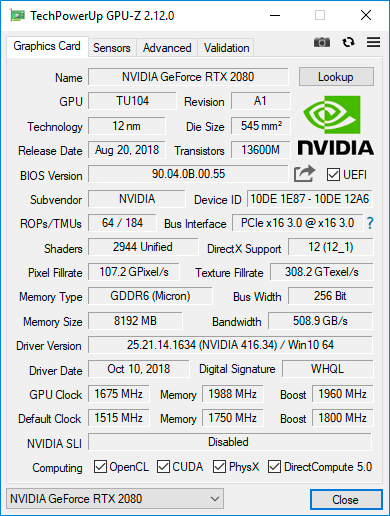

The GeForce RTX 2080 is based on the Turing-architecture TU104 GPU, manufactured on a 12 nm FinFET process. The die area is 545 mm², and the transistor count is approximately 13.6 billion. The GPU's base frequency in 3D mode is rated at 1515 MHz, and in boost mode it can rise to 1800 MHz. According to monitoring data, the GPU frequency of our card briefly reached 1965 MHz, then stabilized at 1875 MHz and did not drop below that in 3D. In 2D mode, the GPU frequency drops to 300 MHz and the voltage from 1.043 V to 0.706 V; these values may differ from card to card.

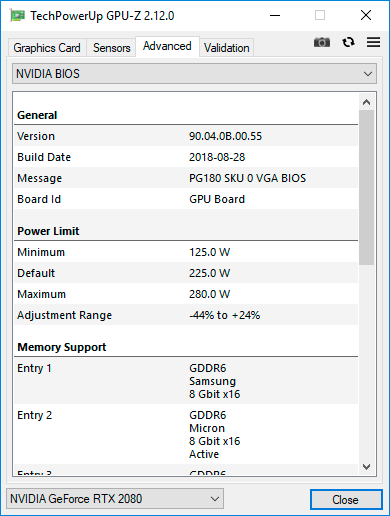

The NVIDIA GeForce RTX 2080 Founders Edition is equipped with 8 GB of GDDR6 memory in Micron chips with a rated effective frequency of 14 GHz. With a 256-bit bus, this memory delivers record bandwidth for such a bus width, up to 448.3 GB/s. In 2D mode, the memory frequency drops to 810 MHz, just as on GeForce GTX 1080 cards. The characteristics of the NVIDIA GeForce RTX 2080 Founders Edition as reported by the GPU-Z utility are shown below.

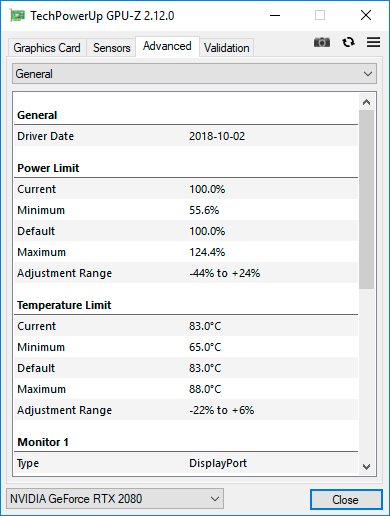

Judging by the video card's BIOS (version 90.04.0B.00.55 dated August 28, 2018), the maximum power limit of the reference version is 280 watts, and the maximum GPU temperature is 88 degrees Celsius.

Cooling system

The reference versions of the NVIDIA GeForce RTX 2080 Ti and GeForce RTX 2080 are equipped with a completely new air cooling system. In the past, reference cards were often passed over partly because their coolers were noisy and performed only mediocrely; now NVIDIA's partners will have to work hard to create something equally effective and quiet within a two-slot design.

The cooler consists of a huge aluminum heatsink, a metal frame with fans, and a shroud covering the heatsink.

A large vapor chamber is built into the base of the heatsink, and the fin spacing is barely more than 1 mm.

The result is perhaps a record heatsink in terms of dissipation area, and yet the designers managed to keep the cooler within two slots. The whole assembly is cooled by two 85 mm fans with thirteen-blade impellers.

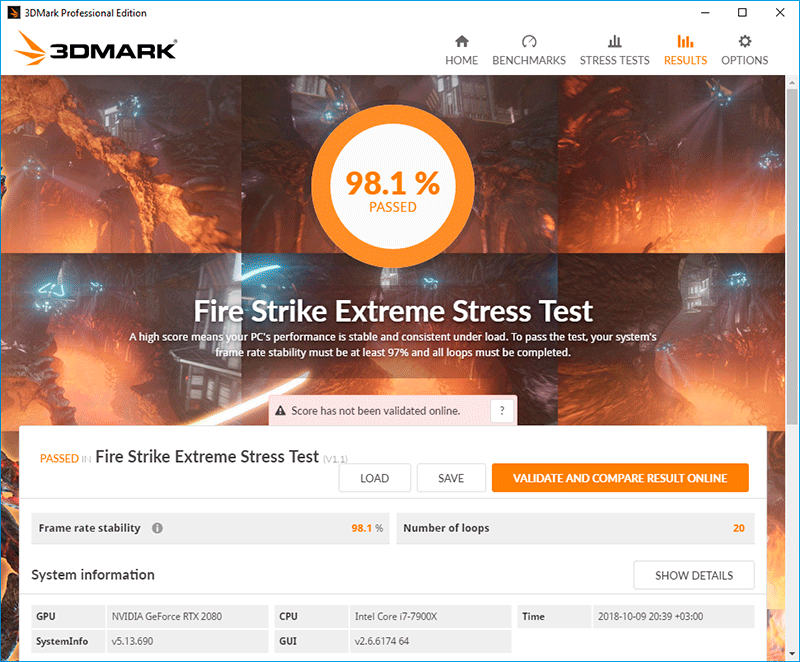

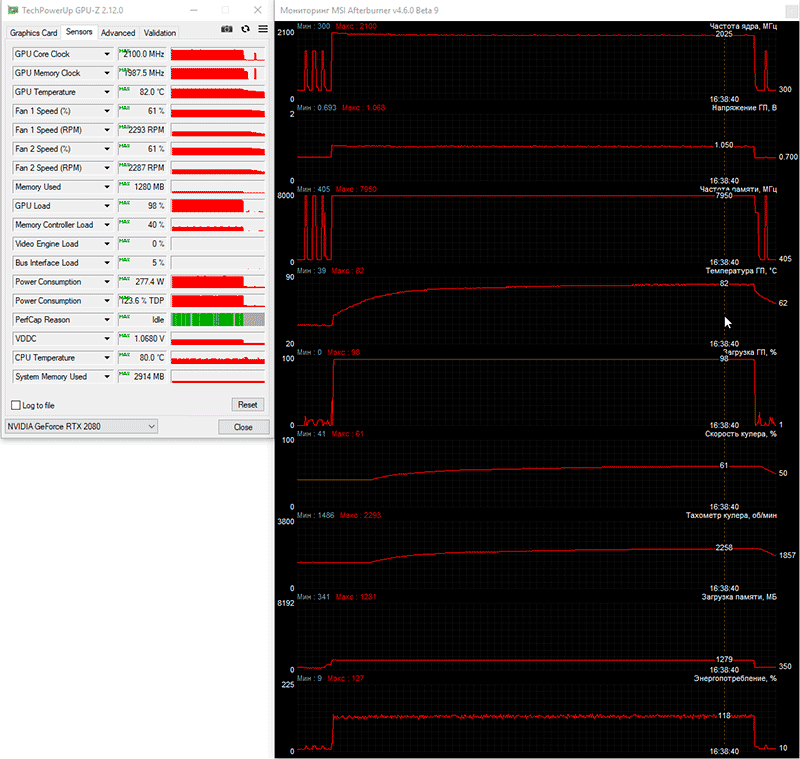

The fans exhaust air through the top and bottom edges of the video card and, to a small extent, through the perforations in the video output bracket. Fan speed is controlled by PWM and, according to monitoring data, can range from 1490 to 3730 rpm, but even under the heaviest load the fans do not reach the upper limit, as we will now see. To load the card for the temperature tests, we ran nineteen loops of the Fire Strike Extreme stress test from the 3DMark suite.

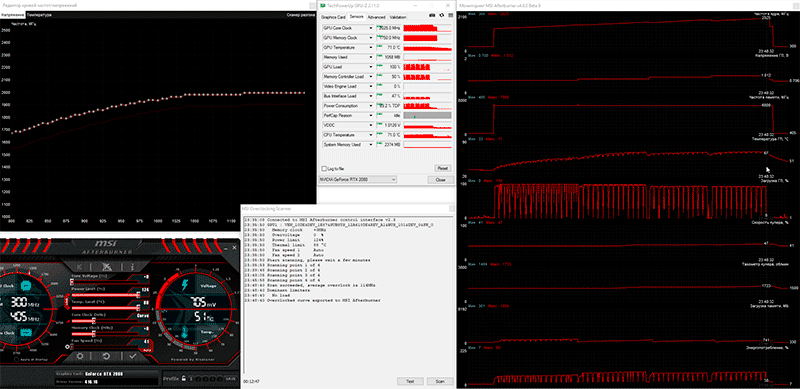

Temperatures and all other parameters were monitored with MSI Afterburner 4.6.0 beta 9 and GPU-Z 2.11.0 or newer. The tests were run in a closed system case, whose configuration is given in the next section of the article, at a room temperature of about 25 degrees Celsius. Let us see how the new NVIDIA GeForce RTX 2080 Founders Edition cooler performs in automatic fan mode.

Automatic mode (1490-2140 rpm)

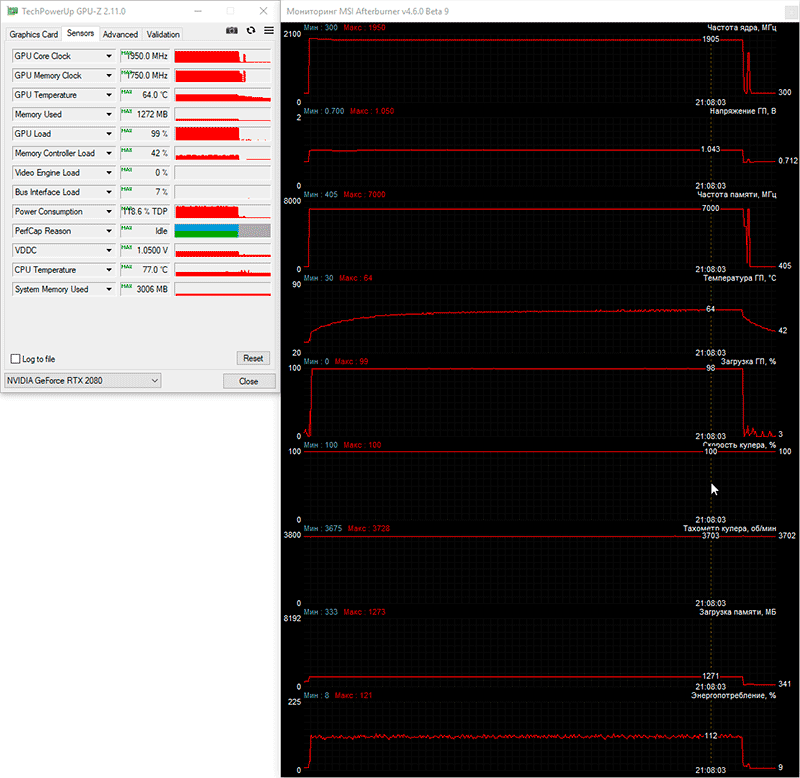

Well, that is a very good result for a reference video card! The GPU temperature reached 79 degrees Celsius and rose no further, and its frequency stabilized at 1875 MHz. Most importantly, the fan speed increased by only 650 rpm, which kept the GeForce RTX 2080 Founders Edition within a comfortable noise level. In 2D the card is completely inaudible. If the fans are manually set to maximum speed, the peak GPU temperature immediately drops by 15 degrees, to 64 degrees Celsius.

Maximum speed (~ 3730 rpm)

At the same time, the stable GPU frequency rose to 1905 MHz, and that, mind you, on a reference card! To put this achievement in perspective, let us remind readers that the reference NVIDIA GeForce GTX 1080, with its blower at maximum speed (4000 rpm) and at a lower room temperature, reached 68 degrees Celsius, with its GPU running at 1823 MHz. And this does not take into account the difference in performance and technology between the Pascal GP104 and the Turing TU104. In our opinion, NVIDIA has taken a very serious step forward with this cooling system.

Overclocking potential

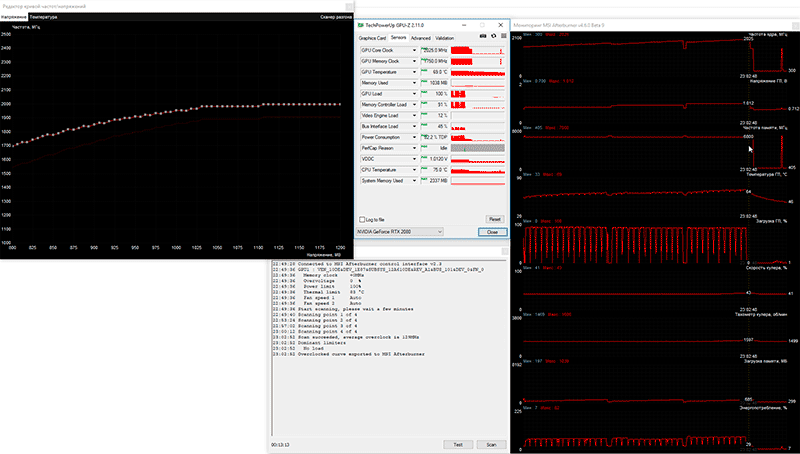

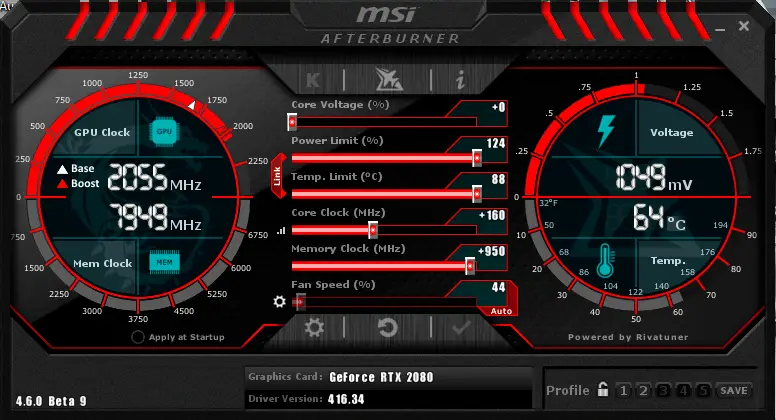

The new NVIDIA GeForce RTX 2080 Ti and GeForce RTX 2080 support GPU Boost 4.0, the fourth version of NVIDIA's dynamic frequency control technology, whose main innovation, in our opinion, is automatic overclocking. It is supported by the proprietary utilities of video card manufacturers as well as by the latest version of the indispensable MSI Afterburner. To use it in Afterburner, press Ctrl + F, select OC Scanner and click Scan. For about 15-20 minutes the scanner raises the GPU frequency step by step and tests for stability and errors, with no user involvement required. The result is the maximum GPU frequency at which the video card remains stable.
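
The review does not describe the scanner's internals, and NVIDIA's actual algorithm is proprietary. As a purely hypothetical illustration of the step-by-step search described above, the Python sketch below raises the clock in fixed steps until a stability check fails and keeps the last passing value. The 15 MHz step, the 2200 MHz ceiling, the arbitrary 1896 MHz starting point and the `fake_stress_test` function are all our assumptions; a real tool would run a GPU workload and check for errors at each step.

```python
from typing import Callable


def scan_max_stable_clock(
    start_mhz: int,
    is_stable: Callable[[int], bool],
    step_mhz: int = 15,
    ceiling_mhz: int = 2200,
) -> int:
    """Raise the clock step by step until a stability check fails; return the last passing clock."""
    best = start_mhz
    candidate = start_mhz + step_mhz
    while candidate <= ceiling_mhz and is_stable(candidate):
        best = candidate
        candidate += step_mhz
    return best


def fake_stress_test(clock_mhz: int) -> bool:
    """Hypothetical stand-in for a real GPU stability test: this 'chip' fails above 2030 MHz."""
    return clock_mhz <= 2030


if __name__ == "__main__":
    print(scan_max_stable_clock(1896, fake_stress_test))
```

A linear search like this is simple and safe but slow, since every step needs its own stability test, which is consistent with a scan taking a quarter of an hour. Its result also depends on how hard the test workload is, which may explain why a scanner can settle on clocks that a heavier load such as 3DMark would not sustain.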

We ran the first such test at the default power and temperature limits of our NVIDIA GeForce RTX 2080 Founders Edition; here are the results.

As you can see, the GPU frequency increased by 129 MHz, to a final 2025 MHz. Not bad, but note that the scanner heats the video card noticeably less than 3DMark does.

Next, we raised the power and temperature limits to their maximum values (124% and 88 °C, respectively) and ran the scanner again, but the gain was even smaller: only 114 MHz.

Clearly, the automatic overclocking technology cannot yet be called perfect, but for most ordinary users it will greatly simplify overclocking, although the video memory still has to be overclocked manually. We then moved on to a manual search for the card's maximum frequencies.

After a couple of hours of testing, we found that our NVIDIA GeForce RTX 2080 Founders Edition can be overclocked by 160 MHz (+10.6%) on the core and by 1900 MHz (+13.6%) on the video memory.

That is a very good overclocking result for a reference card: the final frequencies were 1675-1960 / 15904 MHz.
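
The percentages quoted above are relative to the stock base GPU clock (1515 MHz) and the stock effective memory frequency (14000 MHz). A short Python check, which also estimates the peak memory bandwidth at the overclocked 15904 MHz with the usual per-pin rate × bus width / 8 formula (an idealized figure, not a measurement):

```python
def gain_percent(stock_mhz: float, delta_mhz: float) -> float:
    """Overclock as a percentage of the stock frequency."""
    return 100.0 * delta_mhz / stock_mhz


core_gain = gain_percent(1515, 160)       # base GPU clock + 160 MHz
memory_gain = gain_percent(14000, 1900)   # effective memory clock + 1900 MHz
oc_bandwidth_gb_s = 15.904 * 256 / 8      # 15904 MHz effective on a 256-bit bus

print(f"core +{core_gain:.1f}%, memory +{memory_gain:.1f}%, ~{oc_bandwidth_gb_s:.1f} GB/s")
```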

After overclocking, the peak frequency of the graphics processor of the video card reached 2100 MHz, but during testing it stabilized at 2025 MHz.

Automatic mode (1490-2290 rpm)

The GPU temperature of the overclocked card rose to 82 degrees Celsius, and the fan speed under automatic control increased by 150 rpm. During game testing we had to lower the GPU and video memory frequencies slightly, so the final overclocking result is 1670-1983 / 15680 MHz. Let us move on to the test configuration and methodology, and then to the test results.

Test configuration, tools and testing methodology

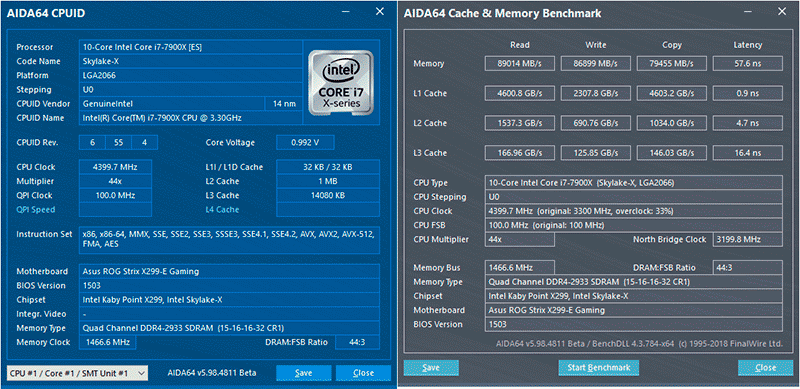

The video cards were tested in a closed system case with the following hardware configuration: motherboard: ASUS ROG Strix X299-E Gaming (Intel X299 Express, LGA2066, BIOS 1503 dated 08/31/2018); central processor: Intel Core i9-7900X 3.3-4.5 GHz (Skylake-X, 14 nm, U0, 10 × 1024 KB L2, 13.75 MB L3, TDP 140 W); CPU cooling system: Phanteks PH-TC14PE (2 × Corsair AF140, 760 ~ 1090 rpm); thermal interface: ARCTIC MX-4 (8.5 W/(m × K)); RAM: DDR4 4 × 4 GB Corsair Vengeance LPX 2800 MHz (CMK16GX4M4A2800C16) (XMP 2800 MHz / 16-18-18-36_2T / 1.2 V or 3000 MHz / 16-18-18-36_2T / 1.35 V); video cards:

NVIDIA GeForce RTX 2080 Founders Edition 8 GB / 256 bit, 1515-1800 (1965) / 14000 MHz and overclocked to 1670-1983 (2100) / 15680 MHz;

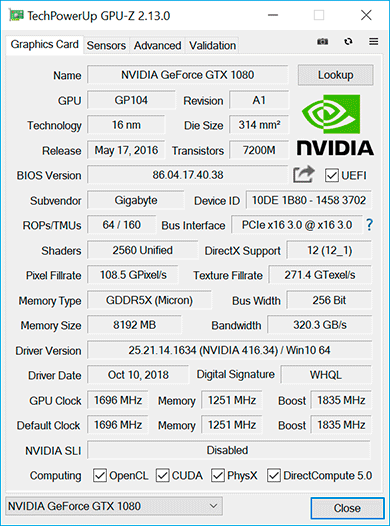

Gigabyte GeForce GTX 1080 G1 Gaming (GV-N1080G1 GAMING-8GD) 8 GB / 256 bit, 1696-1835 (1936) / 10008 MHz and overclocked to 1786-1925 (2025) / 11312 MHz;

drives:

for the system and benchmarks: Intel SSD 730 480 GB (SATA III, BIOS vL2010400);

for games and benchmarks: Western Digital VelociRaptor 300 GB (SATA II, 10,000 rpm, 16 MB, NCQ);

for archives: Samsung Ecogreen F4 HD204UI 2 TB (SATA II, 5400 rpm, 32 MB, NCQ);

sound card: Auzentech X-Fi HomeTheater HD;

case: Thermaltake Core X71 (six be quiet! Silent Wings 2 (BL063) fans at 900 rpm: three intake, three exhaust);

control and monitoring panel: Zalman ZM-MFC3;

PSU: Corsair AX1500i Digital ATX (1.5 kW, 80 Plus Titanium), 140 mm fan;

monitor: 27-inch ASUS ROG Swift PG27UQ (DisplayPort, 3840 × 2160, 144 Hz). A brief digression about the monitor used in today’s tests. Here is what the manufacturer says about it:

“The ASUS ROG Swift PG27UQ is a 27-inch 4K / Ultra-HD IPS gaming monitor with a refresh rate of up to 144 Hz. Thanks to quantum dot technology, it has an extended color gamut (DCI-P3), and HDR support means increased contrast, so this monitor delivers incredibly lifelike images with rich colors. A built-in ambient light sensor automatically adjusts the screen brightness to suit the environment. The look of the device can be personalized with Aura Sync lighting and built-in projection elements. The ASUS ROG Swift PG27UQ is the technology of the future, available now!”

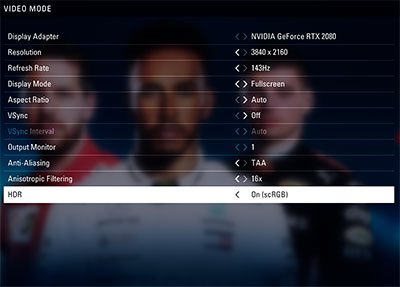

However, no official description can replace personal impressions, and mine turned out to be very rich and vivid, despite my previously rather neutral attitude toward 4K. Temporarily replacing our test Samsung S27A850D (2560 × 1440) with the ASUS ROG Swift PG27UQ and moving to 4K resolution radically changed that impression, as well as my very perception of the picture. The image became so detailed that every game in the test suite was literally transformed. Smoothness at 144 Hz is stunning: if the video card is fast enough (and, I must admit, it is not fast enough everywhere), the gameplay is a real pleasure, and you fully understand the phrase “immersion in the game”. Make no mistake, I am not exaggerating or advertising anything here: this is simply a description of my personal impressions, nothing more. At the same time, scaling of some 2D applications at 4K does not always work correctly, and fonts are not always sharp. In addition, HDR is still hard on the eyes, and playing in this mode for long periods takes some getting used to.

To reduce the dependence of video card performance on platform speed, the 14 nm ten-core processor was overclocked to 4.4 GHz (multiplier 44, reference frequency 100 MHz), with its voltage raised to 1.113 V in the motherboard BIOS and Load-Line Calibration set to level four.

At the same time, 16 GB of DDR4 RAM operated in quad-channel mode at 2.933 GHz with 15-16-16-32 CR1 timings at 1.31 V.

The NVIDIA GeForce RTX 2080 Founders Edition is compared with the non-reference Gigabyte GeForce GTX 1080 G1 Gaming, both at nominal frequencies (which give the Gigabyte card a 3-5% advantage over the reference NVIDIA GeForce GTX 1080) and when overclocked.

Unfortunately, we cannot compare the new card with the GeForce GTX 1080 Ti, as we do not have that model. We should add that the power and temperature limits on both video cards were raised to the maximum possible values, and in the GeForce driver settings the power management mode was switched from optimal power to maximum performance. Testing, which began on October 15, 2018, was conducted under Microsoft Windows 10 Pro (1803 17134.345) with all updates as of that date and with the following drivers installed: motherboard chipset: Intel Chipset Drivers – 10.1.17809.8096 WHQL dated 10/12/2018; Intel Management Engine Interface (MEI) – 12.0.1162 WHQL dated 10/15/2018;

drivers for video cards on NVIDIA GPUs – GeForce 416.34 WHQL from 10/11/2018.

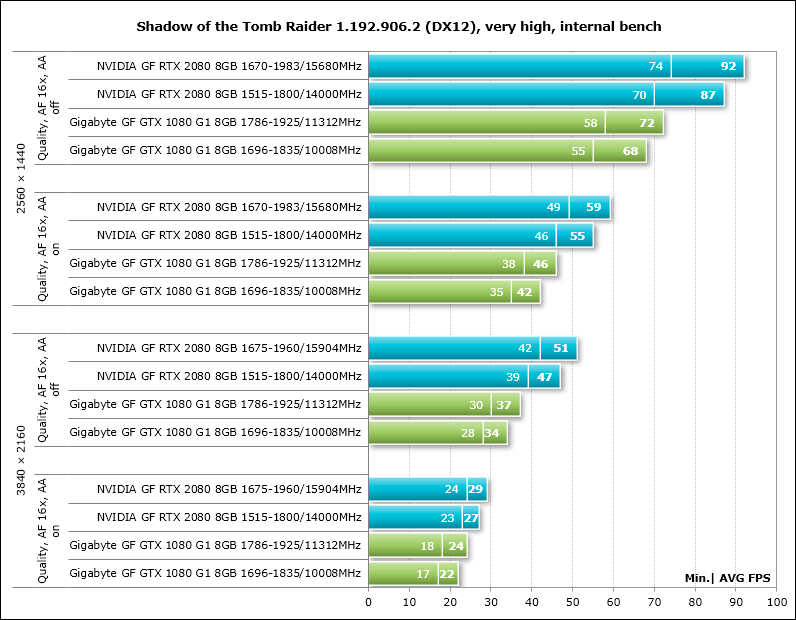

In today’s testing, we used resolutions of 2560 × 1440 and 3840 × 2160 (4K) pixels and two graphics quality modes: Quality + AF16x, with default driver texture quality and 16x anisotropic filtering, and Quality + AF16x + MSAA 4x, which adds 4x MSAA full-screen anti-aliasing. In some games, due to the specifics of their engines, other anti-aliasing algorithms were used; these are indicated in the methodology below and directly on the charts. Anisotropic filtering and full-screen anti-aliasing were enabled in the game settings. Where games lacked these settings, the parameters were changed in the GeForce driver control panel, where V-Sync was also forcibly disabled.

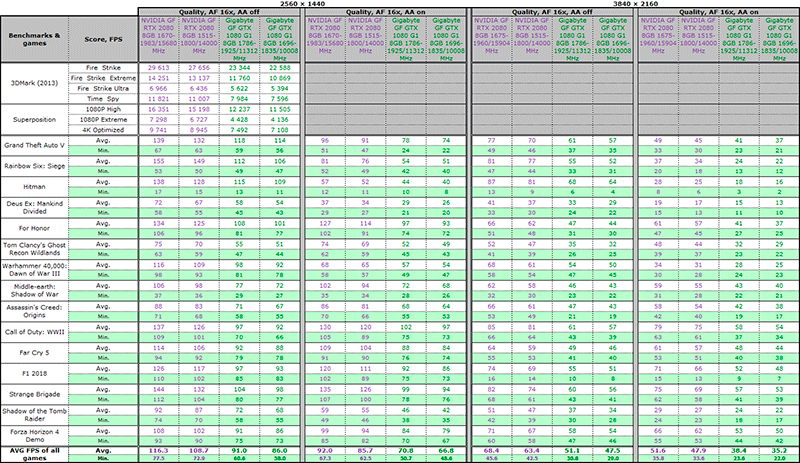

The video cards were tested in two graphics benchmarks and fifteen games, all updated to the latest versions available when preparation of this material began (this time, updates were installed for every game without exception). Compared with our previous video card article, the test suite has been substantially revised. First, we dropped the older Metro: Last Light, Rise of the Tomb Raider and F1 2017, as well as the World of Tanks enCore test, which is not demanding enough for the video cards tested today. In their place, we added the new F1 2018, Strange Brigade, Shadow of the Tomb Raider and Forza Horizon 4 Demo. The list of test applications now looks like this (games, and their test results below, are listed in order of official release):

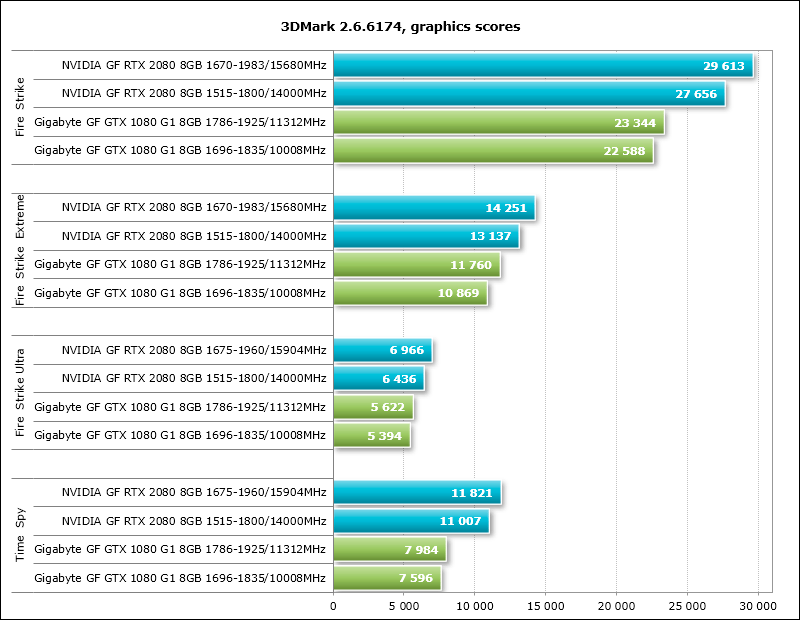

3DMark (DirectX 9/11/12) – version 2.6.6174, tested in the Fire Strike, Fire Strike Extreme, Fire Strike Ultra and Time Spy scenes (the chart shows the Graphics score);

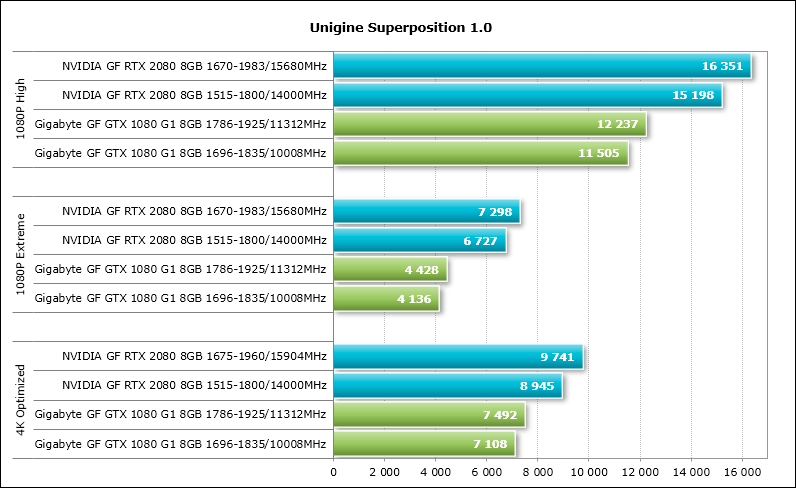

Unigine Superposition (DirectX 11) – version 1.0, tested with the 1080p High, 1080p Extreme and 4K Optimized presets;

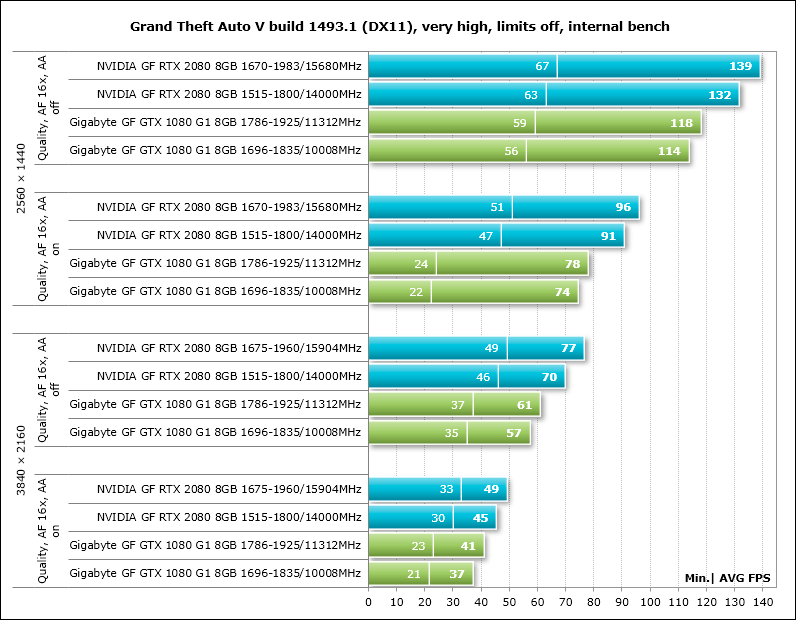

Grand Theft Auto V (DirectX 11) – build 1493.1, quality settings at Very High, Ignore Suggested Limits enabled, V-Sync disabled, FXAA enabled, NVIDIA TXAA enabled, MSAA for reflections enabled, NVIDIA soft shadows;

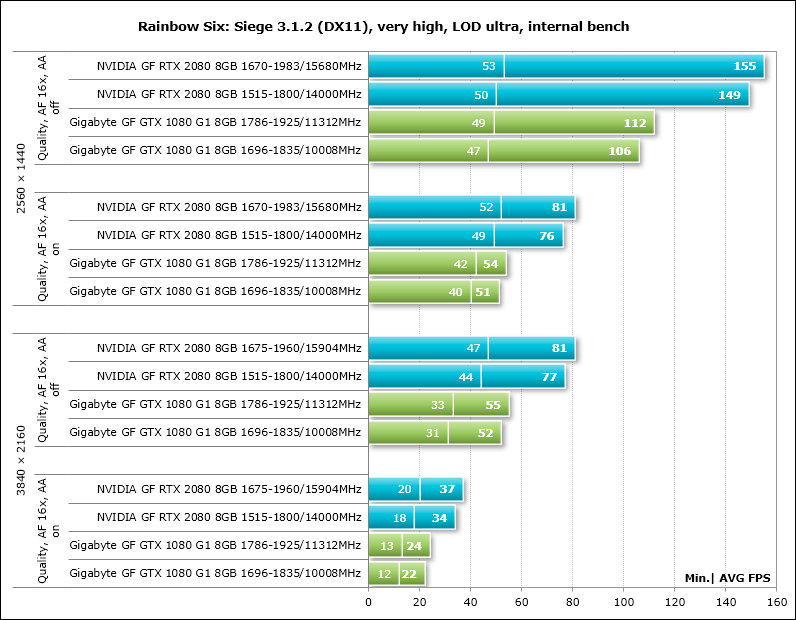

Tom Clancy’s Rainbow Six: Siege (DirectX 11) – version 3.1.2, texture quality at Very High, Texture Filtering – Anisotropic 16X and other quality settings at maximum, tests with MSAA 4x and without anti-aliasing, two consecutive runs of the built-in benchmark;

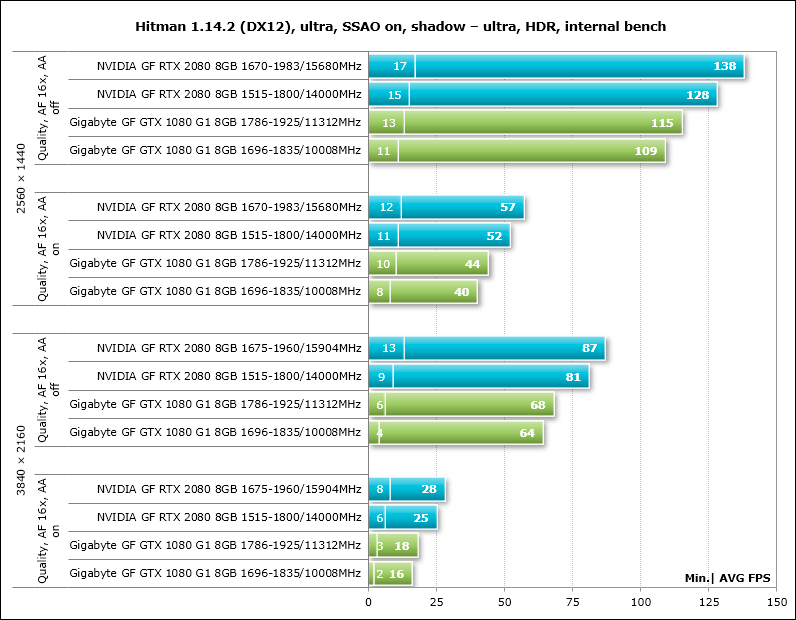

Hitman (DirectX 12) – version 1.14.2, built-in test with graphics quality settings at the “Ultra” level with HDR, SSAO enabled, shadow quality “Ultra”, memory protection disabled;

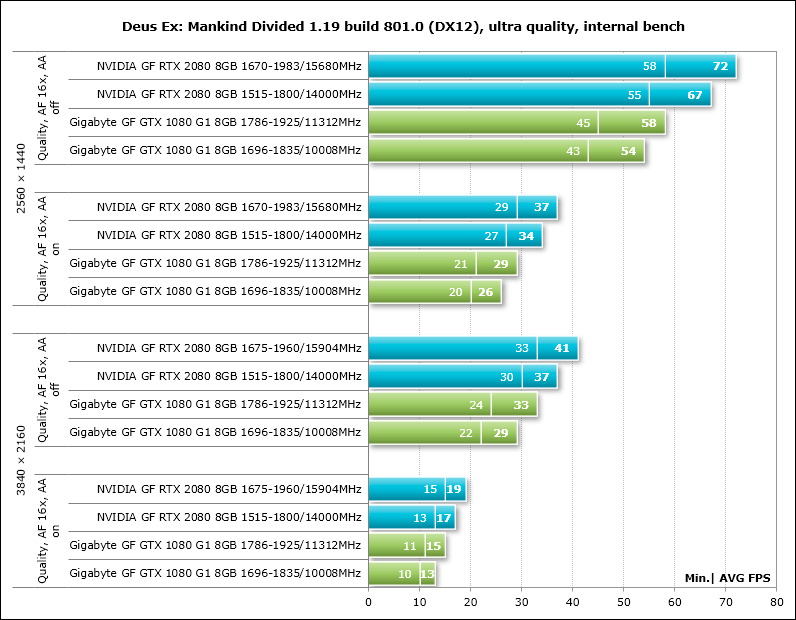

Deus Ex: Mankind Divided (DirectX 12) – version 1.19 build 801.0, all quality settings are manually set to the maximum level, tessellation and depth of field are activated, at least two consecutive runs of the benchmark built into the game;

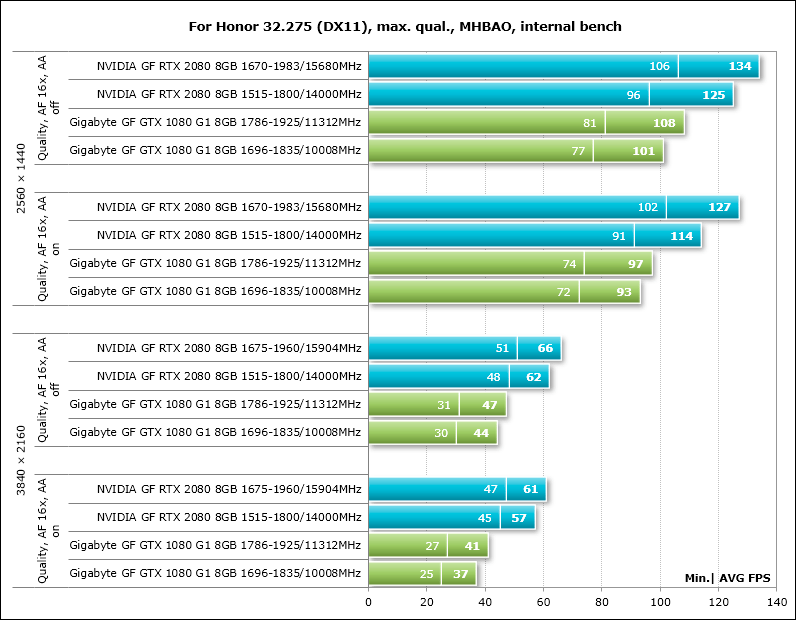

For Honor (DirectX 11) – version 32.275, maximum graphics quality settings, ambient occlusion – MHBAO, dynamic reflections and blur effect enabled, anti-aliasing oversampling disabled, tests without anti-aliasing and with TAA, two consecutive runs of the built-in benchmark;

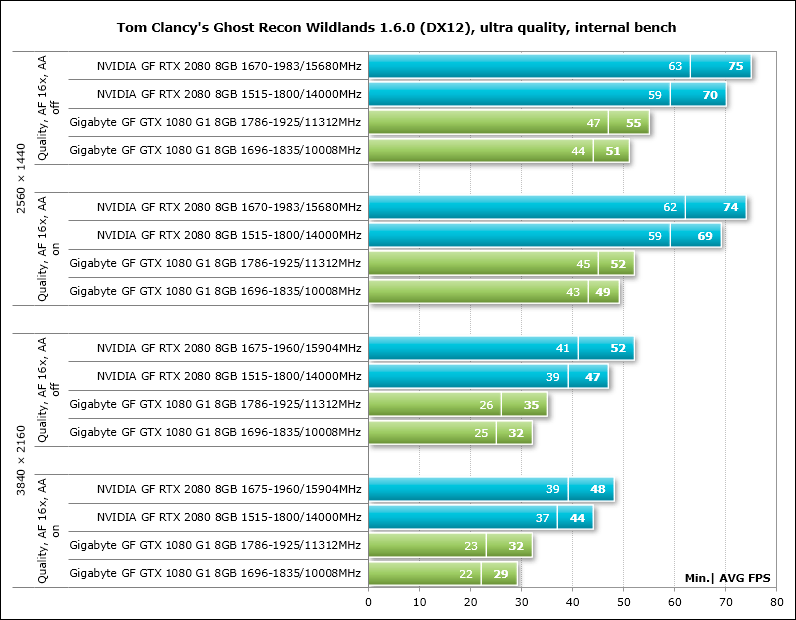

Tom Clancy’s Ghost Recon Wildlands (DirectX 12) – version 1.6.0, graphics quality settings at maximum or Ultra, all options enabled, tests without anti-aliasing and with SMAA + FXAA, two consecutive runs of the built-in benchmark;

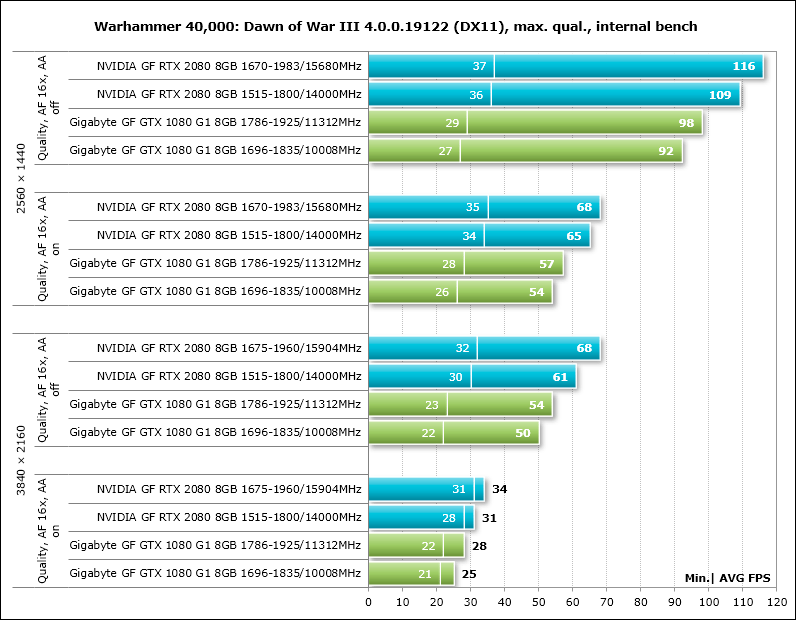

Warhammer 40,000: Dawn of War III (DirectX 11) – version 4.0.0.19122, all graphics quality settings at maximum, anti-aliasing enabled, and in the anti-aliasing mode resolution scaling was also raised to 150%, two consecutive runs of the built-in benchmark;

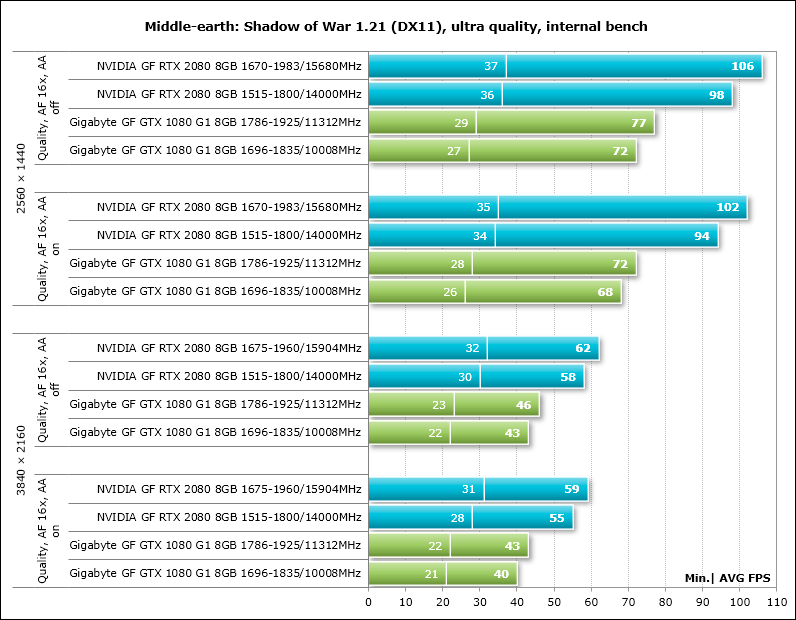

Middle-earth: Shadow of War (DirectX 11.1) – version 1.21, all graphics quality settings at Ultra, depth of field and tessellation enabled, two consecutive runs of the built-in test without anti-aliasing and with TAA;

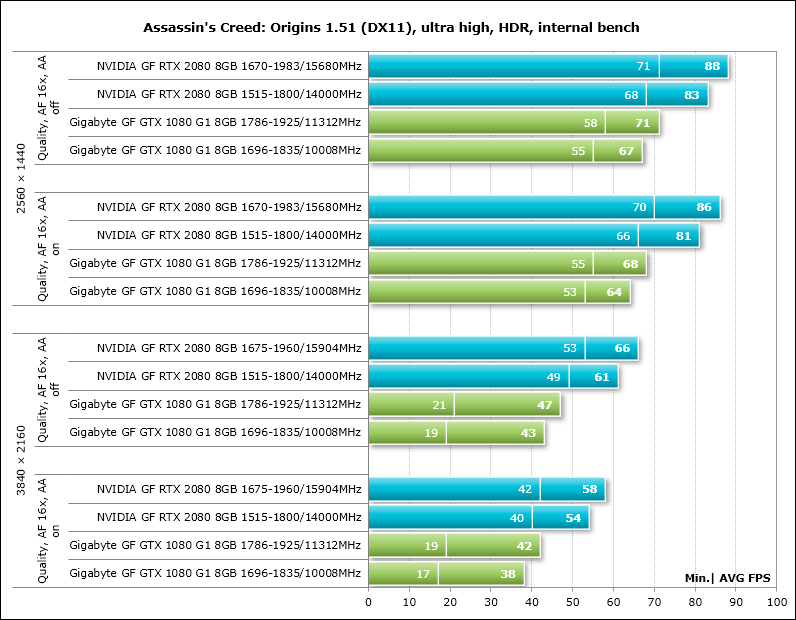

Assassin’s Creed: Origins (DirectX 11) – version 1.51, the built-in game benchmark was used with the Ultra High settings profile and dynamic resolution disabled, HDR enabled, double test run without anti-aliasing and with anti-aliasing at High;

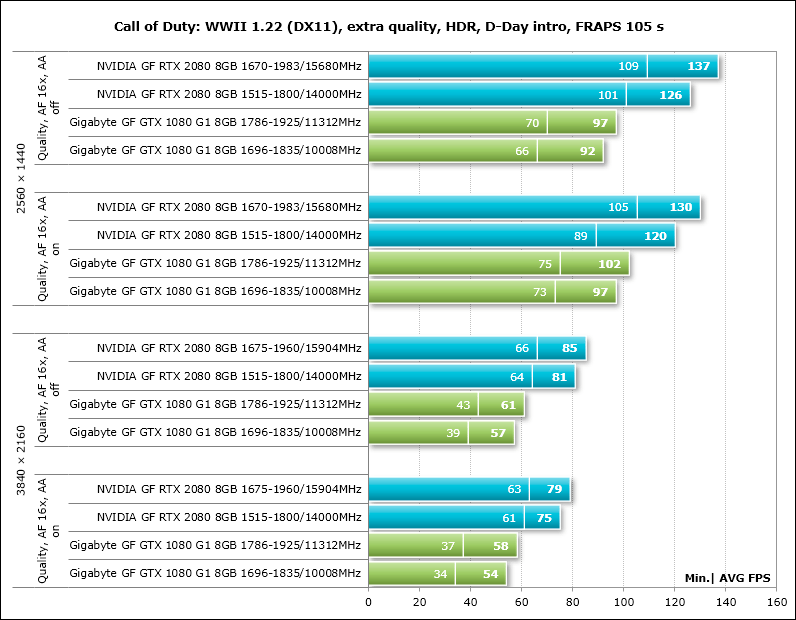

Call of Duty: WWII (DirectX 11) – version 1.22, all graphics quality settings at Extra with HDR, shadows enabled, tests without anti-aliasing and with SMAA T2X, two runs of the opening scene of the first mission, D-Day, measured with FRAPS over 105 seconds;

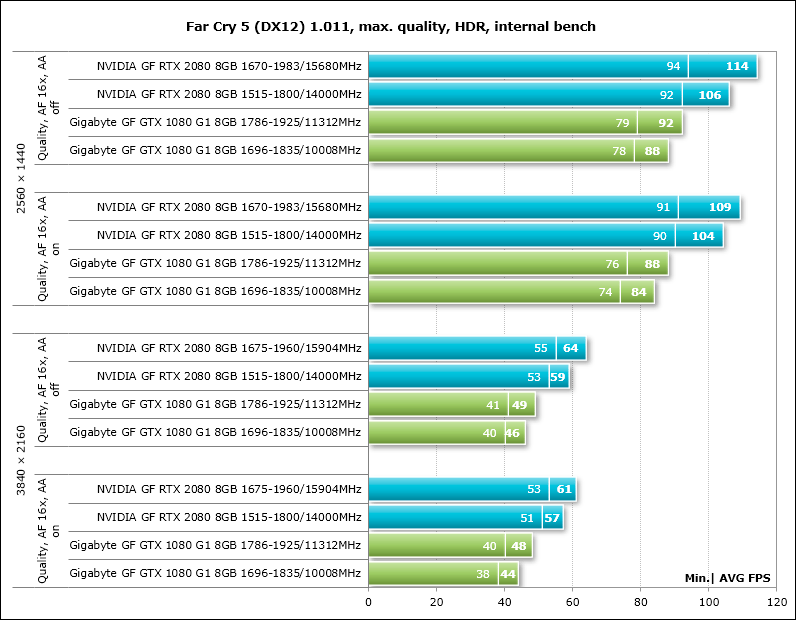

Far Cry 5 (DirectX 11) – version 1.011, maximum quality level with HDR enabled, volumetric fog and shadows at maximum, motion blur enabled, built-in performance test without anti-aliasing and with TAA;

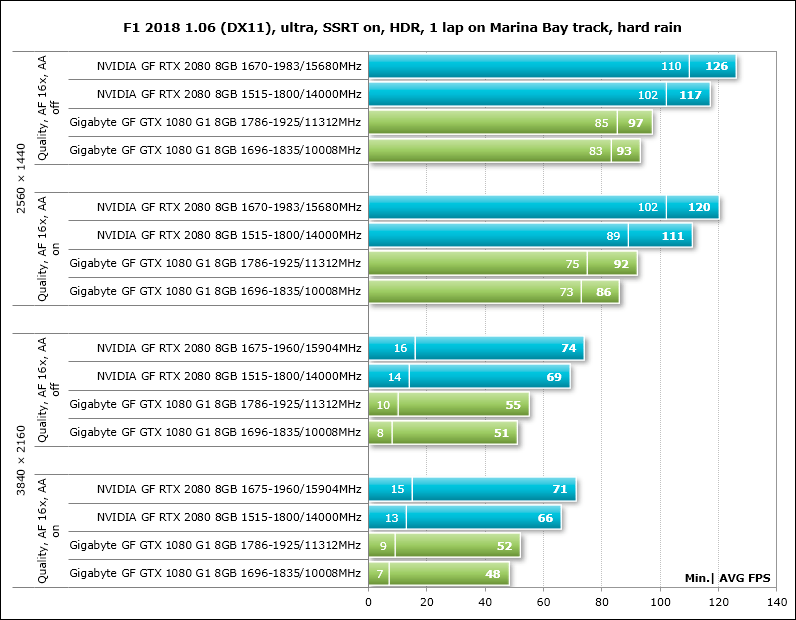

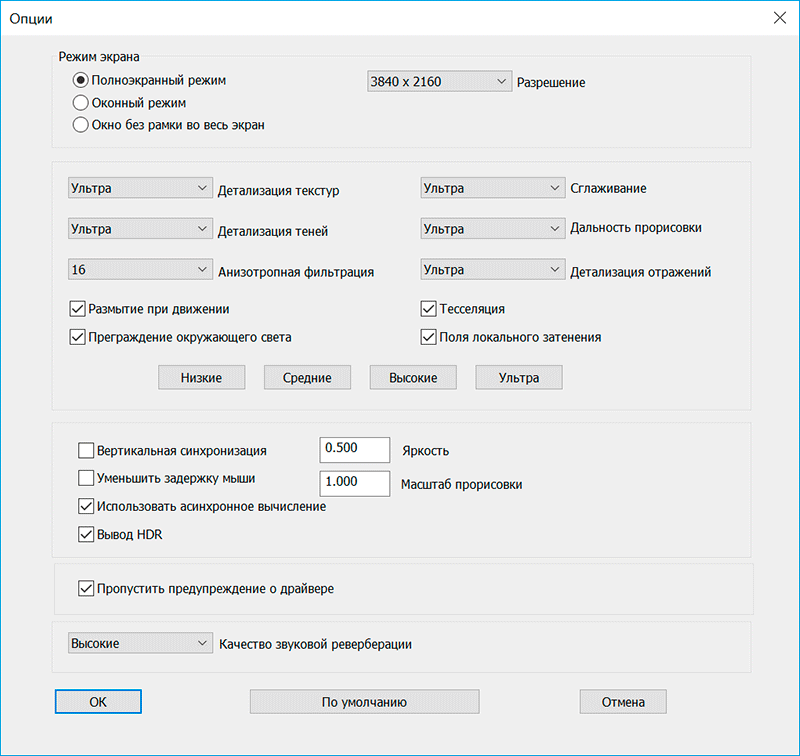

F1 2018 (DirectX 11) – version 1.06, built-in test on the Marina Bay circuit in Singapore in heavy rain, all graphics quality settings at maximum with HDR and SSRT shadows enabled, tests with TAA and without anti-aliasing;

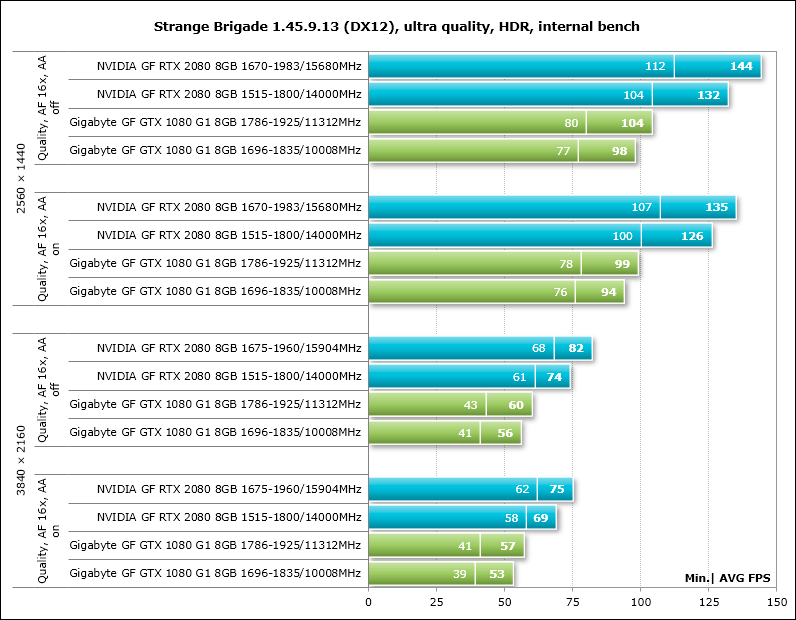

Strange Brigade (DirectX 12) – version 1.45.9.13, Ultra settings, HDR and other quality-enhancing techniques enabled, asynchronous compute enabled, two consecutive runs of the built-in benchmark without and with anti-aliasing;

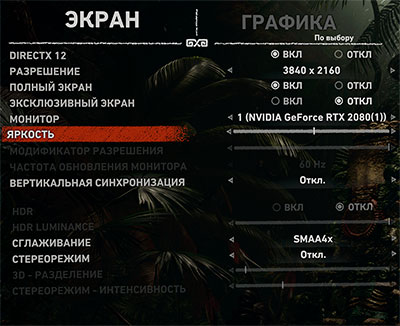

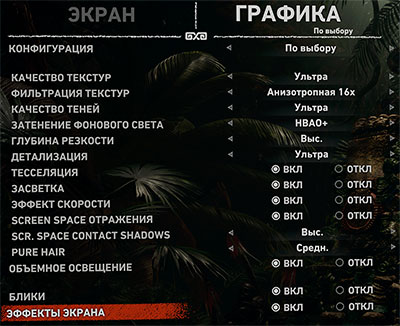

Shadow of the Tomb Raider (DirectX 12) – version 1.0 build 235.5, all parameters to the Ultra level, Ambient Occlusion – HBAO +, tessellation and other quality improvement techniques are activated, two test cycles of the built-in benchmark without anti-aliasing and with SMAA4x activation;

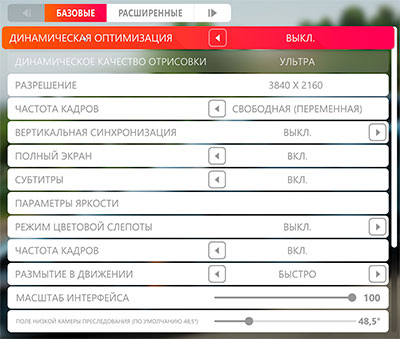

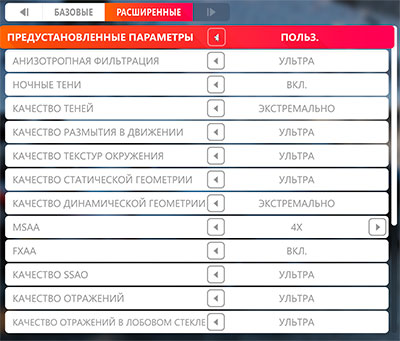

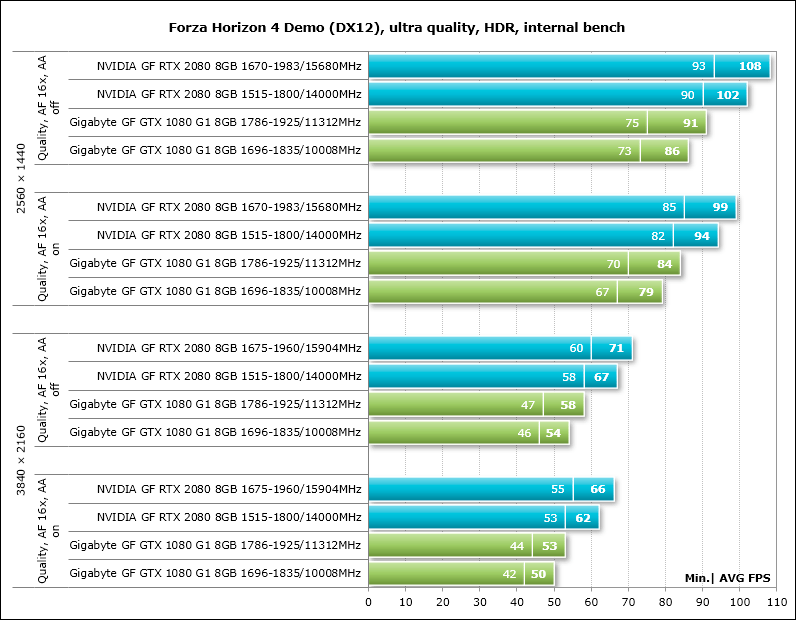

Forza Horizon 4 Demo (DirectX 12) – version 1.192.906.2, all parameters to Ultra or Extreme, HDR activated, built-in benchmark without anti-aliasing and with MSAA4x activation.

Note also that where games can record the minimum frame rate, it is shown in the charts as well. Each test was run twice, and the better of the two results was taken as final, but only if the two differed by no more than 1%. If the runs deviated by more than 1%, testing was repeated at least once more to obtain a reliable result.
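The run-acceptance rule above can be written as a small loop. This is only one possible reading of it, assuming that the "difference" is the relative gap between two runs and that, when runs disagree, each new run is compared with the one before it. `run_benchmark` is a hypothetical callable returning an fps value, and the cap of six runs is an assumption.

```python
from typing import Callable


def best_consistent_result(
    run_benchmark: Callable[[], float],
    tolerance: float = 0.01,
    max_runs: int = 6,
) -> float:
    """Repeat a benchmark until the two most recent runs differ by at most
    `tolerance` (relative to the larger value), then return the better one.

    Assumes positive results such as average fps.
    """
    previous = run_benchmark()
    for _ in range(max_runs - 1):
        current = run_benchmark()
        spread = abs(current - previous) / max(current, previous)
        if spread <= tolerance:
            return max(current, previous)
        previous = current  # runs disagree: compare the next run with this one
    raise RuntimeError(f"no two consecutive runs agreed within {tolerance:.0%}")


if __name__ == "__main__":
    results = iter([100.0, 97.0, 97.5])  # hypothetical fps values
    print(best_consistent_result(lambda: next(results)))
```

With hypothetical results of 100, 97 and 97.5 fps, the first pair differs by 3%, so a third run is made. The 97.5 fps result is then accepted because it is within 1% of 97 fps.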

Performance test results

3DMark

Unigine Superposition

Grand Theft Auto V

Tom Clancy’s Rainbow Six: Siege

Hitman

Deus Ex: Mankind Divided

For Honor

Tom Clancy’s Ghost Recon Wildlands

Warhammer 40,000: Dawn of War III

Middle-earth: Shadow of War

Assassin’s Creed: Origins

Call of Duty: WWII

Far Cry 5

F1 2018

Since we are testing F1 2018 and the next three games for the first time, we show below the settings used in today’s test, which we will also use in future articles.

Now the chart with the test results.

Strange Brigade

Shadow of the Tomb Raider

Forza Horizon 4 Demo

Let’s supplement the charts with a summary table of test results showing the average and minimum frames per second for each video card.

We will analyze the results using summary charts.

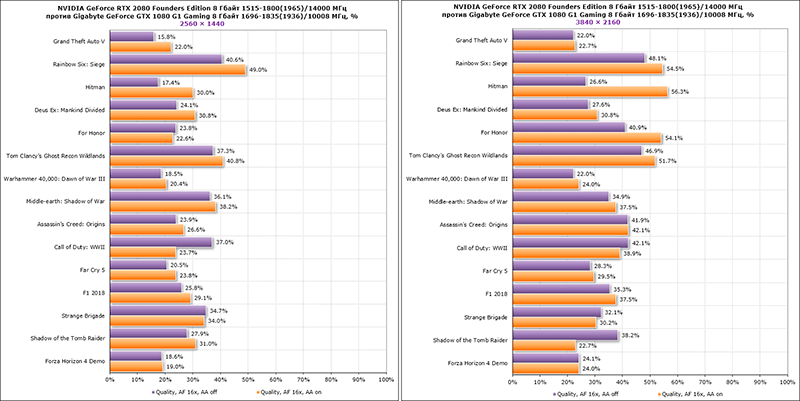

Summary charts and analysis of results

In the first pair of summary charts, we compare the performance of the NVIDIA GeForce RTX 2080 Founders Edition with the Gigabyte GeForce GTX 1080 G1 Gaming at the nominal frequencies of both cards. The GeForce GTX 1080 results are taken as the baseline, and the GeForce RTX 2080 results are expressed as a percentage of it.

At 2560 × 1440 pixels, the new card outperforms its predecessor by an average of 26.8-29.4% across all games. The smallest advantage, 15.6%, is in Grand Theft Auto V, and the largest, 49%, in Rainbow Six: Siege. However, the RTX 2080 shows its full potential at 3840 × 2160 pixels, or 4K as it is commonly called. Here, the NVIDIA GeForce RTX 2080 Founders Edition averages a 34.1-37.1% gain across all games, peaking at 56.3% in the most demanding Hitman mode. Since the clock speeds of the compared cards differ only slightly, the Turing architecture is clearly considerably more efficient than Pascal.
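The normalization used in the summary charts (GeForce GTX 1080 = 100%) and the averaging across games can be sketched in Python. The fps values below are hypothetical placeholders, not the measured results. The article does not state how its averages are computed, so the plain arithmetic mean of per-game percentages used here is an assumption.

```python
from statistics import mean
from typing import Dict


def relative_percent(new_fps: float, baseline_fps: float) -> float:
    """Result of the new card as a percentage of the baseline card (baseline = 100%)."""
    return new_fps / baseline_fps * 100


def average_gain(new: Dict[str, float], baseline: Dict[str, float]) -> float:
    """Mean per-game advantage over the baseline, in percent (arithmetic mean assumed)."""
    per_game = [relative_percent(new[game], baseline[game]) for game in baseline]
    return mean(per_game) - 100


# Hypothetical average fps, NOT the results measured in this article.
gtx_1080 = {"Game A": 60.0, "Game B": 80.0}
rtx_2080 = {"Game A": 75.0, "Game B": 96.0}

for game in gtx_1080:
    print(f"{game}: {relative_percent(rtx_2080[game], gtx_1080[game]):.1f}%")
print(f"average advantage: +{average_gain(rtx_2080, gtx_1080):.1f}%")
```

A geometric mean of the per-game ratios is a common alternative. It is less influenced by a single large outlier, such as the 49% Rainbow Six: Siege result.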

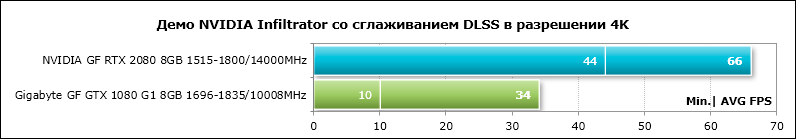

Moreover, in upcoming games the advantage of the GeForce RTX 2080 over the GeForce GTX 1080 will be twofold or more. One example is the NVIDIA Infiltrator demo on Unreal Engine 4 at 4K, where the RTX card uses the new DLSS anti-aliasing technology and the GTX card uses TAA.

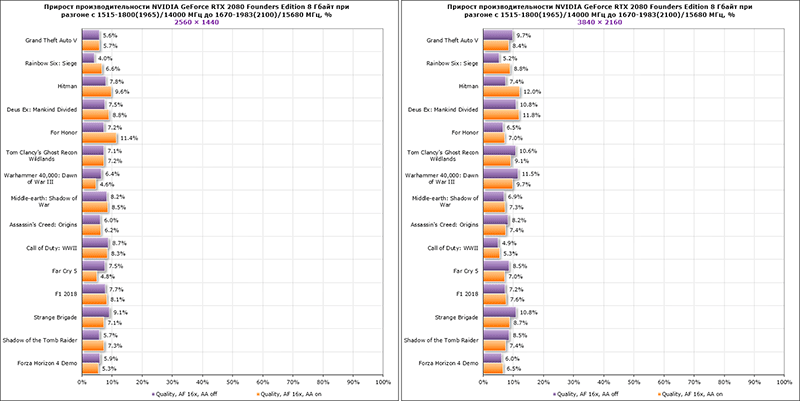

As you can see, the new video card is almost twice as fast as its predecessor, with no loss in image quality whatsoever.

In the second pair of summary charts, we estimate the performance gain from overclocking the NVIDIA GeForce RTX 2080 Founders Edition GPU by 10.2% and its video memory by 12.0%.

The overclocked video card was 7.0-7.3% faster than at stock at 2560 × 1440 pixels and 8.2-8.3% faster at 3840 × 2160 pixels.

Power consumption

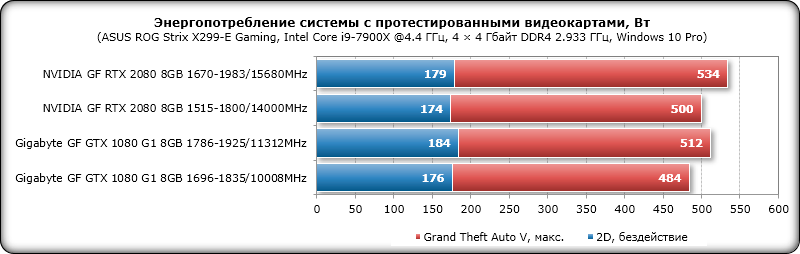

Power consumption was measured with the Corsair AX1500i power supply via the Corsair Link interface and the HWiNFO64 monitoring utility, version 5.90-3550. We measured the consumption of the entire system, excluding the monitor. Testing was carried out in 2D mode, during ordinary work in Microsoft Word or web browsing, and in 3D mode. In 3D mode, the load was created with two consecutive runs of the Grand Theft Auto V benchmark at 2560 × 1440 pixels with maximum graphics quality settings and MSAA 4X. CPU power-saving technologies were disabled in the motherboard BIOS. Let’s compare the power consumption of the systems with the video cards tested today using the chart.

The system with the NVIDIA GeForce RTX 2080 Founders Edition surprised us with a very small increase in power consumption compared with the Gigabyte GeForce GTX 1080 G1 Gaming configuration. Judging by the video cards’ specifications, the difference in maximum power consumption should be about 55 watts, but in practice it was no more than 20 watts. Overclocking added only 34 watts, and peak system consumption did not exceed 550 watts. Given that we test on a fairly powerful and expensive configuration (and overclocked at that), NVIDIA appears to have been overcautious in recommending a 650-watt power supply for a system with the new GeForce RTX 2080.
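The power figures above reduce to simple arithmetic on logged readings. The sketch below summarizes a series of system power samples and expresses a peak value as a share of a power supply’s rating. The sample values are hypothetical, not data from this test. Treating whole-system draw as the load on the PSU is also a simplification.

```python
from statistics import mean
from typing import Sequence, Tuple


def summarize_power(samples_w: Sequence[float]) -> Tuple[float, float]:
    """Return (average, peak) system power draw from readings in watts."""
    if not samples_w:
        raise ValueError("no power samples")
    return mean(samples_w), max(samples_w)


def psu_load_percent(peak_w: float, psu_rating_w: float) -> float:
    """Peak system draw as a percentage of the PSU's rated capacity."""
    return peak_w / psu_rating_w * 100


# Hypothetical 3D-load readings for two configurations (NOT measured data).
log_rtx = [410.0, 425.0, 431.0, 428.0]
log_gtx = [395.0, 409.0, 414.0, 412.0]

avg_rtx, peak_rtx = summarize_power(log_rtx)
avg_gtx, peak_gtx = summarize_power(log_gtx)
print(f"average: {avg_rtx:.1f} W vs {avg_gtx:.1f} W, peak difference: {peak_rtx - peak_gtx:.0f} W")

# The 550 W overclocked peak from the text against NVIDIA's 650 W recommendation.
print(f"load on a 650 W unit: {psu_load_percent(550, 650):.1f}%")
```

Using the 550 W peak reported above, a 650 W unit would run at roughly 85% of its rating, leaving about 100 W of headroom.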

Conclusion

In our opinion, the only problem with the NVIDIA GeForce RTX 2080 Founders Edition is its announced price, which is much higher than the recommended price of the GeForce GTX 1080. Had NVIDIA released the new line of video cards at the prices of the old one, the GeForce RTX 2080 would have been an unconditional hit and, quite possibly, a best seller. But this did not happen, for reasons we discussed in the article, to which we add the complete lack of competition in the flagship segment. So if gamers want all the new technologies and a reserve for the future, there is simply no choice today. NVIDIA’s desire to recoup the funds invested in developing Turing and to make money is quite understandable.

Leaving pricing aside, we must admit that the NVIDIA GeForce RTX 2080 is a breakthrough video card. In today’s games it already shows a significant performance gain over its predecessor, the GeForce GTX 1080, and in future games this advantage will multiply. The NVIDIA Turing architecture and the graphics cards based on it have brought ray tracing and “free” HDR, tensor cores and DLSS anti-aliasing, mesh shaders, GDDR6 and high-speed NVLink, USB 3.1 Gen 2 and VirtualLink to the consumer graphics segment. Along with higher frequencies, the reference cards now come with a more efficient cooling system that stays comfortably quiet even under 3D load. And let’s not forget the fully automatic GPU overclocking available only on GeForce RTX.