Review of the AMD Radeon RX Vega 64: Gaming Benchmarks, Power Consumption and Specs

The AMD Radeon RX Vega 64 was announced in mid-August 2017, but if you follow graphics card news closely, you will have noticed that custom (non-reference) versions of this model only began to reach the market in late November or early December.

The reasons are not only the GPU shortage and the ongoing cryptocurrency boom, but also the limited production volumes of HBM2 memory and the difficulty of integrating it. As a result, buyers could only start choosing among custom versions about a quarter after launch. Meanwhile, the reference models are not going to disappear from the market, so why not refresh our memory of how such a card performs in testing and how it stacks up against its competitors?

1. Review of the AMD Radeon RX Vega 64 8 GB video card

Technical specifications and recommended price

The technical specifications and price of the AMD Radeon RX Vega 64 are shown in the table in comparison with the reference AMD Radeon R9 Fury X and Radeon RX 580.

Packaging and bundle

For testing, AMD loaned us its branded AMD Radeon RX Vega 64 kit, which consists of two boxes. The large one contains the video card itself, and the small one holds various printed materials and souvenirs.

These include a quick start guide, stickers, a silicone wristband and a keepsake in the form of a standalone Vega 10 XT GPU.

The minimum price of a reference card in foreign online stores starts at 535 euros; however, such cards are often out of stock, whereas a liquid-cooled Radeon RX Vega 64 can be bought for 700 euros. In Russia the situation is not much better: these cards now cost almost 50 thousand rubles or more, if they can be found at all. Let’s hope that prices will start to improve as custom Radeon RX Vega 64 models gradually fill the market.

PCB design and features

Externally, the reference AMD Radeon RX Vega 64 looks very simple, following what are by now classic conventions: a rectangular box enclosed on all sides in plastic and metal, with the blower fan and the card’s name visible on the front of the shroud.

On the back there is a stiffening plate behind the GPU area, as well as a small backlight switch. There is nothing of interest on the bottom edge, while the top edge carries the same lettering as the front of the shroud, two eight-pin auxiliary power connectors and a small switch.

This switch selects which BIOS the card boots from; one of the two BIOS images on the reference AMD Radeon RX Vega 64 has lower power limits.

The card measures 269 x 102 x 40 mm and weighs 1084 grams. Its output panel carries four video outputs: one HDMI 2.0b and three DisplayPort 1.4.

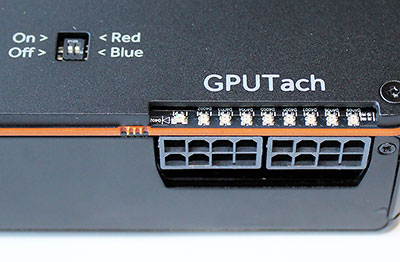

As you can see, the rest of this panel is perforated with slots through which the air heated by the card is exhausted outside the case. Auxiliary power is supplied through two eight-pin connectors, above which sits a row of AMD GPU Tach LEDs.

These LEDs show the card’s load level in real time, and their color can be changed with two adjacent switches. The rated power consumption of the AMD Radeon RX Vega 64 reaches 295 watts (up to 345 watts for the Liquid Cooled Edition), and a system with one such card should have a power supply of at least 750 watts. Let’s take a look at the PCB of the reference AMD Radeon RX Vega 64.

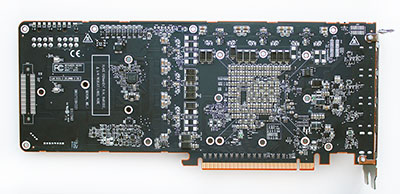

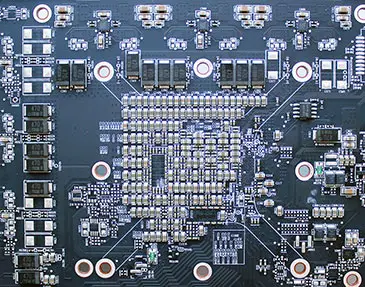

The power delivery circuitry is located mainly on the front of the PCB, supplemented by solid capacitors on the back. Of its thirteen phases, twelve power the GPU and one powers the video memory, which shares the GPU’s package substrate.

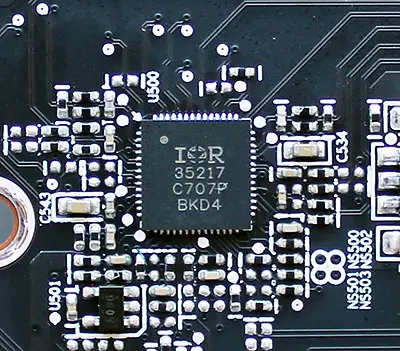

GPU power is managed by an Infineon IR35217 controller.

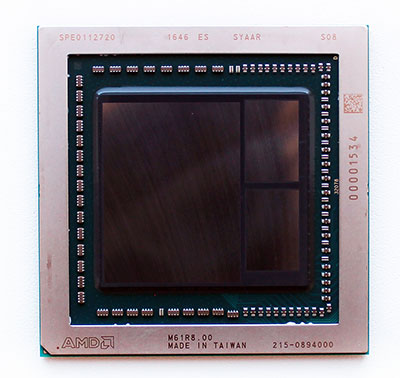

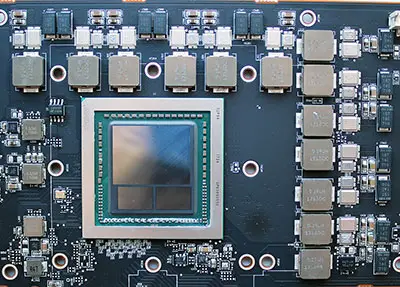

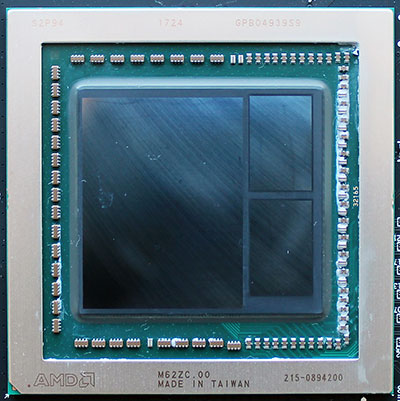

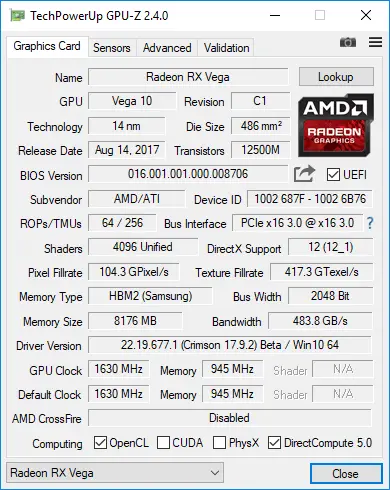

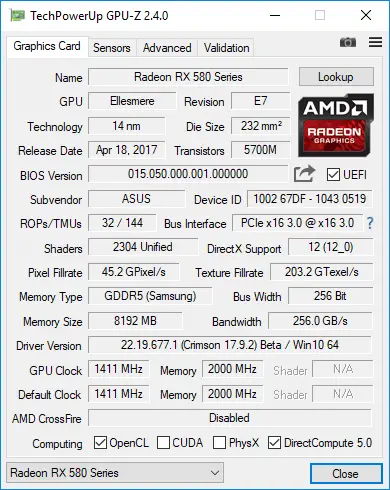

The 14 nm Vega 10 XT GPU is built on the NCU (Next-generation Compute Unit) architecture, also referred to as GCN 1.4, and has a die area of 486 mm². Our sample was produced in week 24 of 2017 (mid-June).

Recall that the GPU has 4096 unified shader processors, 256 texture units and 64 ROPs. The AMD Radeon R9 Fury X has the same figures, but its Fiji XT GPU uses the older GCN 1.2 architecture, has fewer transistors and is made on a 28 nm process. In 3D mode the GPU is rated for 1247 to 1546 MHz, and with raised power limits and low temperatures it can reach 1630 MHz, an impressive clock for an AMD GPU. We were also impressed by how far the core clock drops in 2D mode: down to 27 MHz.
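The shader, texture-unit and ROP counts above, combined with the rated 3D clocks, give the card’s theoretical peak rates. The sketch below is a back-of-the-envelope calculation, not a result from our tests; it assumes the common convention of two FP32 operations (one fused multiply-add) per shader per clock and one texel or pixel per texture unit or ROP per clock. The function name is our own.

```python
def peak_rates(shaders: int, tmus: int, rops: int, clock_mhz: float) -> dict:
    """Theoretical peak throughput at a given core clock.

    Assumes one FMA (2 FP32 FLOPs) per shader per clock and one texel/pixel
    per texture unit/ROP per clock; real-world throughput is always lower.
    """
    hz = clock_mhz * 1e6
    return {
        "fp32_tflops": shaders * 2 * hz / 1e12,
        "texels_g_per_s": tmus * hz / 1e9,
        "pixels_g_per_s": rops * hz / 1e9,
    }


# Vega 10 XT: 4096 shaders, 256 texture units, 64 ROPs; rated 1247-1546 MHz in 3D
for clock_mhz in (1247, 1546):
    rates = peak_rates(4096, 256, 64, clock_mhz)
    print(clock_mhz, {name: round(value, 2) for name, value in rates.items()})
```

At the 1546 MHz upper bound this works out to roughly 12.7 TFLOPS of FP32 compute under the stated assumptions.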

The 8 GB of HBM2 memory consists of two Samsung stacks located on the same substrate as the GPU. Their effective frequency is 1890 MHz, which, with a 2048-bit memory bus, gives the card a bandwidth of 484 GB/s. In 2D mode the memory frequency drops to 334 MHz. Here is how the GPU-Z utility reports the specifications of the AMD Radeon RX Vega 64.

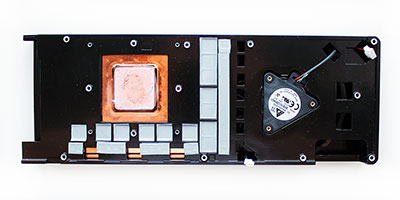

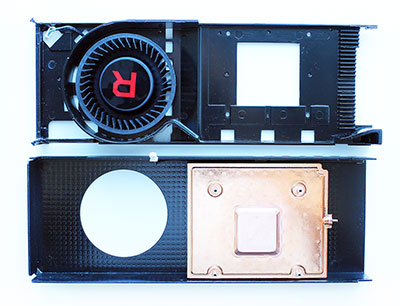

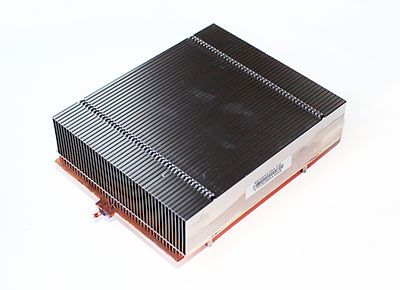
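The 484 GB/s bandwidth figure follows directly from the effective memory frequency and the bus width. A minimal sketch of the arithmetic, assuming decimal gigabytes (10^9 bytes) and treating the effective frequency as millions of transfers per second per pin; for comparison, the same formula is applied to the memory specifications of the other cards listed in the test configuration. The function name is our own.

```python
def memory_bandwidth_gb_s(effective_mhz: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in decimal GB/s.

    effective_mhz is treated as millions of transfers per second per pin,
    and each transfer moves bus_width_bits / 8 bytes.
    """
    transfers_per_s = effective_mhz * 1e6
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_s * bytes_per_transfer / 1e9


# (effective MHz, bus width in bits) as listed in the test configuration
cards = {
    "Radeon RX Vega 64 (HBM2)": (1890, 2048),
    "GeForce GTX 1080 Ti Lightning": (11008, 352),
    "GeForce GTX 1080 G1 Gaming": (10008, 256),
    "Radeon RX 580 Strix": (8000, 256),
}
for name, (mhz, bits) in cards.items():
    print(f"{name}: {memory_bandwidth_gb_s(mhz, bits):.1f} GB/s")
```

For the Vega 64 the result is 483.84 GB/s, which rounds to the quoted 484 GB/s; the wide but slower HBM2 bus ends up on par with the 352-bit GDDR5X bus of the GTX 1080 Ti Lightning.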

Cooling system

The cooler of the reference AMD Radeon RX Vega 64 is as simple as the rest of the card’s design.

Besides the plastic shroud, it consists of three parts: a heatsink with a copper vapor chamber, a metal heat-spreading plate and a radial (blower) fan.

Thin aluminum fins spaced 1.5 mm apart are soldered to the copper base of the vapor chamber. This heatsink dissipates most of the heat, since it cools the GPU and the video memory at the same time.

Air is driven through these fins by a 70 mm blower made by Delta Electronics, model BFB1012SHA01.

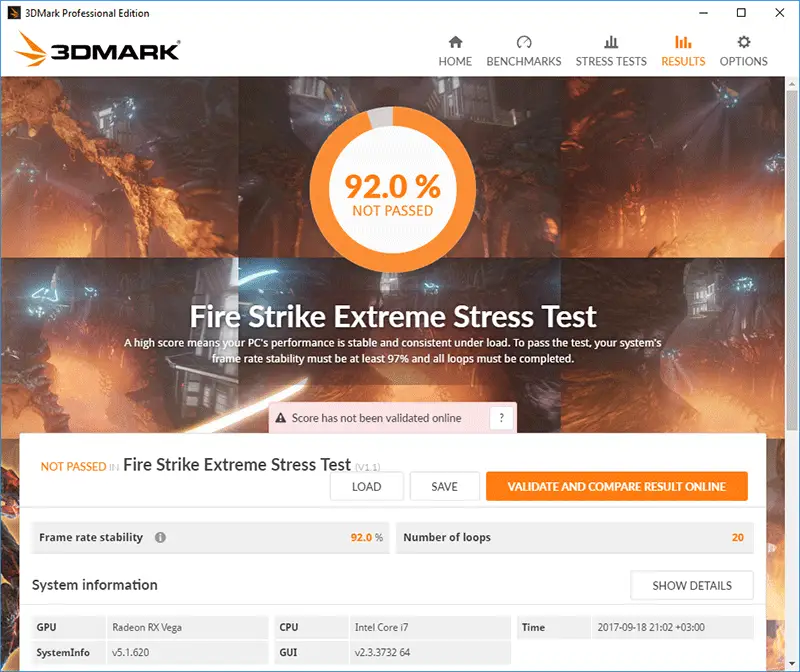

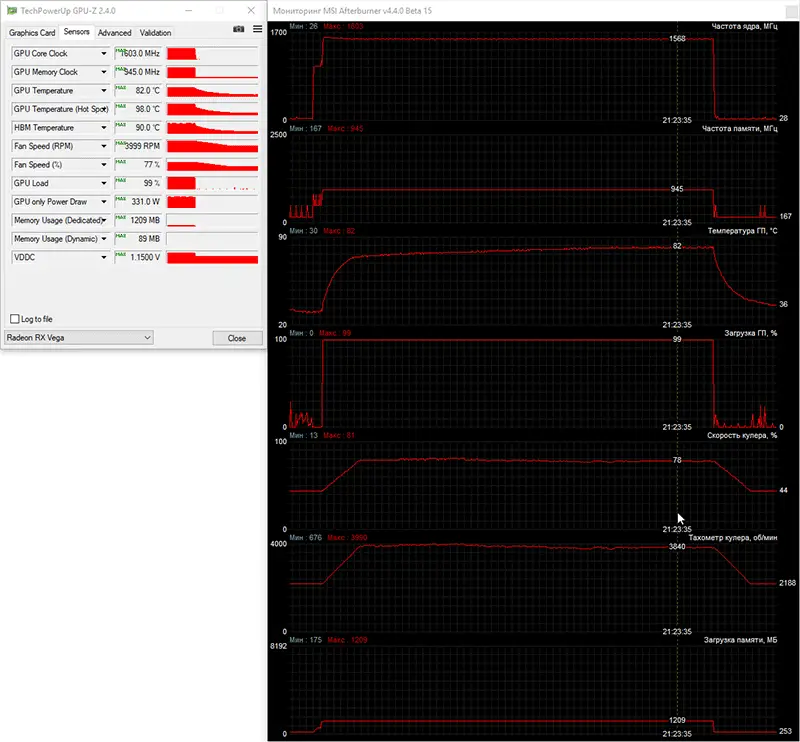

Its speed is controlled by pulse-width modulation (PWM) between 670 and 4000 rpm, according to monitoring data. At full speed the card is brutally loud. To load the card for the thermal tests, we ran nineteen loops of the Fire Strike Extreme stress test from the 3DMark suite.

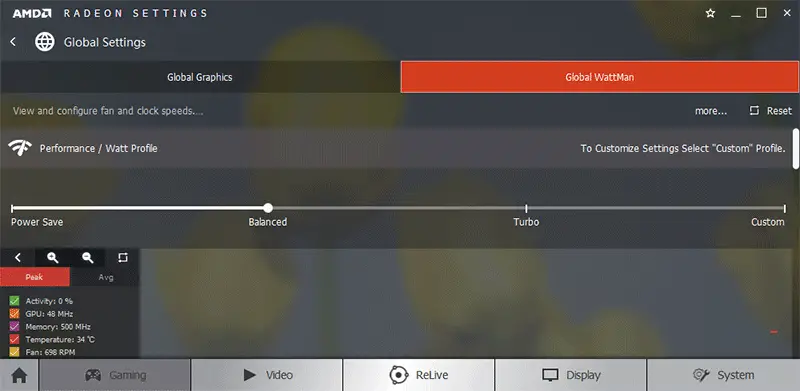

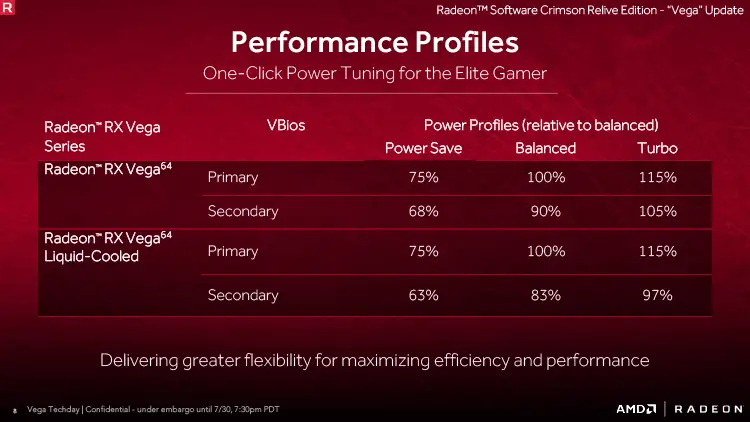

Temperatures and all other parameters were monitored with MSI Afterburner 4.4.0 Beta 15 and GPU-Z 2.4.0. The tests were run in a closed case (its configuration is given in the next section of the article) at a room temperature of about 22.0 degrees Celsius. We tested the thermal behavior of the reference AMD Radeon RX Vega 64 in all three modes available in the AMD Crimson driver.

Their settings profiles are as follows.

Here are the test results in each operating mode, using the primary BIOS.

Let’s go through them one by one. In Power Save mode the card runs at up to 1341 MHz (1258 MHz on average), the GPU heats up to only 75 degrees Celsius and the memory to 82 degrees Celsius, while the blower does not spin up excessively. In Balanced mode, the driver default, the GPU already runs at up to 1488 MHz, averaging around 1441 MHz. Temperatures rise, but not to critical levels: up to 83 degrees Celsius for the GPU and 92 degrees Celsius for the HBM2, and the fan spins 230 rpm faster. Finally, Turbo mode raises the power limit by 15%: the maximum GPU clock rises to 1531 MHz with a 1500 MHz average, all temperatures go up by two degrees Celsius, and the fan speed increases by another 60 rpm.
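To put the three driver profiles in perspective, the average GPU clocks reported above can be compared step by step. A small sketch using only the figures from this section; the helper name is our own.

```python
def percent_gain(new: float, old: float) -> float:
    """Relative change from old to new, in percent."""
    return (new / old - 1.0) * 100.0


# Average GPU clocks (MHz) measured with the primary BIOS, from the text above
avg_clock_mhz = {"Power Save": 1258, "Balanced": 1441, "Turbo": 1500}

modes = list(avg_clock_mhz)
for prev, cur in zip(modes, modes[1:]):
    gain = percent_gain(avg_clock_mhz[cur], avg_clock_mhz[prev])
    print(f"{prev} -> {cur}: {gain:+.1f}% average GPU clock")
```

By this measure, Balanced gains about 14.5% in average clock over Power Save, while Turbo’s 15% higher power limit yields only about 4.1% more average clock than Balanced in our run.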

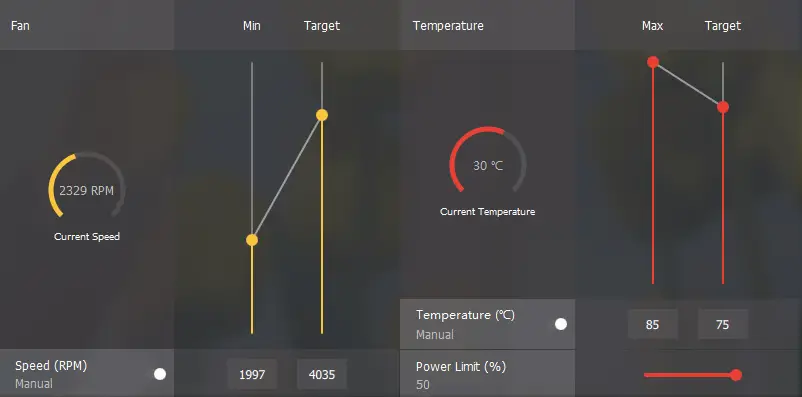

Next, we tried to configure the card for the highest possible GPU clock. To do this, we set the fan to reach its maximum speed as soon as the GPU hits 75 degrees Celsius and raised the Power Limit by 50%.

With these simple adjustments, the GPU briefly ran at 1603 MHz before settling at 1568 MHz.
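A fan curve like the one we configured can be modeled as linear interpolation between (temperature, speed) points. The sketch below is hypothetical: only the 75 degrees Celsius full-speed point and the 670 to 4000 rpm range come from our measurements, the 40 degrees Celsius starting point is an assumption, and the driver’s own curve logic may differ.

```python
def fan_rpm(gpu_temp_c: float,
            curve=((40.0, 670.0), (75.0, 4000.0))) -> float:
    """Fan speed for a GPU temperature on a piecewise-linear curve.

    `curve` holds (temperature C, rpm) points; the 40 C point is a
    hypothetical assumption. Speeds are clamped outside the curve range.
    """
    points = sorted(curve)
    if gpu_temp_c <= points[0][0]:
        return points[0][1]
    for (t0, r0), (t1, r1) in zip(points, points[1:]):
        if gpu_temp_c <= t1:
            # Linear interpolation between the two neighbouring points
            return r0 + (r1 - r0) * (gpu_temp_c - t0) / (t1 - t0)
    return points[-1][1]  # at or above 75 C: maximum speed


for temp in (35, 60, 75, 85):
    print(temp, round(fan_rpm(temp)))
```

Adding intermediate points to `curve` gives a gentler ramp; the clamp at the top reproduces the "full speed from 75 degrees Celsius" behavior described above.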

Of course, in this mode the card is literally unbearably loud, but it lets us estimate the performance that custom Radeon RX Vega 64 versions with very efficient coolers could deliver. Incidentally, enthusiasts have recently taken to undervolting AMD GPUs, which not only lowers temperatures and stabilizes clocks but also reduces cooler noise. You can read about it in this thread or watch the video.

2. Test configuration, tools and testing methodology

The video cards were tested on a system with the following hardware configuration:

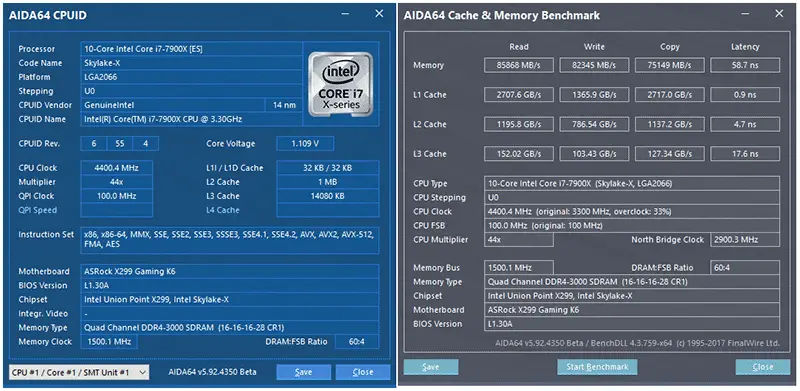

motherboard: ASRock Fatal1ty X299 Gaming K6 (Intel X299 Express, LGA2066, BIOS L1.30A beta from August 15, 2017);

central processor: Intel Core i9-7900X (14 nm, Skylake-X, U0, 3.3-4.5 GHz, 1.1 V, 10 x 1 MB L2, 13.75 MB L3);

CPU cooling system: Noctua NH-D15 (2 NF-A15, 800 ~ 1500 rpm);

thermal interface: ARCTIC MX-4 (8.5 W/(m·K));

RAM: DDR4 4 x 4 GB Corsair Vengeance LPX 2800 MHz (CMK16GX4M4A2800C16) (XMP 2800 MHz / 16-18-18-36_2T / 1.2 V or 3000 MHz / 16-18-18-36_2T / 1.35 V);

video cards:

MSI GeForce GTX 1080 Ti Lightning 11 GB / 352 bit 1481-1582 (1911) / 11008 MHz;

AMD Radeon RX Vega 64 8 GB / 2048 bit 1460-1603 / 1890 MHz;

Gigabyte GeForce GTX 1080 G1 Gaming 8 GB / 256 bit 1696-1835 (1936) / 10008 MHz;

ASUS ROG Strix Radeon RX 580 8 GB / 256 bit 1411/8000 MHz;

disk for system and games: Intel SSD 730 480 GB (SATA III, BIOS vL2010400);

benchmark disk: Western Digital VelociRaptor 300 GB (SATA II, 10,000 rpm, 16 MB, NCQ);

archive disk: Samsung Ecogreen F4 HD204UI 2 TB (SATA II, 5400 rpm, 32 MB, NCQ);

sound card: Auzen X-Fi HomeTheater HD;

case: Thermaltake Core X71 (five be quiet! Silent Wings 2 (BL063) at 900 rpm);

control and monitoring panel: Zalman ZM-MFC3;

PSU: Corsair AX1500i Digital ATX (1500 W, 80 Plus Titanium), 140 mm fan;

monitor: 27-inch Samsung S27A850D (DisplayPort, 2560 x 1440, 60 Hz).

As the top-end reference point for the AMD Radeon RX Vega 64 we use the NVIDIA GeForce GTX 1080 Ti, represented by the custom MSI GeForce GTX 1080 Ti Lightning 11 GB, while the Gigabyte GeForce GTX 1080 G1 Gaming, which is even slightly cheaper than the Vega 64, serves as its direct competitor.

In addition, the ASUS ROG Strix Radeon RX 580 8 GB was included as the model that sits just below the two Vega cards (64/56) in AMD’s current lineup (the Radeon R9 Fury X is hardly available anymore).

Note that the power and temperature limits of all cards were raised to the maximum, the GeForce driver was set to prefer maximum performance, and for the AMD Radeon RX Vega 64 Turbo mode was enabled in the Crimson driver along with HBCC (High Bandwidth Cache Controller). To reduce the dependence of the results on platform speed, the 14 nm ten-core processor was overclocked to 4.4 GHz on all cores simultaneously, using a multiplier of 44 with a 100 MHz base clock, Load-Line Calibration at level 2 and the voltage raised to 1.11 V in the motherboard BIOS.
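The all-core frequency is simply the CPU multiplier times the base clock (BCLK). A trivial sketch with the settings used here; the function name is our own.

```python
def core_clock_ghz(multiplier: int, bclk_mhz: float) -> float:
    """Core frequency = CPU multiplier x base clock (BCLK), returned in GHz."""
    return multiplier * bclk_mhz / 1000.0


# Settings used for the Core i9-7900X in this test
print(core_clock_ghz(44, 100.0))
```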

The 16 GB of RAM ran in quad-channel mode at 3.0 GHz with 16-16-16-28 CR1 timings at 1.35 V. Testing began on September 23, 2017, under Microsoft Windows 10 Pro (version 1703, build 15063.608) with all updates available as of that date and the following drivers installed:

motherboard chipset: Intel Chipset Drivers 10.1.1.44 WHQL from May 24, 2017;

Intel Management Engine Interface (MEI): 11.7.0.1037 WHQL from September 14, 2017;

NVIDIA GPU drivers: GeForce 385.69 WHQL from September 21, 2017;

AMD GPU drivers: Crimson ReLive 17.9.2 beta from September 21, 2017.

In today’s testing we used only the 2560 x 1440 resolution. Two graphics quality modes were tested: Quality + AF16x, with the driver’s default texture quality and 16x anisotropic filtering, and Quality + AF16x + MSAA 4x/8x, which adds 4x or 8x full-screen anti-aliasing. In some games, owing to the specifics of their engines, other anti-aliasing methods were used; these are noted later in the methodology and on the charts. Anisotropic filtering and full-screen anti-aliasing were enabled in the game settings; where a game lacked these settings, they were set in the GeForce or Crimson driver control panel, where V-Sync was also forcibly disabled.

As in our review of the ASUS ROG Strix Radeon RX 580 8 GB, the cards were tested in two synthetic benchmarks and fourteen games, all updated to the latest versions available when this article was prepared. The test applications are listed below (the games, and their results later on, are arranged in order of official release):

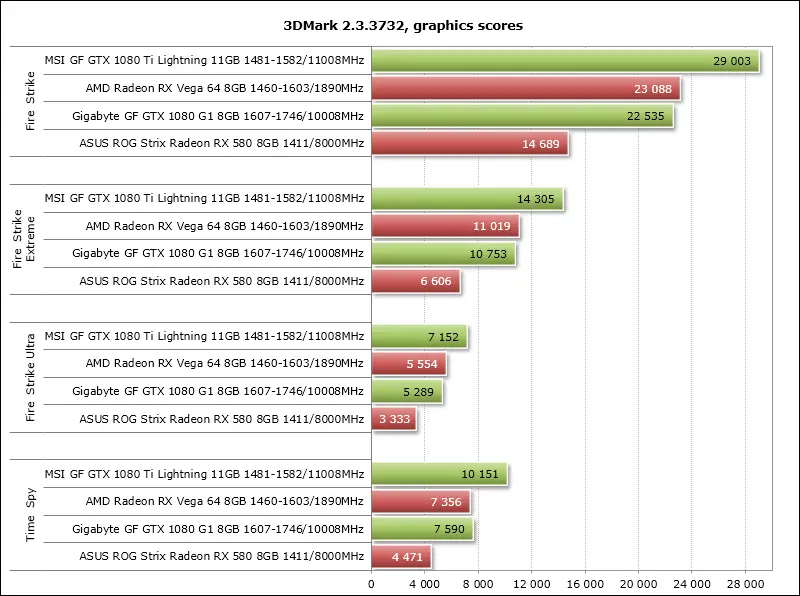

3DMark (DirectX 9/11/12) – version 2.3.3732, tests in the Fire Strike, Fire Strike Extreme, Fire Strike Ultra and Time Spy scenes (the charts show the Graphics score);

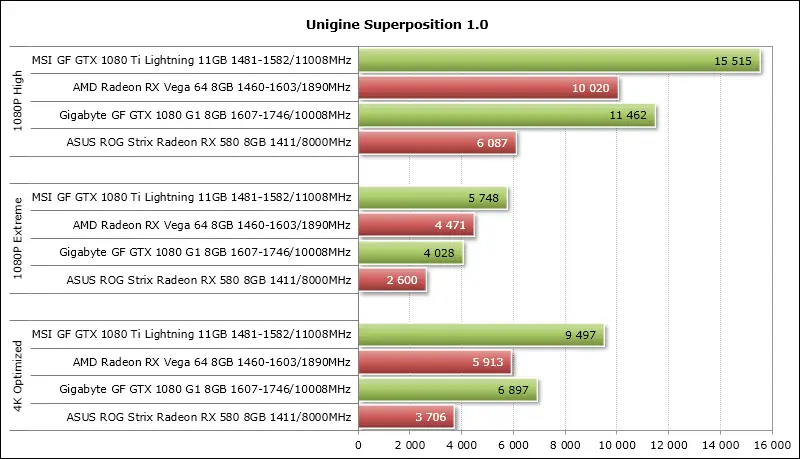

Unigine Superposition (DirectX 11) – version 1.0, tested in 1080P High, 1080P Extreme and 4K Optimized settings;

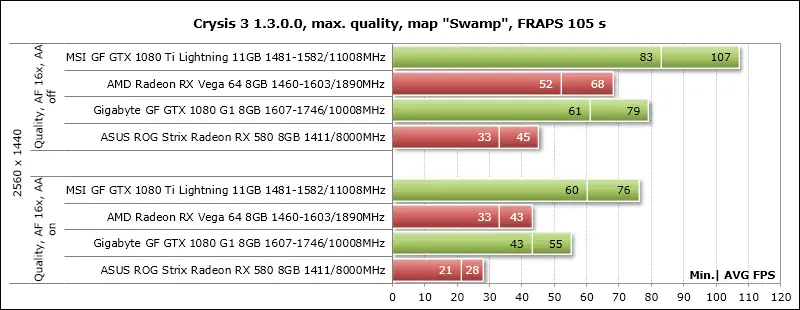

Crysis 3 (DirectX 11) – version 1.3.0.0, all graphics quality settings at maximum, motion blur at medium, reflections on, modes with FXAA and with MSAA 4x, two consecutive runs of a 105-second scripted scene from the beginning of the Swamp mission;

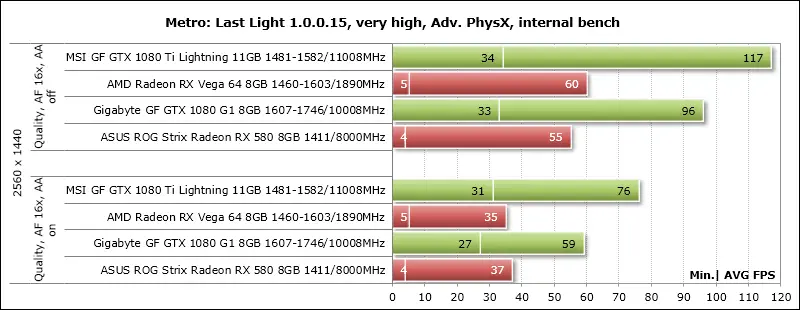

Metro: Last Light (DirectX 11) – version 1.0.0.15, built-in benchmark, graphics quality and tessellation at Very High, Advanced PhysX enabled, tests with SSAA and without anti-aliasing, two consecutive runs of the D6 scene;

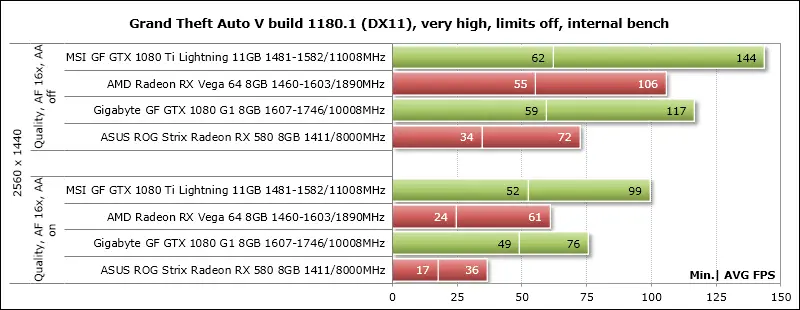

Grand Theft Auto V (DirectX 11) – build 1180.1, quality settings at Very High, Ignore Suggested Limits enabled, V-Sync disabled, FXAA enabled, NVIDIA TXAA disabled, MSAA for reflections disabled, NVIDIA soft shadows;

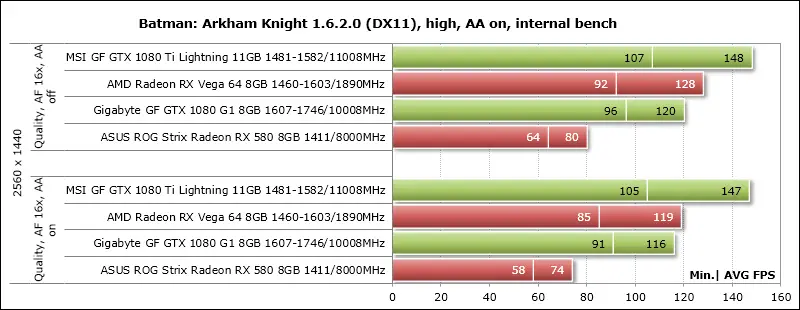

Batman: Arkham Knight (DirectX 11) – version 1.6.2.0, quality settings at High, Texture Resolution Normal, Anti-Aliasing on, V-Sync disabled, tests in two modes, with and without the last two NVIDIA GameWorks options enabled, two consecutive runs of the built-in benchmark;

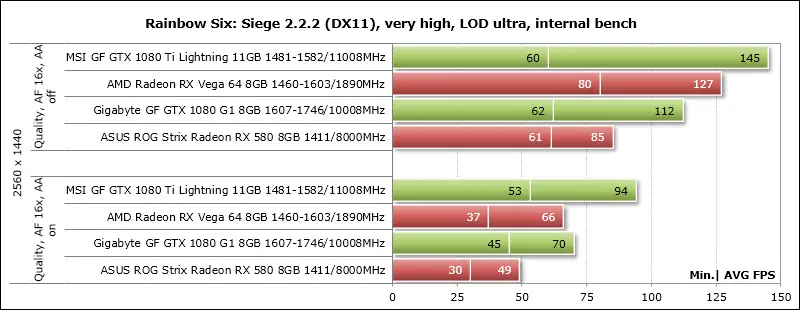

Tom Clancy’s Rainbow Six: Siege (DirectX 11) – version 2.2.2, texture quality at Very High, Texture Filtering – Anisotropic 16X and all other settings at maximum quality, tests with MSAA 4x and without anti-aliasing, two consecutive runs of the built-in benchmark;

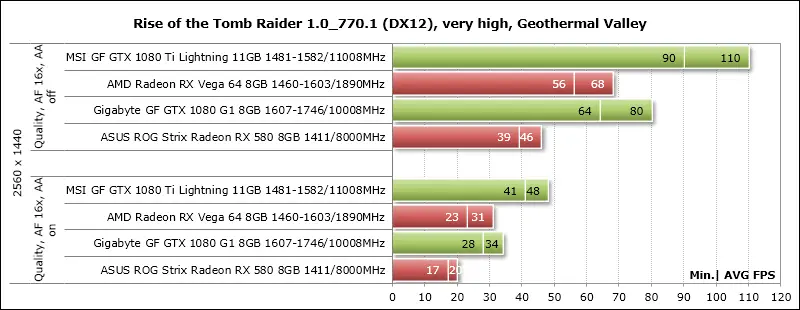

Rise of the Tomb Raider (DirectX 12) – version 1.0 build 770.1_64, all parameters at Very High, Dynamic Foliage – High, Ambient Occlusion – HBAO+, tessellation and other quality enhancements enabled, two runs of the built-in benchmark (Geothermal Valley scene) without anti-aliasing and with SSAA 4.0;

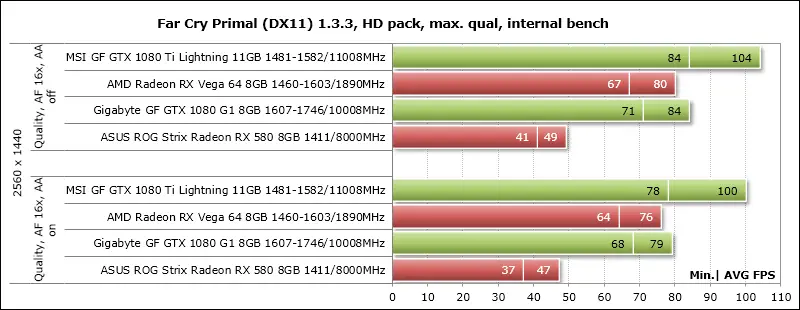

Far Cry Primal (DirectX 11) – version 1.3.3, maximum quality level, high-resolution textures, volumetric fog and shadows at maximum, built-in benchmark without anti-aliasing and with SMAA Ultra enabled;

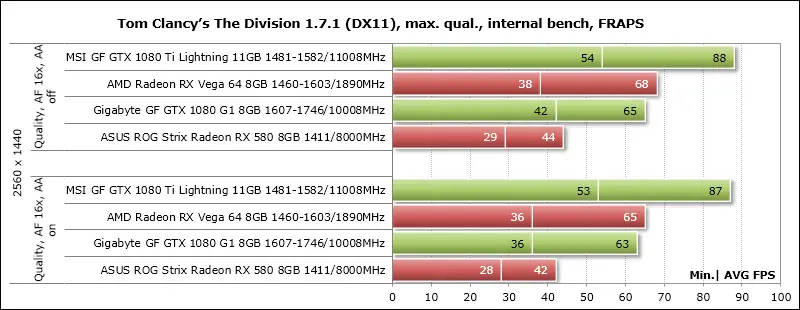

Tom Clancy’s The Division (DirectX 11) – version 1.7.1, maximum quality level, all image enhancement options enabled, Temporal AA – Supersampling, tests without anti-aliasing and with SMAA 1X Ultra, built-in benchmark with results recorded by FRAPS;

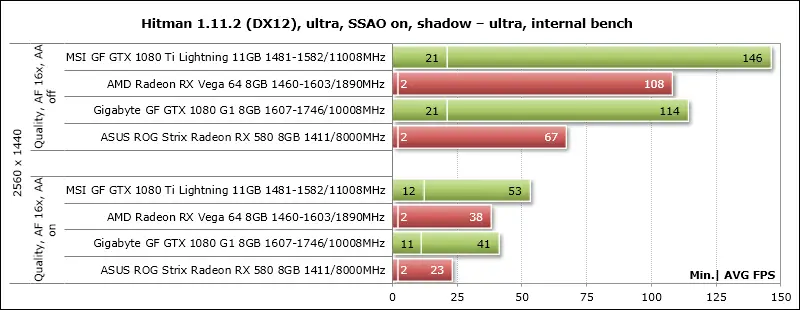

Hitman (DirectX 12) – version 1.12.1, built-in benchmark with graphics quality at Ultra, SSAO enabled, shadow quality Ultra, memory safeguards disabled;

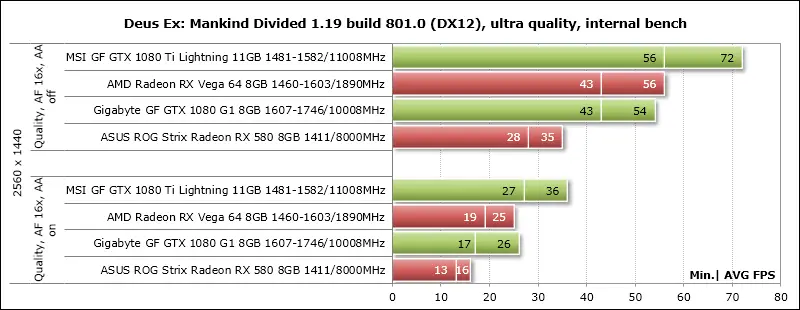

Deus Ex: Mankind Divided (DirectX 12) – version 1.19 build 801.0, all quality settings manually set to maximum, tessellation and depth of field enabled, at least two consecutive runs of the built-in benchmark;

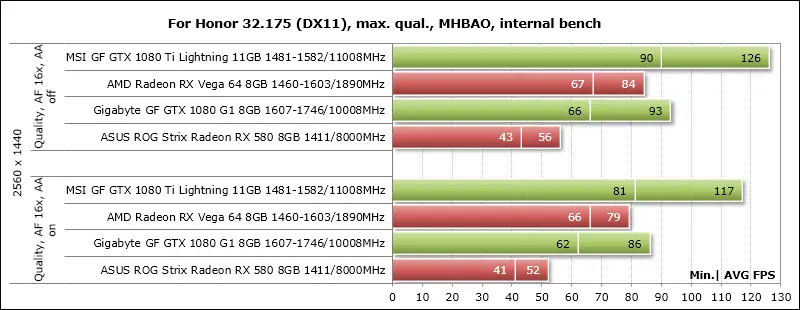

For Honor (DirectX 11) – version 32.175, maximum graphics quality settings, ambient occlusion – MHBAO, dynamic reflections and blur effect enabled, anti-aliasing oversampling disabled, tests without anti-aliasing and with TAA, two consecutive runs of the built-in benchmark;

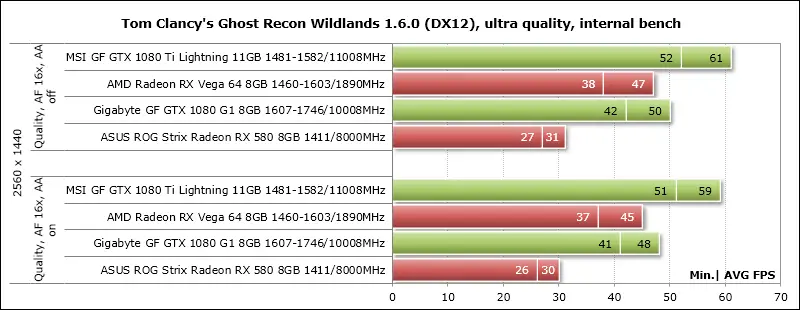

Tom Clancy’s Ghost Recon Wildlands (DirectX 12) – version 1.6.0, graphics quality at maximum or Ultra, all options enabled, tests without anti-aliasing and with SMAA + FXAA, two consecutive runs of the built-in benchmark;

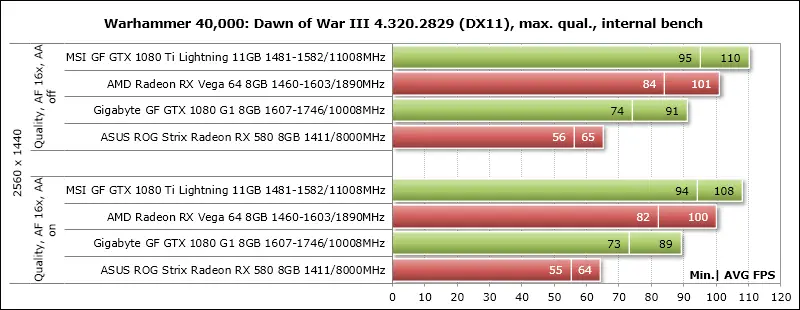

Warhammer 40,000: Dawn of War III (DirectX 11) – version 4.320.2829.17945, all graphics quality settings at maximum, two consecutive runs of the built-in benchmark;

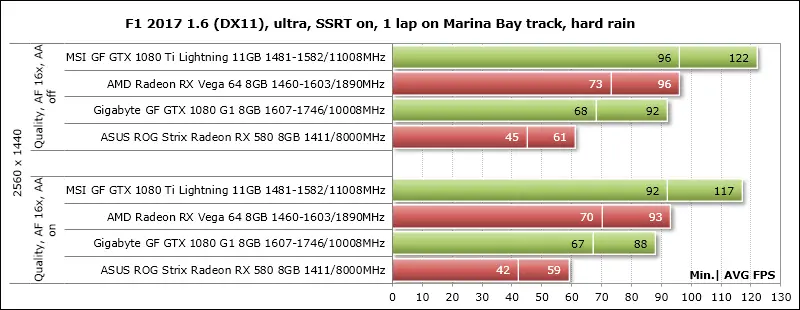

F1 2017 (DirectX 11) – version 1.6, built-in benchmark on the Marina Bay circuit in Singapore in heavy rain, all graphics quality settings at maximum, SSRT shadows enabled, tests with TAA and without anti-aliasing.

Where a game can record the minimum frame rate, it is also shown on the charts. Each test was run twice, and the better of the two results was taken as final, but only if they differed by no more than 1%. If the runs differed by more than 1%, the test was repeated at least once more to obtain a reliable result.
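The acceptance rule above can be expressed as a short routine. This is a sketch under assumptions, not our actual tooling: the 1% difference is measured relative to the larger of two results, "better" means the higher frame rate, frame rates are positive, and after a repeat run any pair of runs that agree within tolerance is accepted. The function name is hypothetical.

```python
from typing import Optional, Sequence


def final_result(runs: Sequence[float], tolerance: float = 0.01) -> Optional[float]:
    """Return the accepted FPS value from benchmark runs in the order performed.

    Accepts the better (higher) result of the first pair of runs whose relative
    difference, measured against the larger value, is within `tolerance`.
    Returns None if no pair agrees yet, meaning another run is needed.
    """
    for i in range(1, len(runs)):
        for j in range(i):
            a, b = runs[j], runs[i]
            if abs(a - b) / max(a, b) <= tolerance:
                return max(a, b)
    return None


print(final_result([60.0, 60.5]))        # runs agree: best of the two is kept
print(final_result([60.0, 62.0]))        # >1% apart: repeat the test
print(final_result([60.0, 62.0, 61.8]))  # third run agrees with the second
```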

3. Results of tests of performance of video cards

3DMark

Unigine Superposition

Crysis 3

Metro: Last Light

Grand Theft Auto V

Batman: Arkham Knight

Tom Clancy’s Rainbow Six: Siege

Rise of the Tomb Raider

Far Cry Primal

Tom Clancy’s The Division

Hitman

Deus Ex: Mankind Divided

For Honor

Tom Clancy’s Ghost Recon Wildlands

Warhammer 40,000: Dawn of War III

F1 2017

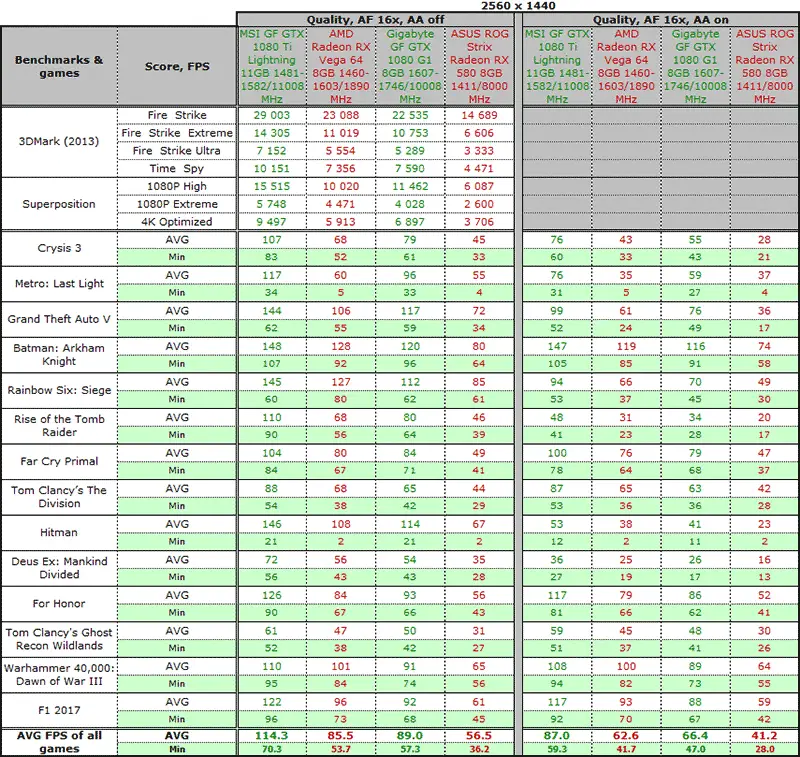

We supplement the charts with a summary table of test results showing the average and minimum frame rates for each card.

We analyze the individual results using the summary charts.

4. Summary charts and analysis of results

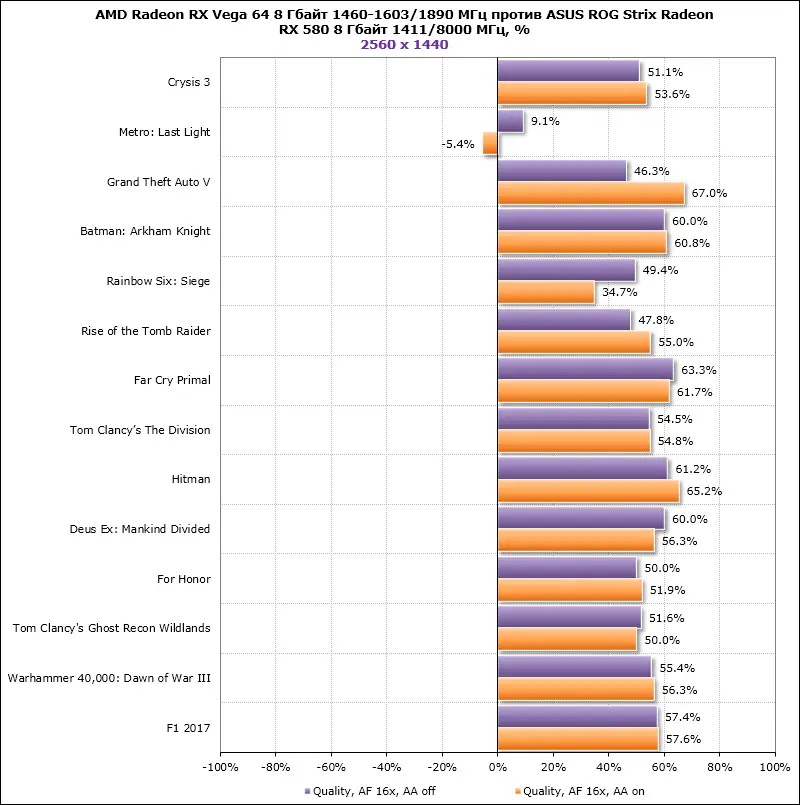

The first summary chart compares the AMD Radeon RX Vega 64 with the ASUS ROG Strix Radeon RX 580. Of course, for the price of one Vega 64 you can buy a pair of RX 580s and run them in CrossFireX, but in practice this comparison is useful for those who plan to upgrade from a Radeon RX 580 to a Radeon RX Vega 64.

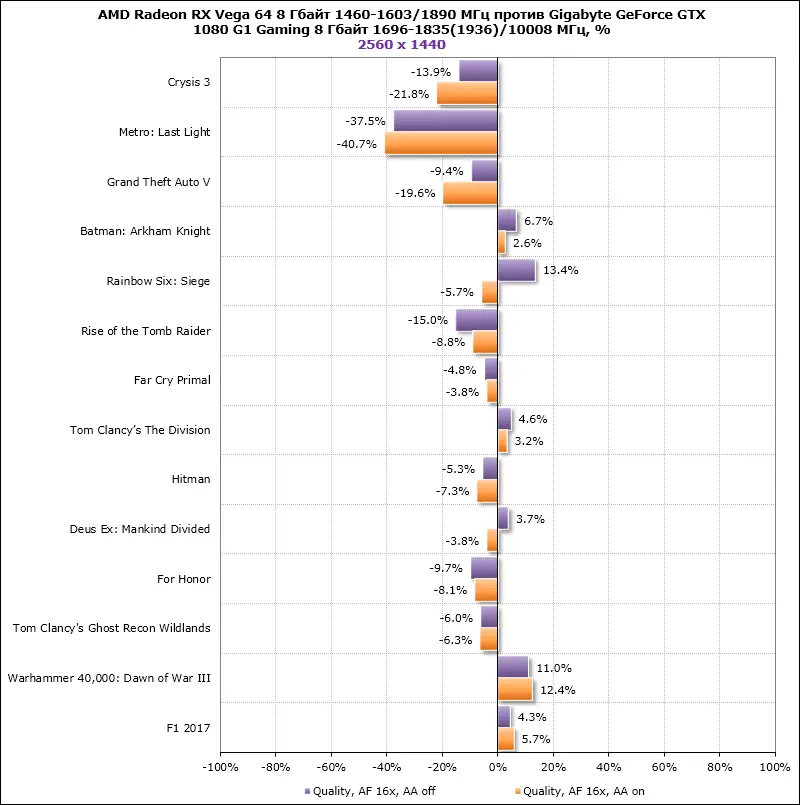

Except in Metro: Last Light, where AMD cards are held back by NVIDIA Advanced PhysX, the difference is quite noticeable in every game and averages more than 51% across all games in both quality modes. Next, we compare the reference AMD Radeon RX Vega 64 with the custom Gigabyte GeForce GTX 1080 G1 Gaming; the latter’s results are taken as the zero axis, and the Radeon RX Vega 64’s results are plotted as a percentage difference from them.
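The percentage plotted on a chart like this can be computed per test as the relative difference from the baseline card, here the GTX 1080 G1 Gaming on the zero axis. A minimal sketch assuming that standard definition; the frame rates in the usage lines are hypothetical placeholders, not our results.

```python
def relative_percent(card_fps: float, baseline_fps: float) -> float:
    """Difference of card_fps from baseline_fps in percent (baseline = zero axis).

    Positive values mean the card is faster than the baseline, negative slower.
    """
    return (card_fps / baseline_fps - 1.0) * 100.0


# Hypothetical frame rates, for illustration only
print(f"{relative_percent(66.0, 60.0):+.1f}%")
print(f"{relative_percent(54.0, 60.0):+.1f}%")
```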

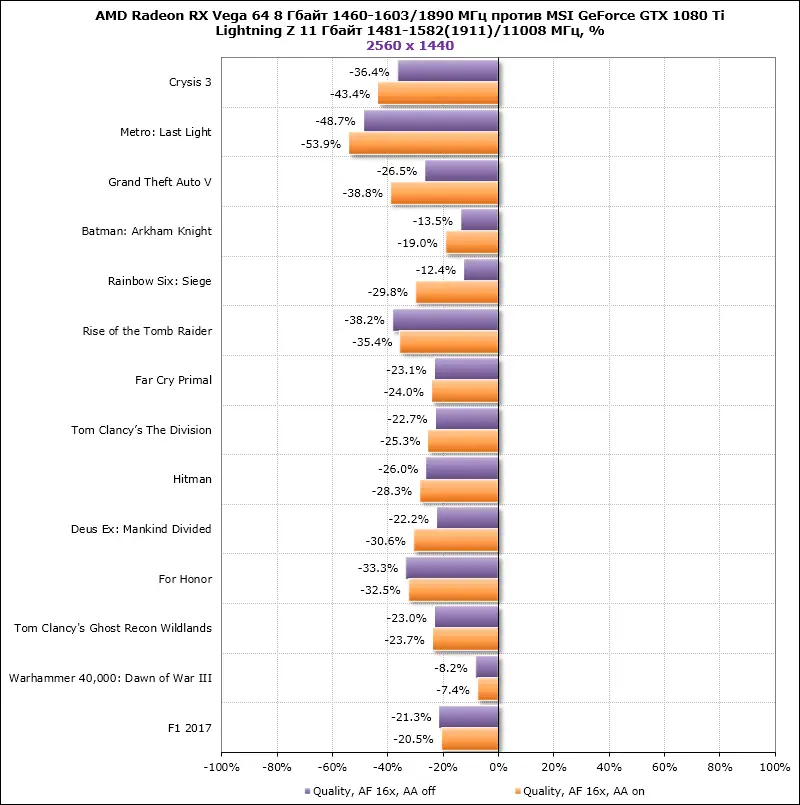

Only in older games such as Crysis 3, Metro: Last Light and Grand Theft Auto V does the Vega 10 card fall noticeably behind; elsewhere the results are much closer. However, in absolute numbers the AMD Radeon RX Vega 64 beat the GeForce GTX 1080 only in Batman: Arkham Knight, Tom Clancy’s The Division, Warhammer 40,000: Dawn of War III and F1 2017, as well as in Rainbow Six: Siege and Deus Ex: Mankind Divided without anti-aliasing. Otherwise, the Gigabyte GeForce GTX 1080 G1 Gaming is both faster and cheaper. The third summary chart shows how much AMD would need to raise the performance of its flagship to compete with NVIDIA’s current flagship: it compares the Radeon RX Vega 64 with the MSI GeForce GTX 1080 Ti Lightning.

As you can see, the gap is substantial. Averaged over all games, AMD’s fastest single-GPU card is 25.4% slower than NVIDIA’s in modes without anti-aliasing and 29.5% slower with anti-aliasing enabled. In other words, AMD is a generation behind in the top segment. Let’s hope it closes this gap, especially since NVIDIA is in no hurry to bring Volta to market.
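Two points are worth keeping in mind when reading averages like these. First, we assume here that the figure is an arithmetic mean of per-game percentages measured against the GTX 1080 Ti as the baseline. Second, "X% slower" is not the same as the faster card being "X% faster": if the Radeon delivers (1 − 0.254) of the baseline’s frame rate, the baseline is about 34% faster. A sketch of both calculations; the function names are our own, and the per-game values in the first usage line are hypothetical placeholders.

```python
from statistics import mean
from typing import Sequence


def average_gap(per_game_percent: Sequence[float]) -> float:
    """Arithmetic mean of per-game relative results (percent vs. baseline)."""
    return mean(per_game_percent)


def baseline_lead(slower_by_percent: float) -> float:
    """If a card trails the baseline by `slower_by_percent` %, return how many
    percent faster the baseline is than that card."""
    ratio = 1.0 - slower_by_percent / 100.0  # card FPS / baseline FPS
    return (1.0 / ratio - 1.0) * 100.0


# Hypothetical per-game values, for illustration only
print(average_gap([-20.0, -30.0, -26.0]))
# Averages reported in the text (without / with anti-aliasing)
for gap in (25.4, 29.5):
    print(f"{gap}% slower -> baseline {baseline_lead(gap):.1f}% faster")
```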

5. Power consumption

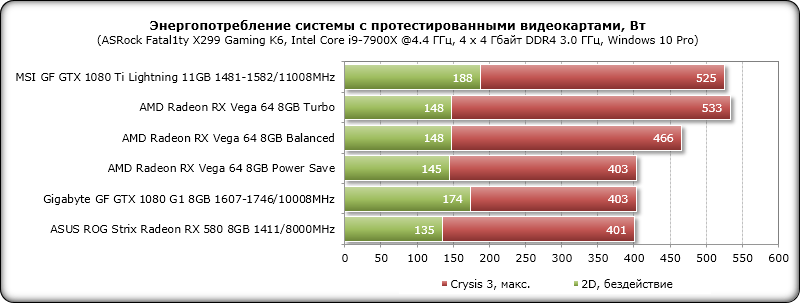

Power consumption was measured with the Corsair AX1500i power supply via the Corsair Link interface and the Corsair Link software, version 4.8.3.8. We measured the consumption of the whole system, excluding the monitor, in 2D mode during ordinary work in Microsoft Word or web browsing, and in 3D mode. The 3D load was four consecutive loops of the intro scene of the Swamp level in Crysis 3 at 2560 x 1440 pixels with maximum graphics quality and MSAA 4X. CPU power-saving technologies were disabled in the motherboard BIOS. We tested the AMD Radeon RX Vega 64 in three operating modes: Power Save, Balanced and Turbo.

Let’s look at the power consumption of systems with each of the cards tested today.

A system with the AMD Radeon RX Vega 64 matches the power consumption of a GeForce GTX 1080 configuration only in Power Save mode. In Balanced mode it already draws 63 watts more at peak load, and Turbo mode adds another 67 watts, for a total of 533 watts, which exceeds the consumption of the GeForce GTX 1080 Ti configuration. So AMD should not only improve the performance of its flagship but also try to make it more energy efficient. At the same time, we note the high efficiency of the AMD-based cards in 2D, regardless of the selected operating mode.
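The per-mode system totals can be reconstructed from the two reported increments. This sketch assumes the 63 W step is measured from Power Save to Balanced and the 67 W step from Balanced to Turbo; only the 533 W Turbo total is stated directly, so the other two totals it prints are inferred, not separately measured.

```python
# Reported figures (whole-system peak load, watts)
TURBO_TOTAL_W = 533
TURBO_OVER_BALANCED_W = 67
BALANCED_OVER_POWER_SAVE_W = 63  # assumption: step measured from Power Save

balanced_w = TURBO_TOTAL_W - TURBO_OVER_BALANCED_W       # inferred
power_save_w = balanced_w - BALANCED_OVER_POWER_SAVE_W   # inferred
turbo_increase = (TURBO_TOTAL_W / power_save_w - 1.0) * 100.0

print(f"Power Save ~{power_save_w} W, Balanced ~{balanced_w} W, Turbo {TURBO_TOTAL_W} W")
print(f"Turbo: ~{turbo_increase:.0f}% more system power than Power Save")
```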

Conclusion

The AMD Radeon RX Vega 64 turned out to be, if not a promising card, then at least a very interesting one, despite the shortages and the long-delayed arrival of custom models. Yes, in its reference version it struggled to compete with the wide range of custom GeForce GTX 1080s on the market, especially as the Radeon RX Vega 64 is more expensive, but the situation is now changing for the better. And judging by forum reports, AMD is gradually bringing Vega’s performance in new games up to the level of its main competitor with fresh drivers, so things should get even more interesting. However, most ordinary users will not find it easy to cope with the high power consumption of the AMD Radeon RX Vega 64, although in principle this can be done by lowering the voltage and further tuning the card. Without such tuning, the Radeon RX Vega 64 draws more power than even the much faster GeForce GTX 1080 Ti. We hope to test a custom AMD Radeon RX Vega 56 soon, with the newest drivers and an updated set of games.